digitalis.io was founded just over 2.5 years ago, to provide expertise in complex distributed data platforms. We provide both consulting services and managed services for Cassandra, Kafka, Spark, Elasticsearch, DataStax Enterprise, Confluent and more.

In the beginning we used a number of popular open source tools to implement monitoring and alerting for our managed services, namely Prometheus, Grafana, ELK, Consul and Ansible. These are fantastic open source tools and they have served us well, giving us the confidence to manage enterprise deployments of distributed data platforms: they alert us when there are problems, let us diagnose issues quickly, and automatically perform routine scheduled tasks.

Over time we found that these tools had become the focal point, instead of the products we are supposed to be looking after. Each of these open source tools requires frequent updates and configuration changes, imposing a significant amount of management effort to keep on top of it all. The complexity of the tools' deployment architecture also made the stack slow to deploy for our customers, particularly those in highly regulated industries such as financial services and healthcare.

We went back to the drawing board with the aim of reducing the effort needed to on-board new customers, and made a wish list.

- On-premises / cloud deployment

- Single dashboard for metrics / logs / service health

- Simple alert rules configurations

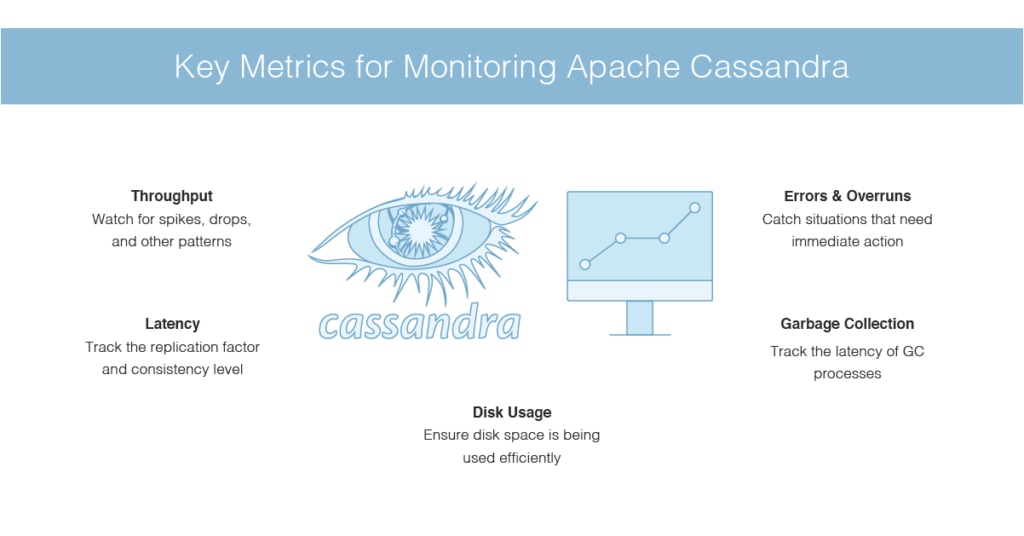

- Capture all metrics at high resolution (with Cassandra there are well over 20,000 metrics!)

- Capture logs and internal events like authentication, DDL, DML etc

- Scheduled backup / restore feature

- Performs domain-specific administrative tasks, including Cassandra repair

- Manages the following products:

  - Apache Cassandra

  - Apache Kafka

  - DataStax Enterprise

  - Confluent Enterprise

  - Elasticsearch

  - Apache Spark

  - etc.

- Simplified deployment model

  - Single agent for collecting metrics, logs, events and configs

  - The same agent executes health checks, backups and restores

  - Single socket connection initiated by the agent to the management server, requiring only simple firewall rules

  - Bi-directional communication between agent and management server over the single socket

- Modern snappy GUI

- Manages Cassandra, Kafka, Elasticsearch etc.

What the tool is not:

- Generic software like Grafana and ELK requiring a lot of custom configurations.

- A database: our software is stateless and plugs into external databases. It currently supports only Elasticsearch, but we are looking to add others.

The target deployment architecture needed to be as simple as possible.

We evaluated many operational tools, both commercial and open source. Unfortunately, there was nothing on the market that satisfied our wish list, which led us to the decision to build this ourselves!

Build it

We imagineered and built the tool, and called it AxonOps. It consists of just four components (Java agent, native agent, server, and GUI), making it extremely simple to deploy on any infrastructure, as long as it runs Linux!

Agent

The AxonOps agent makes a single socket connection to the server for transporting the following:

- Logs

- Metrics

- Events

- Configurations

Agent-server connectivity works securely over standard web infrastructure. To prove it, we have successfully tested the connection over the internet and through load balancers before it reaches the backend AxonOps server.
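To illustrate how one connection can carry several kinds of payload, here is a minimal Python sketch of length-prefixed framing with a message-type byte. The frame layout, the type codes and the function names are all assumptions made for illustration; this post does not describe the actual AxonOps wire protocol.

```python
import struct

# Hypothetical message-type codes, invented for this sketch.
MSG_TYPES = {"logs": 1, "metrics": 2, "events": 3, "configs": 4}
TYPE_NAMES = {code: name for name, code in MSG_TYPES.items()}

# Assumed frame header: 1-byte message type + 4-byte big-endian payload length.
HEADER = struct.Struct(">BI")


def encode_frame(kind, payload):
    """Wrap a payload so it can share one byte stream with other message kinds."""
    return HEADER.pack(MSG_TYPES[kind], len(payload)) + payload


def decode_frames(stream):
    """Split a byte stream into (kind, payload) pairs.

    Returns the decoded frames plus any trailing bytes belonging to an
    incomplete frame, which a real reader would keep until more data arrives.
    """
    frames, offset = [], 0
    while offset + HEADER.size <= len(stream):
        code, length = HEADER.unpack_from(stream, offset)
        end = offset + HEADER.size + length
        if end > len(stream):
            break  # partial frame: wait for more bytes
        frames.append((TYPE_NAMES[code], stream[offset + HEADER.size:end]))
        offset = end
    return frames, stream[offset:]
```

Because every frame names its own type and length, either side can interleave logs, metrics, events and configurations on the same socket, and the receiver can dispatch each frame without needing a second connection.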

We have put a mammoth effort into making the agent as efficient as possible. We carefully defined and crafted a network protocol to keep the bandwidth requirements very low, even when shipping over 20,000 metrics every 5 seconds. This was unthinkable with our previous setup of jmx_exporter and Prometheus, which required us to throw away most of the metrics. Now we have all the metrics at hand at a much higher resolution.

We avoided using JMX when connecting to the JVM because, as many of you will be aware, scraping a large number of metrics over JMX causes CPU spikes. Instead, we built a Java agent that pushes all metrics to the native Linux AxonOps agent running on the same server. The Java agent also captures various internal events including authentication, JMX events (when people execute nodetool commands), DDL, DCL, etc., which are then shipped to the server, monitored, and stored in Elasticsearch for queries.

Server

We built our server in Golang. Having spent many years building JVM-based applications in the past, we are extremely impressed and pleased with the double-digit megabyte memory footprint!

The AxonOps server provides the endpoint for agents to connect to, as well as the API for the GUI.

The metrics API we implemented is Prometheus compatible. Our dashboard provides a comprehensive set of charts, but your existing Grafana can also be connected to AxonOps server to integrate with other dashboards.
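As a rough sketch of what "Prometheus compatible" means for a client, the Python below builds a range query in the style of the Prometheus HTTP API (`/api/v1/query_range`) and flattens a matrix-style response. That AxonOps serves this exact path, the host name, and the metric name in the usage note are assumptions; only the request and response shapes follow the Prometheus API itself.

```python
from urllib.parse import urlencode


def build_range_query_url(base_url, promql, start, end, step):
    """Build a Prometheus-style range-query URL (GET /api/v1/query_range)."""
    params = urlencode({"query": promql, "start": start, "end": end, "step": step})
    return f"{base_url.rstrip('/')}/api/v1/query_range?{params}"


def extract_series(response):
    """Map each series' label set to a list of (timestamp, float value) pairs.

    Expects the Prometheus "matrix" result format, where sample values
    arrive as strings and are converted to floats here.
    """
    if response.get("status") != "success":
        raise ValueError(f"query failed: {response.get('error')}")
    series = {}
    for item in response["data"]["result"]:
        key = tuple(sorted(item["metric"].items()))
        series[key] = [(ts, float(val)) for ts, val in item["values"]]
    return series
```

For example, `build_range_query_url("http://axonops.example:8080", "some_metric", 1600000000, 1600000300, "5s")` yields a URL that a Prometheus-style client could fetch; both the host and `some_metric` are placeholders.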

AxonOps currently persists all its configurations, metrics, logs, and events in Elasticsearch. We need Elasticsearch for the storage of events and logs to make them searchable. For this initial version we decided to use Elasticsearch for all of AxonOps' persistence requirements, for simplicity. However, we are acutely aware of the more efficient time series databases available on the market, and it is on our roadmap to add support for these.

GUI

The GUI is built as a single executable Linux binary file containing all assets, using the Node.js and React.js frameworks. We decided to use the Material Design look and feel, with the aim of making the GUI snappy and intuitive to use.

Some of the features we have implemented are described below.

GUI – Dashboard with Metrics and Logs

We took inspiration from Grafana and ELK when designing our dashboards, but embedded both charts and logs in a single view, with one time range governing the display of both. Alert rules can be defined graphically on each chart, and alerts can be routed to PagerDuty and Slack.

GUI – Service Healthcheck

Having a service health dashboard that gives us a quick RAG (red/amber/green) status is extremely important to us. Before we built AxonOps, systems like Nagios and Consul provided this functionality for us. Again, we wanted this integrated into the solution.

We have built this in such a way that the configurations can be dynamically updated and pushed out to the agents. This means we do not have to deploy any scripts to the individual target servers. We have implemented three types of checks, which cover all of our use cases:

- shell

- http

- tcp
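The three check types can be sketched as a single dispatcher driven by a configuration dictionary, which is the kind of thing a server could push to an agent. This is a minimal Python illustration only: the config keys (`type`, `command`, `url`, `host`, `port`, `timeout`) and the function name are hypothetical, not the AxonOps configuration format.

```python
import socket
import subprocess
import urllib.request


def run_check(check):
    """Run one health check described by a config dict; return True if healthy."""
    timeout = check.get("timeout", 5)
    try:
        if check["type"] == "shell":
            # Healthy when the command exits with status 0.
            result = subprocess.run(check["command"], shell=True,
                                    timeout=timeout, capture_output=True)
            return result.returncode == 0
        if check["type"] == "http":
            # urlopen raises HTTPError (an OSError subclass) on 4xx/5xx responses.
            with urllib.request.urlopen(check["url"], timeout=timeout) as resp:
                return 200 <= resp.status < 300
        if check["type"] == "tcp":
            # Healthy when a TCP connection can be established.
            with socket.create_connection((check["host"], check["port"]),
                                          timeout=timeout):
                return True
    except (OSError, subprocess.TimeoutExpired):
        return False
    raise ValueError(f"unknown check type: {check['type']}")
```

Since each check is plain data, updating a check means sending a new dictionary rather than deploying a script to the target server.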

GUI – Adaptive Regulation of Repair

Repair is one of the most difficult aspects of managing Cassandra clusters. There are only a few tools available, the most popular being Reaper. I was once told by an engineer at Spotify that the name was derived from mispronouncing the word “repair” with a Swedish accent! Anyway, we did go through the Reaper code to see if it might work for us. After that analysis we decided to implement our own.

Since AxonOps collects performance metrics and logs, we theorised a slightly more sophisticated approach than Reaper – an “Adaptive” repair system which regulates the velocity (parallelism and pauses between each subrange repair) based on performance trending data. The regulation of repair velocity takes input from various metrics including CPU utilisation, query latencies, Cassandra thread pools pending statistics, and IOwait percentage, while tracking the schedule of repair based on gc_grace_seconds for each table.

The idea of this is to achieve the following:

- Completion of repair within gc_grace_seconds of each table

- Repair process does not affect query performance

In essence, the adaptive repair regulator slows down the repair velocity when it deems that load is going to be high, based on the rate at which load is increasing, and speeds up to catch up with the repair schedule when resources are more readily available.
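The regulation idea can be sketched in a few lines of Python. This is a deliberately simplified illustration under stated assumptions, not the AxonOps algorithm: it uses a single load series rather than several metrics, and the thresholds, step sizes and all names (`RepairVelocity`, `regulate`, `is_behind_schedule`) are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class RepairVelocity:
    parallelism: int      # subrange repairs running at once
    pause_seconds: float  # pause between subrange repairs


def load_gradient(samples):
    """Average change per sample interval across a window of load readings."""
    if len(samples) < 2:
        return 0.0
    return (samples[-1] - samples[0]) / (len(samples) - 1)


def is_behind_schedule(done_fraction, elapsed_seconds, gc_grace_seconds):
    """True if less of the repair is done than the share of gc_grace_seconds used."""
    return done_fraction < elapsed_seconds / gc_grace_seconds


def regulate(current, load_samples, behind_schedule,
             rising_threshold=0.02, max_parallelism=4):
    """Return the next repair velocity given recent load and schedule state."""
    gradient = load_gradient(load_samples)
    if gradient > rising_threshold:
        # Load is climbing: reduce parallelism and lengthen the pause.
        return RepairVelocity(max(1, current.parallelism - 1),
                              max(1.0, current.pause_seconds * 2))
    if behind_schedule and gradient <= 0:
        # Load is flat or falling and repair is late: speed up.
        return RepairVelocity(min(max_parallelism, current.parallelism + 1),
                              current.pause_seconds / 2)
    return current
```

With Cassandra's default `gc_grace_seconds` of 864000 (10 days), a table that is 25% repaired after 5 days would count as behind schedule in this sketch, so the regulator would speed up as soon as load stops rising.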

There is another reason why we decided not to go with Reaper. Reaper requires JMX access from the server, which does not fit well with the AxonOps single-socket connection model. The adaptive repair service running on the AxonOps server orchestrates repairs and issues commands to the agents over this existing connection.

From a user’s point of view, there is only a single switch to enable this service. Keep this enabled and AxonOps will take care of the repair of all tables for you. We are also looking into implementing adaptive compaction control using a similar logic to the adaptive repair.

GUI – Backup & Restore

Scheduled backup & restore is another requirement for our customers. We have added this feature in a way that lets it integrate flexibly with the various backup solutions our customers use. It schedules Cassandra snapshots, with an option to attach pre- and post-snapshot script execution to each schedule. These scripts are defined on the AxonOps server side and pushed down dynamically to the agents at execution time, removing the need to have them deployed on each target server in advance. I should point out that the scripts are pushed down to the agent over the agent/server connection, so no SSH access is required.
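The pre/post hook sequence might look like the following Python sketch on the agent side. It assumes the scripts arrive as shell text and are run with `/bin/sh -c`, and it uses the standard `nodetool snapshot -t <tag>` command; the function name and the injectable `run` parameter are hypothetical and exist only so the sequence can be exercised without a Cassandra node.

```python
import subprocess


def run_snapshot(tag, pre_script=None, post_script=None, run=subprocess.run):
    """Run the optional pre script, take a snapshot, then run the optional post script.

    Returns the list of commands that were executed, in order.
    """
    steps = []
    if pre_script:
        steps.append(["/bin/sh", "-c", pre_script])
    steps.append(["nodetool", "snapshot", "-t", tag])
    if post_script:
        steps.append(["/bin/sh", "-c", post_script])
    for argv in steps:
        # check=True raises on a non-zero exit, aborting the remaining steps.
        run(argv, check=True)
    return steps
```

A post script here could, for instance, hand the snapshot directory to whatever backup tool a customer already uses, which is where the flexibility comes from.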

GUI – Notification and Alerting

We like to see our operational activities being reported into Slack. We are also heavily reliant on PagerDuty for alerting us on problems for our managed services customers. AxonOps naturally had to have the integrations built into it so event notifications or alerts can be sent to the tools we use!
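For readers wiring up similar integrations, the Python sketch below builds the request bodies for a Slack incoming webhook and a PagerDuty Events API v2 trigger event. It shows the public payload formats of those two services, not AxonOps internals; the function names are made up, and actually sending the bodies (an HTTP POST to your webhook URL, or to `https://events.pagerduty.com/v2/enqueue`) is left out.

```python
import json


def slack_payload(message):
    """Body for a Slack incoming webhook, which accepts JSON with a "text" field."""
    return json.dumps({"text": message}).encode("utf-8")


def pagerduty_payload(routing_key, summary, source, severity="critical"):
    """Body for a PagerDuty Events API v2 "trigger" event.

    severity must be one of: critical, error, warning, info.
    """
    return json.dumps({
        "routing_key": routing_key,
        "event_action": "trigger",
        "payload": {"summary": summary, "source": source, "severity": severity},
    }).encode("utf-8")
```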

AxonOps General Availability

We have built AxonOps for ourselves but we are excited about it and we’d like to share this with you. We are shortly going to make AxonOps available for anybody to download and use for free! Please send us an email to [email protected] if you are interested. We’re currently working on the documentation, website, and license but we’ll get in touch when we are ready for you to download.