There are three mechanisms for keeping your data in sync and consistent:

- Read Repair

- Hinted Handoff

- Anti-Entropy Repair

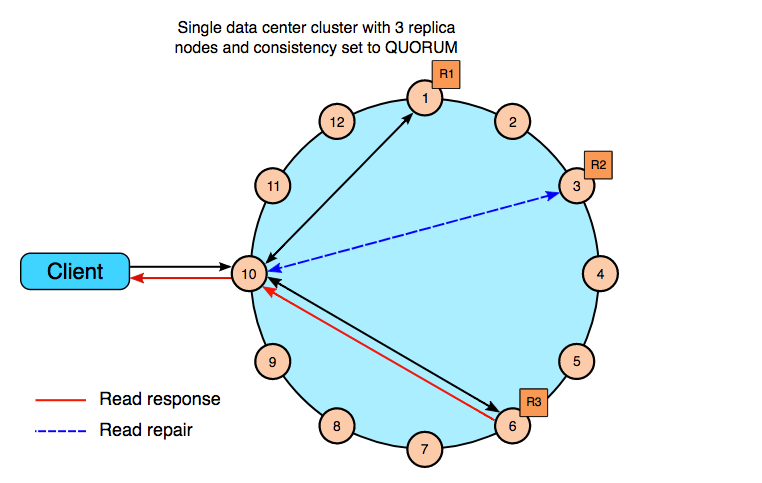

Read Repair

Read repair compares the values held by replicas and updates (repairs) any that are out of sync. It happens when a read is performed: the coordinator node then compares the…
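The idea behind read repair can be sketched in a few lines. This is a minimal, simplified model, not Cassandra's implementation. It assumes each replica stores a `(value, write_timestamp)` pair per key, that the newest timestamp wins (last-write-wins), and that the coordinator reads from every replica passed to it. The `Replica` class and the `read_with_repair` function are hypothetical names used only for illustration. A real implementation may compare digests rather than full values and only contacts as many replicas as the consistency level requires.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """Hypothetical in-memory replica: maps key -> (value, write_timestamp)."""
    name: str
    data: dict = field(default_factory=dict)

    def read(self, key):
        # Returns (value, timestamp), or None if this replica never saw the key.
        return self.data.get(key)

    def write(self, key, value, ts):
        self.data[key] = (value, ts)


def read_with_repair(replicas, key):
    """Coordinator-side read that repairs stale replicas (assumes last-write-wins)."""
    responses = {r.name: r.read(key) for r in replicas}
    present = [resp for resp in responses.values() if resp is not None]
    if not present:
        return None
    # Pick the response carrying the newest write timestamp.
    newest_value, newest_ts = max(present, key=lambda vt: vt[1])
    # Write the newest value back to every replica that is missing it or behind.
    for r in replicas:
        resp = responses[r.name]
        if resp is None or resp[1] < newest_ts:
            r.write(key, newest_value, newest_ts)
    return newest_value


if __name__ == "__main__":
    a, b, c = Replica("a"), Replica("b"), Replica("c")
    a.write("user:1", "alice@old.example", ts=1)  # stale copy
    b.write("user:1", "alice@new.example", ts=2)  # newest copy
    # Replica c never received the write at all.
    print(read_with_repair([a, b, c], "user:1"))  # alice@new.example
    print(a.read("user:1"))  # ('alice@new.example', 2)
    print(c.read("user:1"))  # ('alice@new.example', 2)
```

After the read, the stale replica `a` and the empty replica `c` both hold the newest value, so the next read finds all three replicas in agreement.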