To guarantee data consistency and cluster-wide data health, run a Cassandra repair and cleanup regularly, even when all nodes in the services infrastructure are continuously available. Regular Cassandra repair operations are especially important when data is explicitly deleted or written with a TTL value.

Schedule and perform repairs and cleanups during low-usage hours because they might affect system performance.

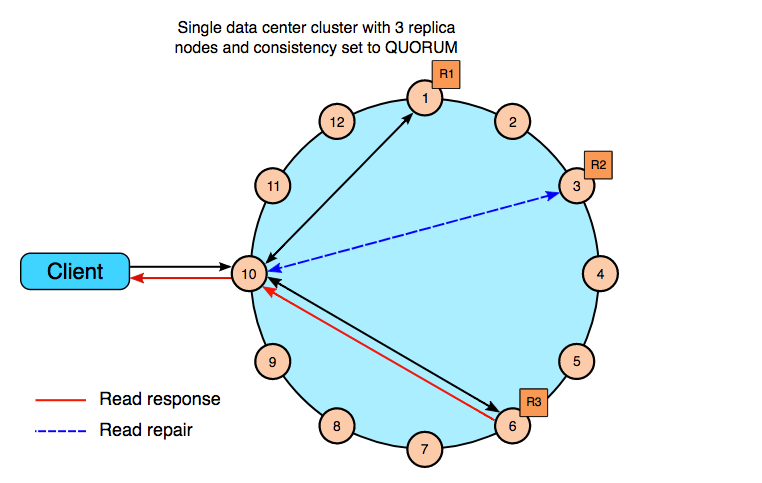

When you use NetworkTopologyStrategy, Cassandra is aware of the cluster topology, and each cluster node is assigned to a rack (an Availability Zone in AWS Cloud systems). Cassandra distributes the replicas of data written to the cluster evenly across the racks. When the replication factor equals the number of racks, each rack contains a full copy of all the data. With the default replication factor of 3 and a cluster of 3 racks, you can use this allocation to optimize repairs.
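To confirm the precondition described above before relying on single-rack repairs, you can count the racks that `nodetool status` reports and compare the count with the keyspace's replication factor. The following is a minimal sketch, assuming a single datacenter, that the Rack column is the last field on each node line, and that you supply the replication factor yourself; the function names `count_racks` and `rf_matches_racks` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: count the distinct racks listed in `nodetool status` output (read from stdin).
# Assumption: node lines start with a two-letter status/state code such as UN or DN,
# and the Rack column is the last field on each node line (single datacenter).
count_racks() {
  awk '/^[UD][NLJM] / { print $NF }' | sort -u | wc -l | tr -d ' '
}

# Succeeds (exit status 0) if the replication factor equals the number of racks,
# which means each rack should hold a full copy of the data.
rf_matches_racks() {
  local rf="$1" racks="$2"
  [ "$rf" -eq "$racks" ]
}
```

For example, `racks=$(nodetool status | count_racks)` followed by `rf_matches_racks 3 "$racks"` succeeds only when the cluster has exactly three racks.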

- At least once a week, schedule incremental repairs by using the following nodetool command:

`nodetool repair -inc -par`

When you run a repair without the `-pr` option, the repair covers both the token ranges that the node owns and the token ranges for which the node holds replicas of data owned by other nodes. The repair also runs on the other nodes that hold that data, so all the data for those token ranges is repaired across the cluster. Because a single rack owns or holds a replica of all the data in the cluster, running a repair on every node in one rack repairs the whole data set across the cluster.
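For a self-managed cluster, the single-rack approach can be scripted roughly as follows. This is a minimal sketch, not the Pega Cloud Services repair script: it assumes that the replication factor equals the number of racks, that you pass the hostnames of every node in one rack, and that a hypothetical `RUNNER` command (default `ssh`) can run `nodetool` on each host. Nodes are repaired one at a time, and the loop stops at the first failure.

```bash
#!/usr/bin/env bash
# Sketch: run an incremental, parallel repair on each node of ONE rack, one node
# at a time. Assumes RF == number of racks, so a single rack holds a full copy.
# RUNNER is a hypothetical hook for executing a command on a host; it defaults
# to ssh, and any command that accepts "<host> <command...>" works.
repair_single_rack() {
  local runner="${RUNNER:-ssh}"
  local host
  for host in "$@"; do
    echo "Repairing ${host}"
    # No -pr: each run also repairs the replica ranges that this node holds.
    "$runner" "$host" nodetool repair -inc -par || {
      echo "Repair failed on ${host}; stopping." >&2
      return 1
    }
  done
}
```

For example, `repair_single_rack cass-rack1-a cass-rack1-b cass-rack1-c` (hypothetical hostnames) repairs the three nodes of one rack in turn. To schedule it weekly in a low-usage window, a crontab entry such as `0 2 * * 0 /usr/local/bin/repair-rack1.sh` (hypothetical script path) starts it every Sunday at 02:00.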

In Pega Cloud Services environments, the repair scripts use database record locking to ensure that repairs run sequentially, one node at a time. The first node that starts the repair writes its Availability Zone (AZ) to the database. The other nodes check every minute to determine whether a new node is eligible to start the repair. An additional check determines whether the waiting node is in the same AZ as the first node to repair. If the node's AZ is the same, the node continues to check each minute; otherwise, the node drops out of the repair activity.
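The per-node decision described above can be modeled as a small function. This is a hypothetical sketch for illustration only: the real scripts keep the recorded AZ and the lock in a database record, whereas here both are passed in as arguments, and the names `repair_decision`, `locked`, and `free` are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical model of the per-node repair decision. NOT the Pega Cloud
# Services script; the arguments stand in for values kept in a database record.
#   $1  this node's availability zone
#   $2  AZ recorded by the first node to repair ("" if none recorded yet)
#   $3  "locked" if another node currently holds the repair lock, else "free"
# Prints one of: start, wait, skip.
repair_decision() {
  local my_az="$1" recorded_az="$2" lock_state="$3"
  if [ -z "$recorded_az" ]; then
    echo start   # first node: records its AZ, then repairs
  elif [ "$recorded_az" != "$my_az" ]; then
    echo skip    # different AZ: drop out of this repair activity
  elif [ "$lock_state" = "locked" ]; then
    echo wait    # same AZ, lock busy: check again in one minute
  else
    echo start   # same AZ, lock free: take the lock and repair
  fi
}
```

A waiting node would call `repair_decision` again every 60 seconds for as long as it prints `wait`.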

- Optional: Check the progress of the repair operation by entering:

`nodetool compactionstats`

For more information about troubleshooting repairs, see the "Troubleshooting hanging repairs" article in the DataStax documentation.

The following output shows that a repair is in progress:

    root@7b16c9901c64:/usr/share/tomcat7/cassandra/bin# ./nodetool compactionstats
    pending tasks: 1
    - data.dds_a406fdac7548d3723b142d0be997f567: 1

    id                                   compaction type keyspace table                                completed  total       unit  progress
    f43492c0-5ad9-11e9-8ad8-ddf1074f01a8 Validation      data     dds_a406fdac7548d3723b142d0be997f567 4107993174 12159937340 bytes 33.78%
    Active compaction remaining time :   0h00m00s

When the `nodetool compactionstats` output shows that no validation compactions are running and the `nodetool netstats` command reports that nothing is streaming, the repair is complete.
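The completion check described above can be automated by inspecting the output of both commands. The following is a minimal sketch that assumes a running validation compaction appears with `Validation` as its compaction type (as in the sample output) and that `nodetool netstats` prints `Not sending any streams.` when nothing is streaming; verify both strings against your Cassandra version. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: decide from captured nodetool output whether a repair looks finished.
# Assumptions: a running validation compaction shows "Validation" as its
# compaction type in `nodetool compactionstats`, and `nodetool netstats`
# prints "Not sending any streams." when nothing is streaming.

# Succeeds if the compactionstats text on stdin lists a Validation compaction.
validation_running() {
  grep -q ' Validation '
}

# Succeeds if the netstats text on stdin reports no active streams.
streams_idle() {
  grep -q 'Not sending any streams'
}

# $1: output of `nodetool compactionstats`; $2: output of `nodetool netstats`.
repair_looks_complete() {
  if validation_running <<<"$1"; then
    return 1
  fi
  streams_idle <<<"$2"
}
```

For example: `repair_looks_complete "$(nodetool compactionstats)" "$(nodetool netstats)" && echo "Repair appears complete"`.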

- If a node rejoins the cluster after being unavailable for more than one hour, run the repair and cleanup activities:

  - In the nodetool utility, enter:

    `nodetool repair`

  - In the nodetool utility, enter:

    `nodetool cleanup`
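The post-outage steps above can be wrapped in a small script. This is a minimal sketch, assuming that you already know how long the node was unavailable (the steps do not say how to measure this), that both commands target the rejoined node, and that a hypothetical `RUNNER` command (default `ssh`) runs `nodetool` on that host. The repair must succeed before cleanup starts.

```bash
#!/usr/bin/env bash
# Sketch: after a node rejoins, run repair and then cleanup on that node if it
# was unavailable for more than one hour (3600 seconds).
# RUNNER is a hypothetical hook for executing a command on a host (default: ssh).
OUTAGE_THRESHOLD_SECONDS=3600

# Succeeds if the downtime (in seconds) exceeds the one-hour threshold.
needs_post_outage_repair() {
  local downtime_seconds="$1"
  [ "$downtime_seconds" -gt "$OUTAGE_THRESHOLD_SECONDS" ]
}

# Runs `nodetool repair`, then `nodetool cleanup` only if the repair succeeded.
repair_then_cleanup() {
  local runner="${RUNNER:-ssh}" host="$1"
  "$runner" "$host" nodetool repair || return 1
  "$runner" "$host" nodetool cleanup
}
```

For example, `needs_post_outage_repair 5400 && repair_then_cleanup cass-node-3` (hypothetical hostname) runs both steps for a node that was down for 90 minutes.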