We’re pleased to announce that Reaper 2.2 for Apache Cassandra was just released. This release includes a major redesign of how segments are orchestrated, which allows users to run concurrent repairs on nodes. Let’s dive into these changes and see what they mean for Reaper’s users.

Reaper works in a variety of standalone or distributed modes, which create some challenges in meeting the following requirements:

- A segment is processed successfully exactly once.

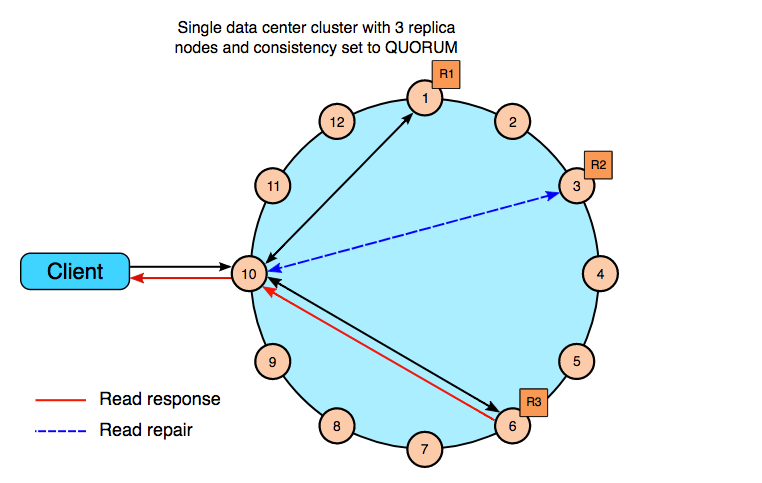

- No more than one segment is running on a node at once.

- Segments can only be started if the number of pending compactions on a node involved is lower than the defined threshold.

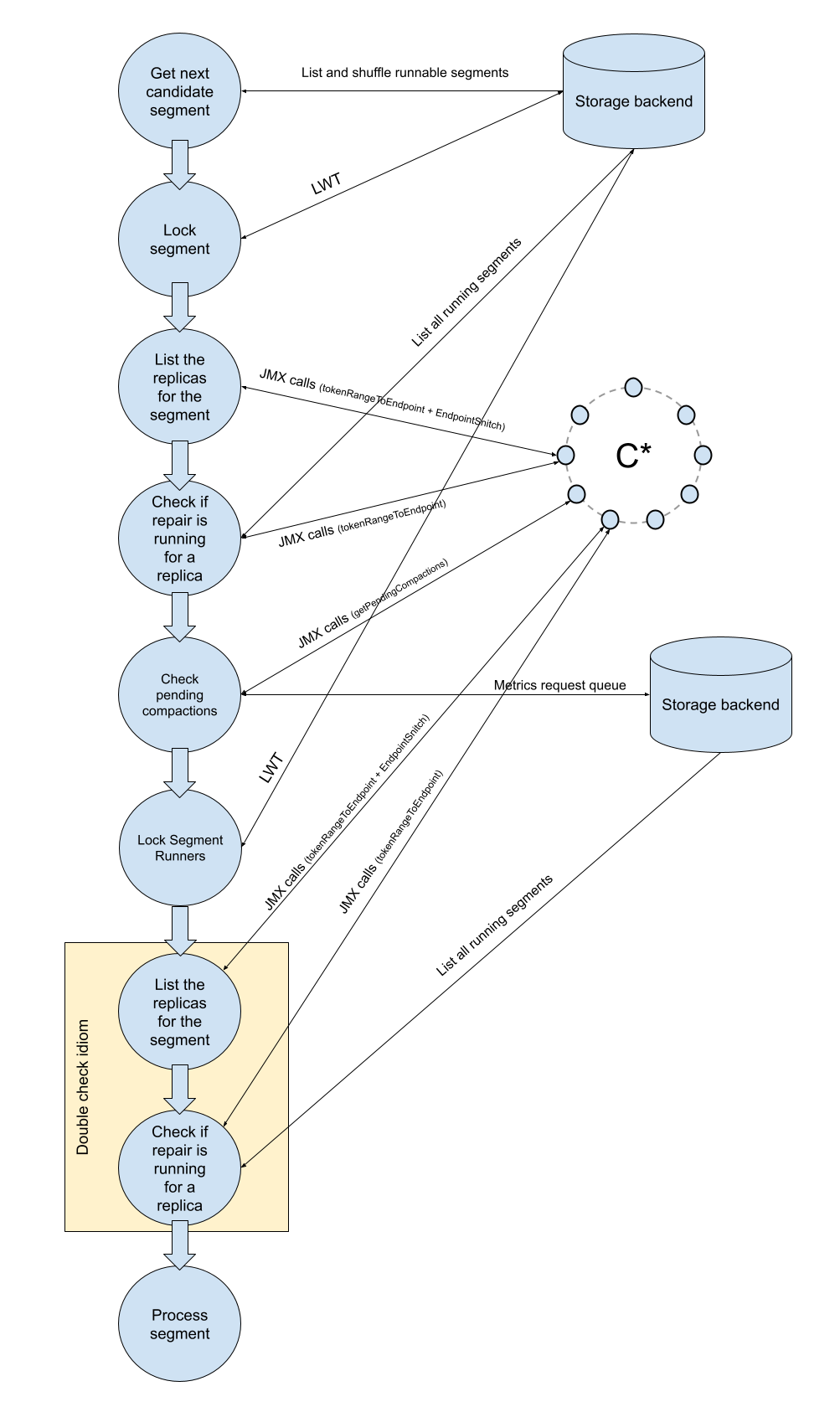

To make sure a segment won’t be handled by several Reaper instances at once, Reaper relies on lightweight transactions (LWTs) to implement a leader election process. A Reaper instance will “take the lead” on a segment by using an LWT and then perform the checks for the last two conditions above.

To avoid race conditions between two segments that share replicas and would start at the same time, a “master lock” was placed after the checks to guarantee that only a single segment would be able to start. This required a double check to be performed before actually starting the segment.

There were several issues with this design:

- It involved a lot of LWTs even if no segment could be started.

- It was a complex design which made the code hard to maintain.

- The “master lock” was creating a lot of contention as all Reaper instances would compete for the same partition, leading to some nasty situations. This was especially the case in sidecar mode as it involved running a lot of Reaper instances (one per Cassandra node).

As we were seeing suboptimal performance and high LWT contention in some setups, we redesigned how segments were orchestrated to reduce the number of LWTs and maximize concurrency during repairs (all nodes should be busy repairing if possible).

Instead of locking segments, we explored whether it would be possible to lock nodes instead. This approach would give us several benefits:

- We could check which nodes are already busy without issuing JMX requests to the nodes.

- We could easily filter segments to be processed to retain only those with available nodes.

- We could remove the master lock as we would have no more race conditions between segments.

One of the hard parts was locking several nodes consistently: the locks span several rows, and Cassandra has no concept of an atomic transaction that can be rolled back, as RDBMSs do. Luckily, we were able to leverage one feature of batch statements: a Cassandra batch whose statements all target a single partition is applied as a single atomic operation (at the node level). If any one of the replicas is already locked, then none will be locked by the batched LWTs. We used the following model for the leader election table on nodes:

```cql
CREATE TABLE reaper_db.running_repairs (
    repair_id uuid,
    node text,
    reaper_instance_host text,
    reaper_instance_id uuid,
    segment_id uuid,
    PRIMARY KEY (repair_id, node)
) WITH CLUSTERING ORDER BY (node ASC);
```

The following LWTs are then issued in a batch for each replica:

```cql
BEGIN BATCH

UPDATE reaper_db.running_repairs USING TTL 90
SET reaper_instance_host = 'reaper-host-1',
    reaper_instance_id = 62ce0425-ee46-4cdb-824f-4242ee7f86f4,
    segment_id = 70f52bc2-7519-11eb-809e-9f94505f3a3e
WHERE repair_id = 70f52bc0-7519-11eb-809e-9f94505f3a3e AND node = 'node1'
IF reaper_instance_id = null;

UPDATE reaper_db.running_repairs USING TTL 90
SET reaper_instance_host = 'reaper-host-1',
    reaper_instance_id = 62ce0425-ee46-4cdb-824f-4242ee7f86f4,
    segment_id = 70f52bc2-7519-11eb-809e-9f94505f3a3e
WHERE repair_id = 70f52bc0-7519-11eb-809e-9f94505f3a3e AND node = 'node2'
IF reaper_instance_id = null;

UPDATE reaper_db.running_repairs USING TTL 90
SET reaper_instance_host = 'reaper-host-1',
    reaper_instance_id = 62ce0425-ee46-4cdb-824f-4242ee7f86f4,
    segment_id = 70f52bc2-7519-11eb-809e-9f94505f3a3e
WHERE repair_id = 70f52bc0-7519-11eb-809e-9f94505f3a3e AND node = 'node3'
IF reaper_instance_id = null;

APPLY BATCH;
```

If all the conditional updates can be applied, we’ll get the following data in the table:

```
cqlsh> select * from reaper_db.running_repairs;

 repair_id                            | node  | reaper_instance_host | reaper_instance_id                   | segment_id
--------------------------------------+-------+----------------------+--------------------------------------+--------------------------------------
 70f52bc0-7519-11eb-809e-9f94505f3a3e | node1 | reaper-host-1        | 62ce0425-ee46-4cdb-824f-4242ee7f86f4 | 70f52bc2-7519-11eb-809e-9f94505f3a3e
 70f52bc0-7519-11eb-809e-9f94505f3a3e | node2 | reaper-host-1        | 62ce0425-ee46-4cdb-824f-4242ee7f86f4 | 70f52bc2-7519-11eb-809e-9f94505f3a3e
 70f52bc0-7519-11eb-809e-9f94505f3a3e | node3 | reaper-host-1        | 62ce0425-ee46-4cdb-824f-4242ee7f86f4 | 70f52bc2-7519-11eb-809e-9f94505f3a3e
```

If one of the conditional updates fails because one node is already locked for the same repair_id, then none will be applied.
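The all-or-nothing behavior of the batch can be illustrated with a small in-memory model. This is only a sketch and not Reaper's code: `try_lock_nodes` and `LockTable` are hypothetical names, a Python dict stands in for the `running_repairs` table, and the TTL-based expiry of locks is left out.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical in-memory stand-in for the running_repairs table.
# Key: (repair_id, node) -> value: (reaper_instance_id, segment_id)
LockTable = Dict[Tuple[str, str], Tuple[str, str]]


def try_lock_nodes(table: LockTable, repair_id: str, nodes: Iterable[str],
                   reaper_instance_id: str, segment_id: str) -> bool:
    """Lock every node for repair_id, or none of them.

    Mimics a batch of conditional updates on a single partition: the
    batch is applied only if every "IF reaper_instance_id = null" holds.
    """
    nodes = list(nodes)
    # Check phase: one existing lock makes the whole batch fail.
    if any((repair_id, node) in table for node in nodes):
        return False
    # Apply phase: all conditions held, so every row is written.
    for node in nodes:
        table[(repair_id, node)] = (reaper_instance_id, segment_id)
    return True


table: LockTable = {}
first = try_lock_nodes(table, "repair-1", ["node1", "node2", "node3"],
                       "reaper-a", "segment-1")
# node3 is already locked for repair-1, so node4 must stay unlocked too.
second = try_lock_nodes(table, "repair-1", ["node3", "node4"],
                        "reaper-b", "segment-2")
print(first, second, ("repair-1", "node4") in table)
```

The second call fails as a whole: `node4` is free, but because `node3` is already held for the same repair, no row is written.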

Note: the Postgres backend also benefits from these new features through the use of transactions, using commit and rollback to deal with success/failure cases.

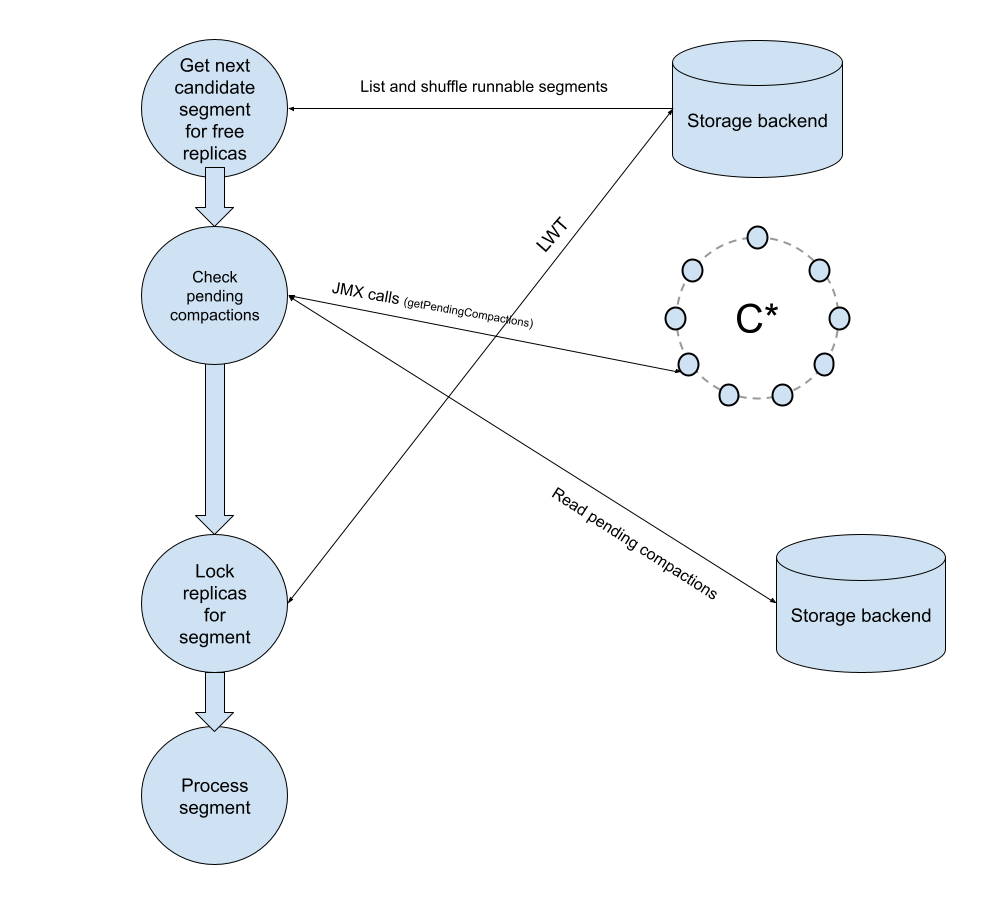

The new design is now much simpler than the initial one:

Segments are now filtered to keep only those with no locked replica, which avoids wasting effort trying to lock them, and the pending compactions check also happens before any locking.
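As a rough illustration of that filtering step, the sketch below keeps only the segments whose replicas are all unlocked and below the pending compactions threshold. The names (`Segment`, `runnable_segments`, `max_pending_compactions`) are hypothetical, the inputs are plain Python collections rather than database or JMX lookups, and nodes with no reported compaction count are assumed to have none pending.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set


@dataclass(frozen=True)
class Segment:
    segment_id: str
    replicas: FrozenSet[str]  # nodes stored with the segment at run creation


def runnable_segments(segments: List[Segment], locked_nodes: Set[str],
                      pending_compactions: Dict[str, int],
                      max_pending_compactions: int) -> List[Segment]:
    """Return the segments that are worth trying to lock."""
    candidates = []
    for segment in segments:
        # Skip segments with at least one replica already locked.
        if segment.replicas & locked_nodes:
            continue
        # Skip segments where a replica is at or above the threshold
        # (assumption: a node missing from the dict has 0 pending).
        if any(pending_compactions.get(node, 0) >= max_pending_compactions
               for node in segment.replicas):
            continue
        candidates.append(segment)
    return candidates


segments = [
    Segment("s1", frozenset({"node1", "node2", "node3"})),
    Segment("s2", frozenset({"node4", "node5", "node6"})),
    Segment("s3", frozenset({"node7", "node8", "node9"})),
]
result = runnable_segments(segments, locked_nodes={"node2"},
                           pending_compactions={"node5": 50},
                           max_pending_compactions=20)
runnable_ids = [s.segment_id for s in result]
print(runnable_ids)
```

Here `s1` is dropped because `node2` is locked and `s2` because `node5` has too many pending compactions, so only `s3` would go on to the locking step.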

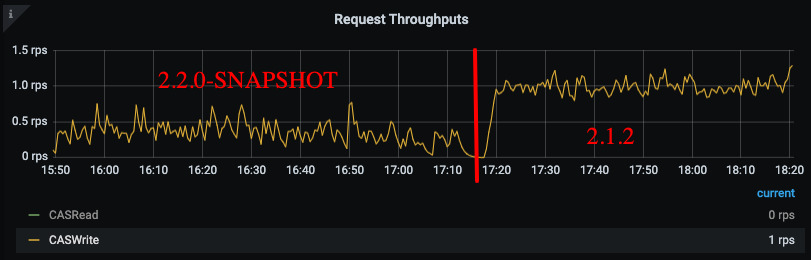

This reduces the number of LWTs by four in the simplest cases, and we expect more challenging repairs to benefit from even greater reductions.

At the same time, repair duration on a 9-node cluster showed 15%-20% improvements thanks to the more efficient segment selection.

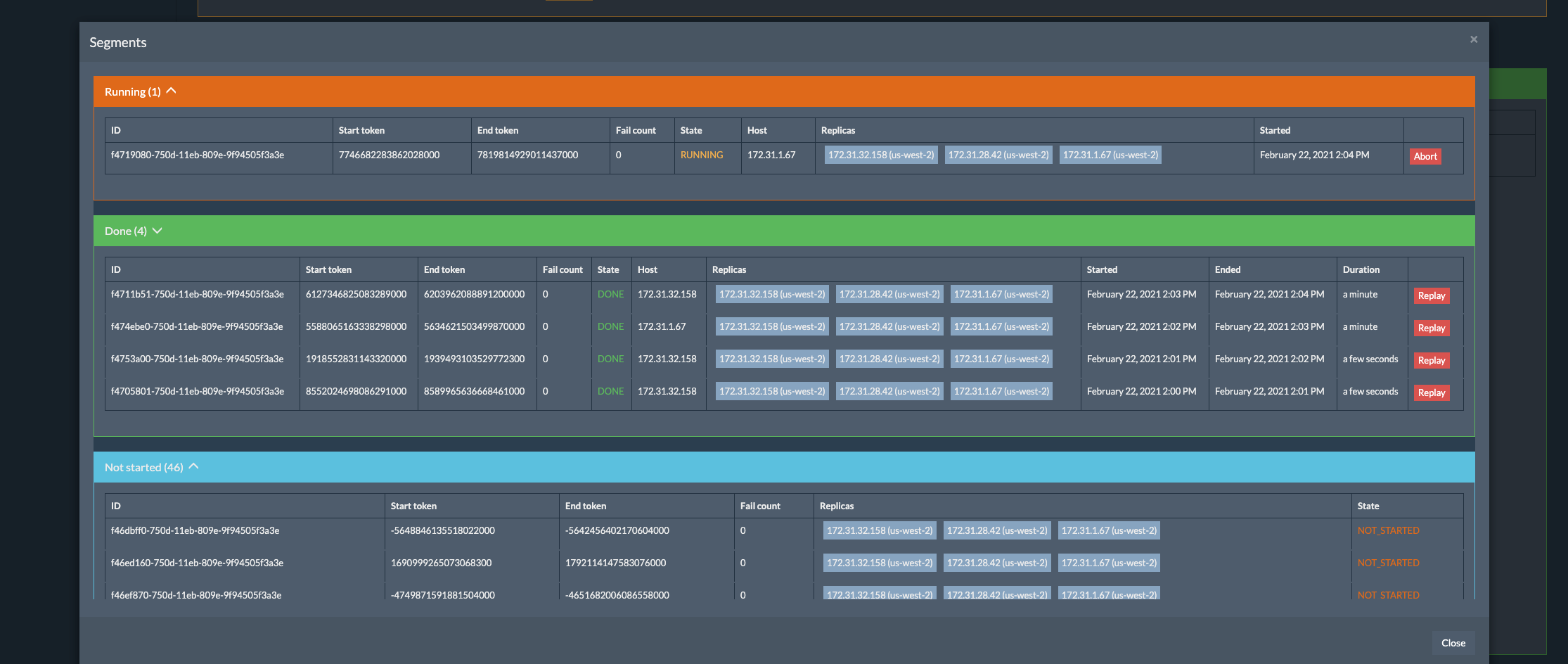

One prerequisite to make that design efficient was to store the replicas for each segment in the database when the repair run is created. You can now see which nodes are involved for each segment and which datacenter they belong to in the Segments view:

Using the repair id as the partition key for the node leader election table gives us another long-awaited feature: concurrent repairs.

A node can now be locked by different Reaper instances for different repair runs, allowing several repairs to run concurrently on each node. In order to control the level of concurrency, a new setting was introduced in Reaper: maxParallelRepairs.

By default it is set to 2 and should be tuned carefully, as heavy concurrent repairs could have a negative impact on cluster performance.
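To see why keying locks by repair id permits concurrency, consider the toy model below. Locks are stored per (repair id, node) pair, so the same node can be held by two different repair runs at once, while a second lock for the same run is refused. How Reaper applies maxParallelRepairs internally is not described in this post, so `can_start_repair` is purely an assumed illustration of a cap on concurrent runs, and all names are hypothetical.

```python
from typing import Dict, Set, Tuple

MAX_PARALLEL_REPAIRS = 2  # mirrors the default value of maxParallelRepairs

# Hypothetical lock store: (repair_id, node) -> segment_id
locks: Dict[Tuple[str, str], str] = {}


def lock_node(repair_id: str, node: str, segment_id: str) -> bool:
    """Lock a node for one repair run; other runs are not affected."""
    key = (repair_id, node)
    if key in locks:
        return False
    locks[key] = segment_id
    return True


def can_start_repair(running_repair_ids: Set[str],
                     max_parallel_repairs: int = MAX_PARALLEL_REPAIRS) -> bool:
    """Assumed check: allow a new run only while below the cap."""
    return len(running_repair_ids) < max_parallel_repairs


# Two different repair runs can both hold node1 ...
lock_a = lock_node("repair-a", "node1", "segment-a1")
lock_b = lock_node("repair-b", "node1", "segment-b1")
# ... but a second segment of repair-a cannot take node1 at the same time.
lock_a_again = lock_node("repair-a", "node1", "segment-a2")

running = {repair_id for repair_id, _ in locks}
third_allowed = can_start_repair(running)
print(lock_a, lock_b, lock_a_again, third_allowed)
```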

If you have small keyspaces that need to be repaired on a regular basis, they won’t be blocked by large keyspaces anymore.

As some of you are probably aware, JFrog has decided to sunset Bintray and JCenter. Bintray is our main distribution medium and we will be working on replacement repositories. The 2.2.0 release is unaffected by this change but future upgrades could require an update to yum/apt repos. The documentation will be updated accordingly in due time.

We encourage all Reaper users to upgrade to 2.2.0. It was tested successfully by some of our customers who had issues with LWT pressure and blocking repairs. This version is expected to make repairs faster and more lightweight on the Cassandra backend. We were able to remove a lot of legacy code and design that suited single-token clusters but failed to spread segments efficiently for clusters using vnodes.

The binaries for Reaper 2.2.0 are available from yum, apt-get, Maven Central, and Docker Hub, and are also downloadable as tarball packages. Remember to back up your database before starting the upgrade.

All instructions to download, install, configure, and use Reaper 2.2.0 are available on the Reaper website.