Data Processing with SMACK: Spark, Mesos, Akka, Cassandra, and Kafka

This article introduces the SMACK (Spark, Mesos, Akka, Cassandra, and Kafka) stack and illustrates how you can use it to build scalable data processing platforms. Although the SMACK stack is concise and consists of only a few components, it can accommodate different system designs: not only pure batch or stream processing, but also more complex Lambda and Kappa architectures.

What is the SMACK stack?

First, let’s talk a little bit about what SMACK is. Here’s a quick rundown of the technologies that are included in it:

• Spark — a fast and general engine for distributed large-scale data processing.

• Mesos — a cluster resource management system that provides efficient resource isolation and sharing across distributed applications.

• Akka — a toolkit and runtime for building highly concurrent, distributed, and resilient message-driven applications on the JVM.

• Cassandra — a distributed highly available database designed to handle large amounts of data across multiple datacenters.

• Kafka — a high-throughput, low-latency distributed messaging system/commit log designed for handling real-time data feeds.

Storage layer: Cassandra

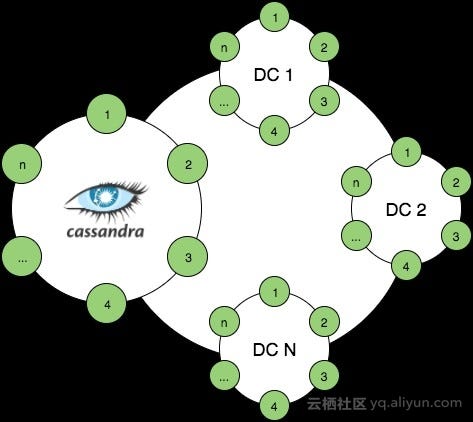

Although this is not the order of the acronym, let’s start with the C in SMACK. Cassandra is well known for its high availability and high throughput: it can handle enormous write loads and survive cluster node failures. In terms of the CAP theorem, Cassandra offers a tunable trade-off between consistency and availability for each operation.

What is most interesting here is that, when it comes to data processing, Cassandra scales linearly (increased load can be handled simply by adding more nodes to the cluster) and provides cross-datacenter replication (XDCR). XDCR does more than replicate data; it enables a set of use cases, including:

• Geo-distributed datacenters handling data specific for the region or located closer to customers.

• Data migration across datacenters: recovery after failures or moving data to a new datacenter.

• Separate operational and analytics workloads.

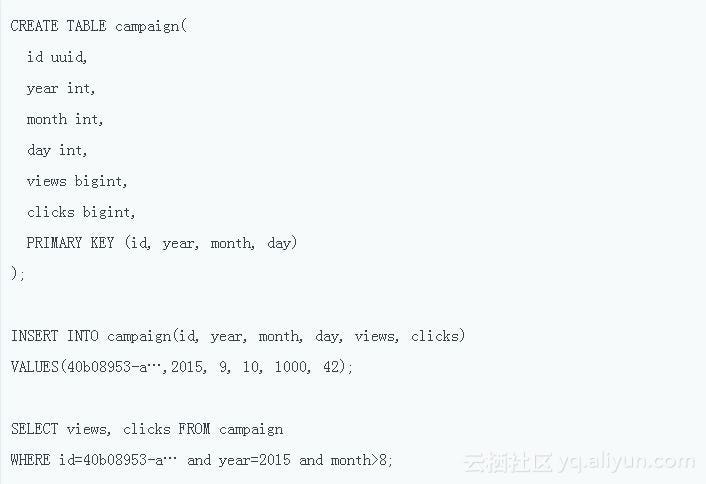

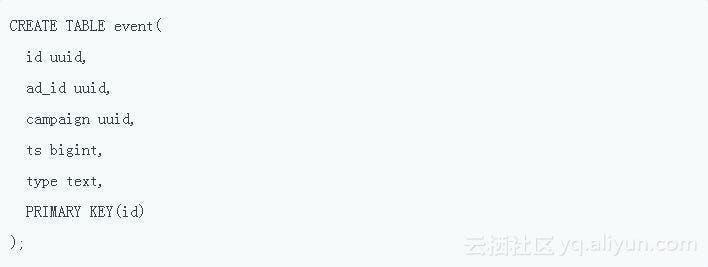

However, all these features come at a price, and with Cassandra that price is its data model. A table can be thought of as a nested sorted map: data is distributed across cluster nodes by partition key, and the entries within a partition are sorted and grouped by clustering columns. An example is provided below:

To get data in some range, the key columns must be specified in full, and a range clause is allowed only on the last column in the list. This constraint limits the number of scans over different ranges, which could cause random disk access and degrade performance. As a result, the data model has to be designed carefully around the read queries to limit the number of reads and scans, which leaves less flexibility when new queries need to be supported.
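As a rough illustration of the nested sorted map and the range restriction, here is a minimal sketch in plain Python. It is a toy model, not Cassandra code: the `ToyTable` class, the campaign id, and the `(year, month, day)` clustering columns are assumptions made up for this example.

```python
class ToyTable:
    """Toy model of a table: partition key -> rows ordered by clustering columns."""

    def __init__(self):
        self.partitions = {}  # partition key -> {clustering tuple: row value}

    def insert(self, partition_key, clustering, value):
        # Writing the same full key twice overwrites the row (an upsert).
        self.partitions.setdefault(partition_key, {})[clustering] = value

    def select(self, partition_key, prefix=(), lo=None, hi=None):
        """Equality on the leading clustering columns in `prefix`, plus an
        optional inclusive range [lo, hi] on the column right after the prefix."""
        rows = self.partitions.get(partition_key, {})
        n = len(prefix)
        result = []
        for key in sorted(rows):  # iterate rows in clustering order
            if key[:n] != tuple(prefix):
                continue
            if lo is not None and key[n] < lo:
                continue
            if hi is not None and key[n] > hi:
                continue
            result.append((key, rows[key]))
        return result


table = ToyTable()
# Hypothetical layout: partition key = campaign id, clustering = (year, month, day).
table.insert("campaign-1", (2015, 8, 30), {"views": 700})
table.insert("campaign-1", (2015, 9, 10), {"views": 1000})
table.insert("campaign-1", (2015, 10, 1), {"views": 300})
table.insert("campaign-1", (2016, 1, 1), {"views": 50})

# Partition key given in full, equality on year, range on month (the last column used).
autumn_2015 = table.select("campaign-1", prefix=(2015,), lo=9)
print(autumn_2015)
```

Because the rows of a partition are ordered by the clustering tuple, the rows matched by "equality on a prefix, range on the next column" sit next to each other, which is why this query shape can be served by a single sequential read.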

But what if some tables need to be joined with other tables? Consider the following case: calculate the total views per campaign for a given month, for all campaigns.

With the given model, the only way to achieve this is to read all campaigns, read all events, sum the properties (for matching campaign IDs), and assign the sums to campaigns. Such applications can be really challenging to implement because the amount of data stored in Cassandra may be huge and exceed memory capacity. This kind of data should therefore be processed in a distributed manner, and Spark fits this type of use case well.
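The read, sum, and assign procedure can be sketched on a single machine in plain Python. The field names and sample rows below are hypothetical. A distributed engine would run the same filter, reduce-by-key, and join steps over partitions of the data instead of over in-memory lists.

```python
from collections import defaultdict

# Hypothetical rows, shaped like two tables read in full.
campaigns = [
    {"id": "c1", "name": "Spring sale"},
    {"id": "c2", "name": "Newsletter"},
]
events = [
    {"campaign_id": "c1", "year": 2015, "month": 9, "views": 100},
    {"campaign_id": "c1", "year": 2015, "month": 9, "views": 250},
    {"campaign_id": "c2", "year": 2015, "month": 9, "views": 40},
    {"campaign_id": "c1", "year": 2015, "month": 10, "views": 999},
]


def total_views_per_campaign(campaigns, events, year, month):
    # Filter + map: keep the events of the month as (campaign_id, views) pairs.
    pairs = (
        (e["campaign_id"], e["views"])
        for e in events
        if e["year"] == year and e["month"] == month
    )
    # Reduce by key: sum the views per campaign id.
    totals = defaultdict(int)
    for campaign_id, views in pairs:
        totals[campaign_id] += views
    # Join: attach each total to its campaign (zero when there were no events).
    return {c["name"]: totals.get(c["id"], 0) for c in campaigns}


report = total_views_per_campaign(campaigns, events, 2015, 9)
print(report)
```

Here every intermediate result fits in memory. The point of moving this to a cluster is that neither the event list nor the per-key totals have to fit on one machine.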

Processing layer: Spark

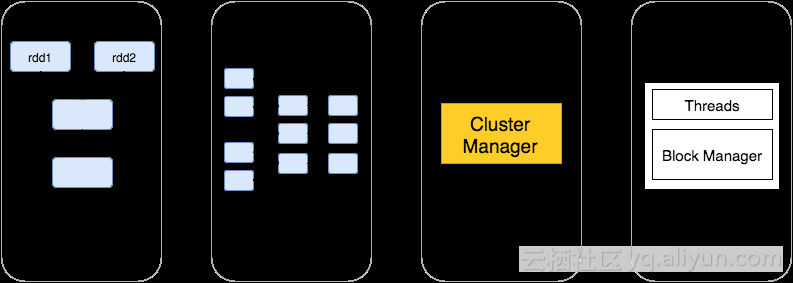

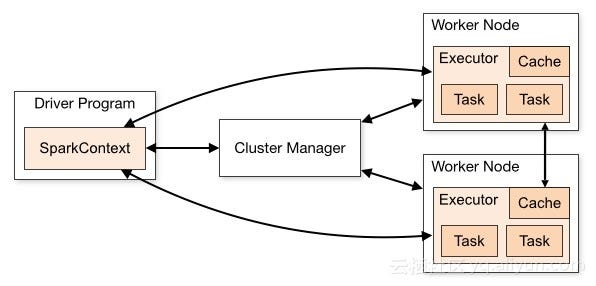

The main abstraction Spark operates on is the RDD (Resilient Distributed Dataset, a distributed collection of elements), and the workflow consists of four main phases:

• RDD operations (transformations and actions) form a DAG (Directed Acyclic Graph).

• The DAG is split into stages of tasks, which are then submitted to the cluster manager.

• Stages combine tasks which don’t require shuffling/repartitioning.

• Tasks run on workers, and the results are then returned to the client.
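To make the idea of stage boundaries concrete, here is a deliberately simplified sketch in plain Python. It assumes a linear chain of operations rather than a general graph, and the `WIDE_OPS` set is an illustrative assumption about which operations need a shuffle. It does not reproduce Spark’s actual scheduler.

```python
# Operations that need a shuffle in this simplified model (illustrative set).
WIDE_OPS = {"reduceByKey", "groupByKey", "join", "repartition"}


def split_into_stages(ops):
    """Split a linear chain of operation names into stages.

    A new stage starts at every operation that requires a shuffle;
    operations that do not need one stay in the current stage.
    """
    stages = []
    for op in ops:
        if not stages or op in WIDE_OPS:
            stages.append([])
        stages[-1].append(op)
    return stages


pipeline = ["textFile", "map", "filter", "reduceByKey", "mapValues", "join", "collect"]
stages = split_into_stages(pipeline)
for number, stage in enumerate(stages):
    print(number, stage)
```

Operations inside one stage can be pipelined over a partition without moving data between workers, which is what the third phase in the list describes.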

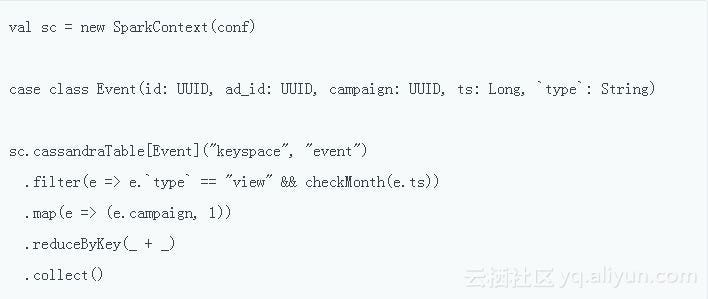

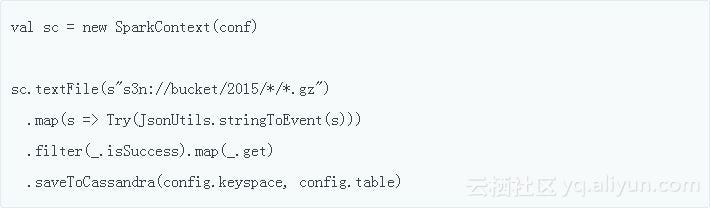

Here’s how one can solve the above problem with Spark and Cassandra:

Interaction with Cassandra is performed via the Spark-Cassandra connector, which makes the whole process straightforward. Another interesting option for working with NoSQL stores is SparkSQL, which translates SQL statements into a series of RDD operations.

With several lines of code it’s possible to implement a naive Lambda design. A real one could of course be much more sophisticated, but this example shows how easily it can be achieved.

Almost MapReduce: bringing processing closer to data

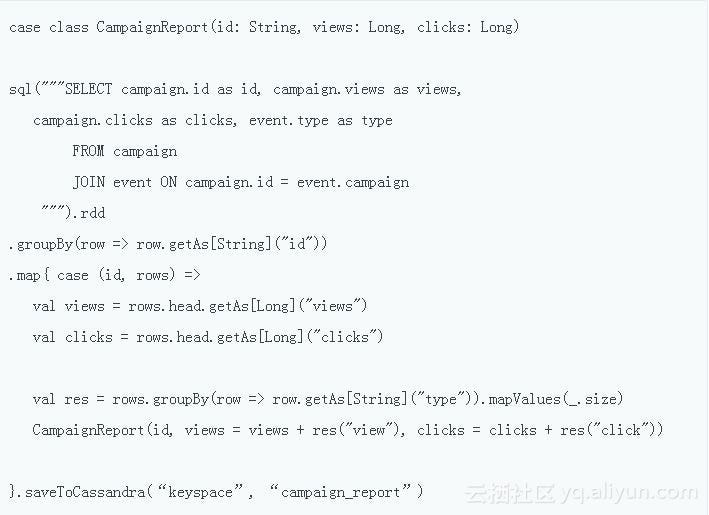

The Spark-Cassandra connector is data-locality aware and reads data from the closest node in the cluster, thus minimizing the amount of data transferred over the network. To take full advantage of this data-locality awareness, Spark workers should be collocated with Cassandra nodes.

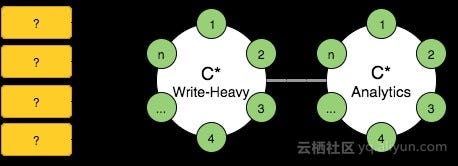

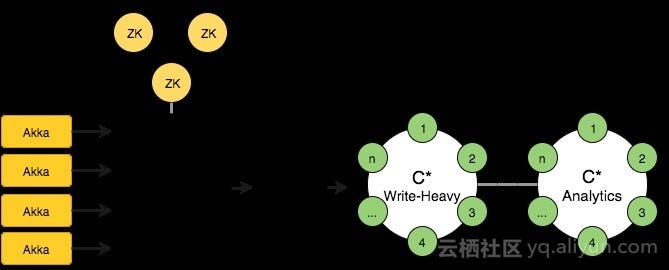

The above image illustrates Spark collocation with Cassandra. It makes sense to separate your operational (or write-heavy) cluster from the one used for analytics. Here’s why:

• Clusters can be scaled independently.

• Data is replicated by Cassandra, with no extra work needed.

• The analytics cluster has different Read/Write load patterns.

• The analytics cluster could contain additional data (for example, dictionaries) and processing results.

• The impact of Spark on resources is limited to only one cluster.

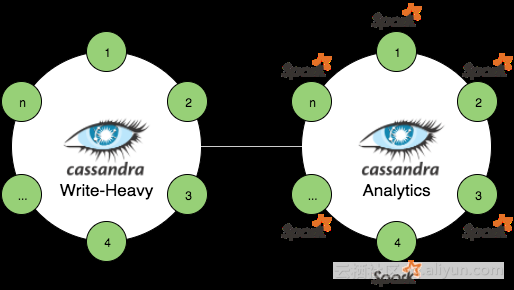

Let’s look at the Spark application deployment options one more time:

As can be seen above, there are three main options available for the cluster resource manager:

• Spark Standalone — the Spark master and workers are installed and run as standalone applications (which obviously introduces some overhead and supports only static resource allocation per worker).

• YARN — works very well if you already have Hadoop.

• Mesos — From the beginning, Mesos was designed for dynamic allocation of cluster resources, not only for running Hadoop applications but for handling heterogeneous workloads.

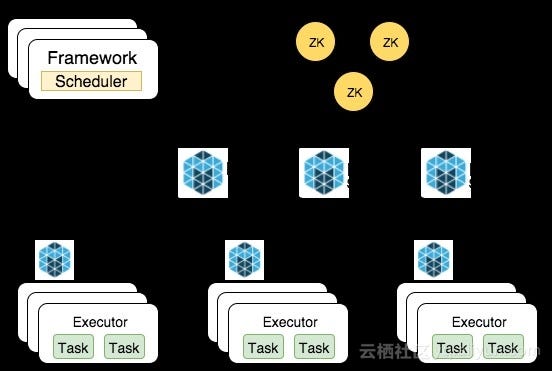

Mesos architecture

The M in SMACK stands for Mesos. A Mesos cluster consists of master nodes, which are responsible for resource offers and scheduling, and slave nodes, which do the actual heavy lifting of task execution.

In HA mode with multiple master nodes, ZooKeeper is used for leader election and service discovery. Applications executed on Mesos are called frameworks; they use the Mesos API to handle resource offers and submit tasks. In general, task execution consists of the following steps:

1. Slave nodes provide available resources to the master node.

2. The master node sends resource offers to frameworks.

3. The scheduler replies with tasks and resources needed per task.

4. The master node sends tasks to slave nodes.
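The four steps can be walked through with a toy model in plain Python. The `Master` and `Scheduler` classes, their method names, and the CPU-only resource model are assumptions made for illustration; this is not the Mesos API.

```python
class Master:
    """Toy master: tracks free CPUs per slave and the tasks it has launched."""

    def __init__(self):
        self.available = {}  # slave id -> free CPUs
        self.launched = []   # (slave id, task name)

    def register_resources(self, slave_id, cpus):
        # Step 1: a slave node reports its available resources.
        self.available[slave_id] = cpus

    def offer_to(self, scheduler):
        # Step 2: the master sends resource offers to the framework.
        offers = dict(self.available)
        # Step 3: the scheduler replies with tasks and the resources each needs.
        for slave_id, task, cpus in scheduler.resource_offers(offers):
            # Step 4: the master sends the task to the slave node.
            self.available[slave_id] -= cpus
            self.launched.append((slave_id, task))


class Scheduler:
    """Toy framework scheduler with a FIFO queue of (task name, CPUs needed)."""

    def __init__(self, pending):
        self.pending = list(pending)

    def resource_offers(self, offers):
        accepted = []
        for slave_id, free in sorted(offers.items()):
            # Take tasks from the head of the queue while they fit the offer.
            while self.pending and self.pending[0][1] <= free:
                task, cpus = self.pending.pop(0)
                free -= cpus
                accepted.append((slave_id, task, cpus))
        return accepted


master = Master()
master.register_resources("slave-1", 4)
master.register_resources("slave-2", 2)
scheduler = Scheduler([("job-a", 3), ("job-b", 2), ("job-c", 2)])
master.offer_to(scheduler)
print(master.launched, master.available, scheduler.pending)
```

Note that the decision about what to run stays with the framework’s scheduler: the master only offers resources and forwards the accepted tasks.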

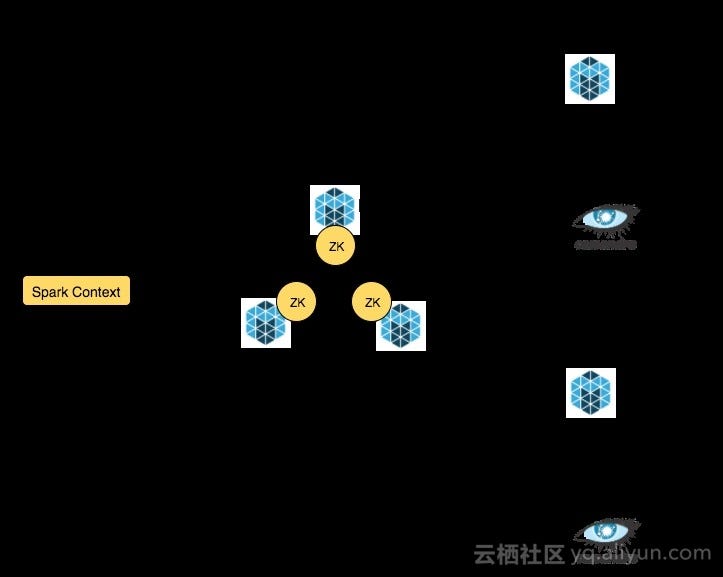

Bringing Spark, Mesos and Cassandra together

As mentioned before, Spark workers should be collocated with Cassandra nodes to benefit from data locality, which lowers both network traffic and the load on the Cassandra cluster. Here’s one possible deployment scenario for achieving this with Mesos:

• Mesos master nodes and ZooKeeper nodes are collocated.

• Mesos slave nodes and Cassandra nodes are collocated to enforce better data locality for Spark.

• Spark binaries are deployed to all worker nodes and spark-env.sh is configured with proper master endpoints and executor JAR location.

• The Spark executor JAR is uploaded to S3/HDFS.

With this setup, a Spark job can be submitted to the cluster with a simple spark-submit invocation from any worker node that has the Spark binaries installed, once the assembly JAR containing the actual job logic has been uploaded.

There are also options for running Dockerized Spark, so that there’s no need to distribute binaries to every single cluster node.

Scheduled and Long-running Task Execution

Sooner or later, every data processing system has to run two types of jobs: scheduled or periodic jobs, such as periodic batch aggregations, and long-running jobs, which is the case for stream processing. The main requirement for both types is fault tolerance: jobs must keep running even when cluster nodes fail. The Mesos ecosystem comes with two outstanding frameworks, one for each of these job types.

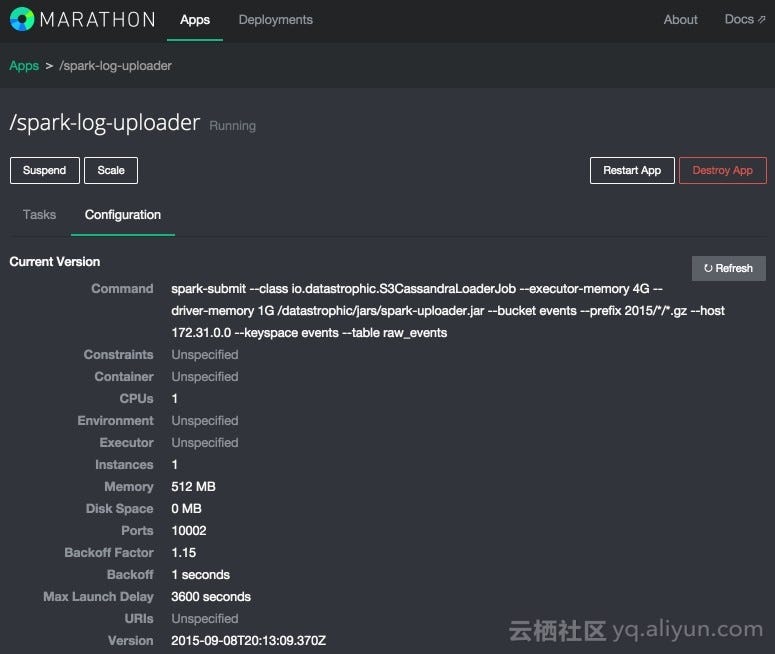

Marathon is a framework for fault-tolerant execution of long-running tasks. It supports HA mode with ZooKeeper, can run Docker containers, and has a nice REST API. Here’s an example of using a shell command to run spark-submit in a simple job configuration:

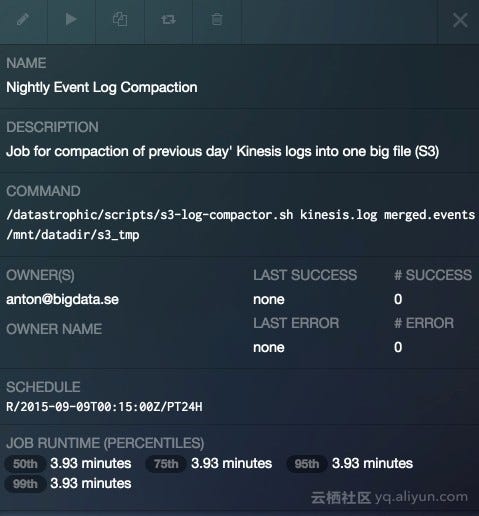

Chronos has the same characteristics as Marathon but is designed for running scheduled jobs; in essence, it is a distributed, highly available cron that supports graphs of jobs. Here’s an example of an S3 compaction job configuration, implemented as a simple bash script:

There are plenty of frameworks already available or under active development (such as Hadoop, Cassandra, Kafka, Myriad, Storm, and Samza) that aim to integrate widely used systems with Mesos resource management capabilities.

Ingesting the Data

By now we have the storage layer designed, resource management set up, and jobs configured. The only thing still missing is the data to process.

Assuming that incoming data will arrive at high rates, the endpoints which will receive it should meet the following requirements:

• Provide high throughput/low latency

• Be resilient

• Allow easy scalability

• Support back pressure

On second thought, back pressure is not a must, but it is nice to have as an option for handling load spikes. Akka fits these requirements well; it was essentially designed to provide this feature set. Here is a quick rundown of the benefits you can expect from Akka:

• Actor model implementation for the JVM

• Message-based and asynchronous

• Enforcement of non-shared mutable state

• Easily scalable from one process to a cluster of machines

• Actors form hierarchies with parental supervision

• More than a concurrency framework: akka-http, akka-streams, and akka-persistence
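The first three points (actors, asynchronous messages, no shared mutable state) can be illustrated with a small stand-in written in plain Python. This is not Akka and does not model supervision or clustering; the `Actor`, `Parser`, and `Store` classes are hypothetical names chosen for this sketch.

```python
import json
import queue
import threading

_STOP = object()  # sentinel that tells an actor to shut down


class Actor:
    """Minimal actor: a mailbox plus one thread that handles one message at a time."""

    def __init__(self):
        self._mailbox = queue.Queue()
        self._thread = threading.Thread(target=self._loop)
        self._thread.start()

    def tell(self, message):
        # Asynchronous send: the caller does not wait for processing.
        self._mailbox.put(message)

    def stop(self):
        # Messages already in the mailbox are handled before the actor stops.
        self._mailbox.put(_STOP)
        self._thread.join()

    def _loop(self):
        while True:
            message = self._mailbox.get()
            if message is _STOP:
                return
            self.receive(message)


class Store(Actor):
    """Stands in for an actor that would write events to a database."""

    def __init__(self):
        self.rows = []  # private state, modified only by this actor's thread
        super().__init__()

    def receive(self, event):
        self.rows.append(event)


class Parser(Actor):
    """Parses raw JSON and forwards the result to the next actor."""

    def __init__(self, store):
        self.store = store
        super().__init__()

    def receive(self, raw):
        self.store.tell(json.loads(raw))


store = Store()
parser = Parser(store)
parser.tell('{"campaign": "c1", "views": 3}')
parser.tell('{"campaign": "c2", "views": 5}')
parser.stop()  # drain the parser first, then the store
store.stop()
print(store.rows)
```

Each actor owns its state and communicates only through messages, so no locks are needed even though two threads are running.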

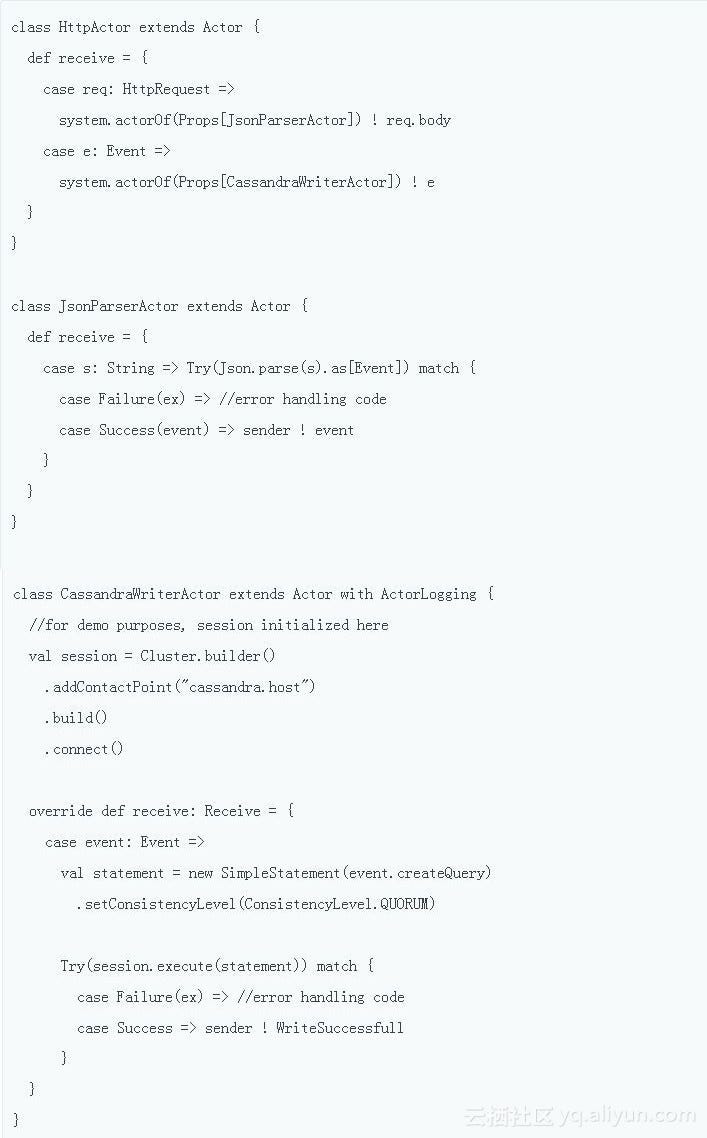

Here’s a simplified example of three actors that handle a JSON HttpRequest, parse it into a domain model case class, and save it to Cassandra:

It looks as if only a few lines of code are needed to make everything work, but writing raw data (events) to Cassandra with Akka may cause the following problems:

• Cassandra is still designed for fast serving, not for batch processing, so incoming data needs to be pre-aggregated

• The computation time of aggregations/rollups will grow with the amount of data

• Actors are not suitable for performing aggregation due to their stateless design model

• Micro-batches could partially solve the problem

• Some sort of reliable buffer for raw data is still needed

Kafka acts as a buffer for incoming data

To keep incoming data with some retention and pre-aggregate or process it later, some sort of distributed commit log can be used. In this case, consumers read the data in batches, process it, and store it in Cassandra in the form of pre-aggregates.
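The buffer-then-batch pattern can be sketched with an in-memory stand-in for the commit log. The `CommitLog` class and the event fields are assumptions made for this example; a real log such as Kafka adds partitioning, replication, and durable retention on top of the same append-and-read-by-offset idea.

```python
from collections import Counter


class CommitLog:
    """Toy append-only log: records keep their offsets, consumers track a position."""

    def __init__(self):
        self.records = []

    def append(self, record):
        self.records.append(record)
        return len(self.records) - 1  # offset of the new record

    def read(self, offset, max_records):
        return self.records[offset:offset + max_records]


def consume_in_batches(log, batch_size):
    """Read the log batch by batch and return one pre-aggregate per batch."""
    offset, aggregates = 0, []
    while True:
        batch = log.read(offset, batch_size)
        if not batch:
            break
        views = Counter()
        for event in batch:
            views[event["campaign"]] += event["views"]
        aggregates.append(dict(views))  # this is what would be written to storage
        offset += len(batch)            # advance only after the batch is processed
    return aggregates


log = CommitLog()
for campaign, views in [("c1", 1), ("c2", 4), ("c1", 2), ("c1", 5), ("c2", 1)]:
    log.append({"campaign": campaign, "views": views})

pre_aggregates = consume_in_batches(log, batch_size=3)
print(pre_aggregates)
```

Because the raw records stay in the log, a consumer that fails mid-batch can start again from its last recorded offset instead of losing data.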

Here’s an example of publishing JSON data through HTTP to Kafka with akka-http:

Consuming the data: Spark Streaming

While Akka could still be used to consume stream data from Kafka, having Spark in your ecosystem brings Spark Streaming as an option, with the following benefits:

• It supports a variety of data sources

• It provides at-least-once semantics

• Exactly-once semantics are available with Kafka Direct and idempotent storage

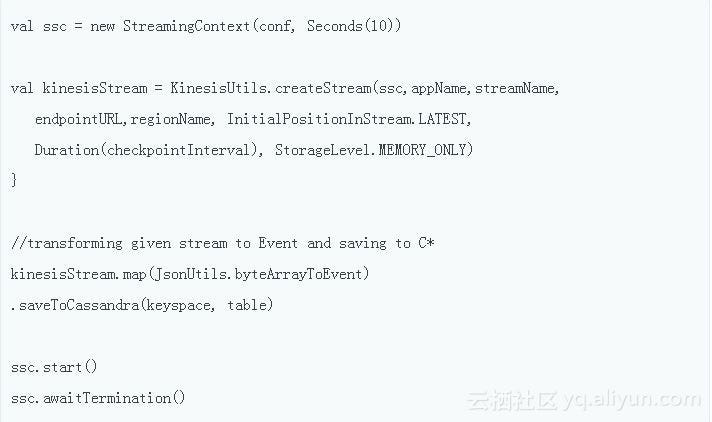

Here’s an example of consuming an event stream from Kinesis with Spark Streaming:

Designing for failure: backups and patching

Usually this is the most boring part of any system, but it’s really important to protect data from loss in every possible way, both for the case when a datacenter becomes unavailable and for analyzing breakdowns after the fact.

So why not store the data in Kafka/Kinesis?

At the time of writing, Kinesis is the only solution that can retain data without backups when all processing results have been lost. While Kafka supports long retention periods, the cost of hardware ownership should be considered: for example, S3 storage is much cheaper than multiple instances running Kafka, and the S3 SLAs are really good.

Apart from having backups, restore and patching strategies should be designed upfront and tested so that any problems with the data can be fixed quickly. A programmer’s mistake in an aggregation job, or in the deletion of duplicated data, may break the accuracy of the computation results, so it is very important to be able to fix such errors. One thing that makes all these operations easier is enforcing idempotency in the data model, so that repeating the same operation multiple times produces the same result (for example, a SQL update that sets a value is idempotent, while a counter increment is not).
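The difference between the two kinds of writes shows up as soon as a batch is replayed. The sketch below uses plain dictionaries as a stand-in for storage. The function names and the `(campaign, day)` key are hypothetical.

```python
def apply_increment(store, batch):
    # Not idempotent: every replay adds the same views again.
    for key, views in batch:
        store[key] = store.get(key, 0) + views


def apply_upsert(store, batch):
    # Idempotent: the batch total is computed first and then written under
    # its key, so a replay overwrites the row with the same value.
    totals = {}
    for key, views in batch:
        totals[key] = totals.get(key, 0) + views
    store.update(totals)


batch = [(("c1", "2015-09-10"), 100), (("c1", "2015-09-10"), 50)]
counters, upserts = {}, {}
for _ in range(2):  # the same batch is processed twice, e.g. after a job restart
    apply_increment(counters, batch)
    apply_upsert(upserts, batch)

print(counters)
print(upserts)
```

With the idempotent variant, re-running a job over a restored backup or a replayed batch repairs the data instead of corrupting it further.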

Here is an example of a Spark job that reads an S3 backup and loads it into Cassandra:

SMACK: The Big Picture

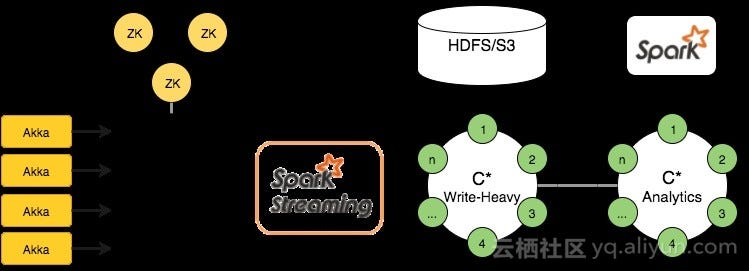

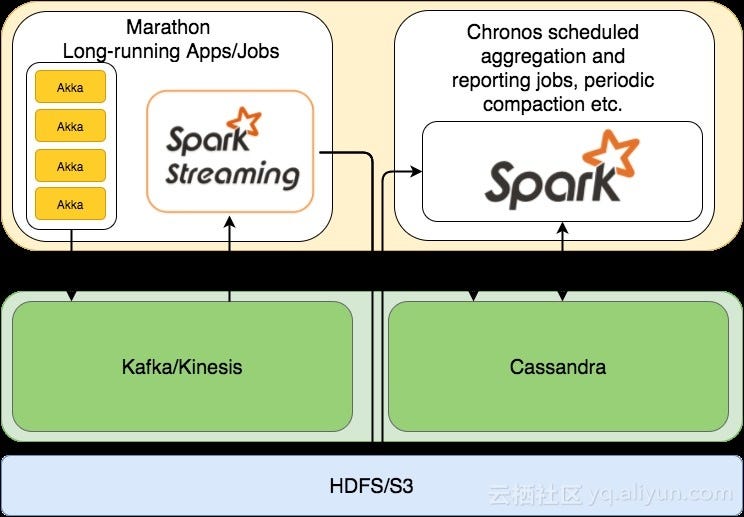

This concludes our broad description of SMACK. To allow you to better visualize the design of a data platform built with SMACK, here’s a visual depiction of the architecture:

In the above article we talked about some of the basic functions of SMACK. To finish, here is a quick rundown of its main advantages:

• Concise toolbox for a wide variety of data processing scenarios

• Battle-tested and widely used software with large support communities

• Easy scalability and replication of data while preserving low latencies

• Unified cluster management for heterogeneous loads

• Single platform for any kind of application

• Implementation platform for different architecture designs (batch, streaming, Lambda, or Kappa)

• Really fast time-to-market (for example, for MVP verification)

Thanks for reading. If you have experience developing applications with SMACK, please leave a comment.
