At Monzo, Cassandra is the database at the core of almost all persistent data storage. We've made a big bet on Cassandra because it can scale linearly, which has let us nearly quadruple our customer base every year without an issue. At peak time, we can now handle 300,000 reads per second.

Historically, we've taken a 'trusted network' approach to securing Cassandra: we make it very hard to get access to our network, and have Cassandra trust any client from within the network. This worked great, and we still have a lot of confidence in the security of that model. But in the security team we've been trying to move away from this assumption, towards a 'zero trust' cluster where an attacker inside our cluster would have no power.

Not only did we want to introduce authentication, requiring Cassandra clients to prove that they have permission to deal with customer data, but we also wanted to be able to authorise these clients. We want to ensure that the application that deals with storing receipt data isn't able to make changes to your balance, for example. This has incredible security benefits, as the blast radius of a compromised service becomes much smaller. Every service has a different Cassandra 'keyspace', which is a set of tables, and the keyspace is generally named after the service, for example service.ledger has the ledger keyspace. We have data isolation between different microservices by default, so most services never read data written by another service. There are a few exceptions to this, mostly for maintenance. So it's fairly easy to enforce very strict isolation on most services.

Cassandra offered some basic functionality

Cassandra ships with basic authentication and authorisation mechanisms that are similar to SQL databases: you can create users with a static password. Cassandra hashes that password with bcrypt and stores it in a table. You can grant permissions to a user with a CQL (Cassandra's limited flavour of SQL) statement. In simplified terms, you can allow operations like read or write, on objects like tables, keyspaces, or everything.

A very simple system based on top of this functionality would be to create a new user with the right permissions for every newly created service (and delete users when services are deleted). Then, you could store the passwords in Vault using our existing secret management processes. If we didn't care about rotating the passwords, this would be very simple to implement. But credentials naturally leak over time. They might end up written to disk by accident, or caught in a dump of TCP traffic. We wanted to design a system that allows for continuous credential rotation.

It's also not ideal that all the instances (pods, in Kubernetes terminology) of a service would share a user, as this makes our audit logs less specific. It would be great to issue new credentials when a new pod starts.

So we needed an application that could issue Cassandra credentials on demand to a pod (which needs to prove its identity), and expire them after a period. For this we looked towards Vault. Our applications already have a meaningful identity to Vault, which you can read about in our previous blog post on secret management. Vault natively handles expiry in an easy-to-understand way, we already have great audit logs, and it already has a plugin for Cassandra credential management! This meant that there was something that we could use pretty much out of the box.

We thought about how to configure Vault

Vault's database plugins are probably (and rightfully) designed for use cases with only a few different types of database client. We have over 500 services that use Cassandra, and each of them needs a different keyspace (and some need different Cassandra clusters too). In Vault's terminology, we'd need to define a separate 'role' for each service, specifying which cluster and keyspace it needs.

At first we thought about getting Vault to infer keyspace names based on a string transformation of the service name, as most followed a simple rule. But we decided this was fragile. We also knew there was a list of exceptions: some in naming, and a handful of services that do weird stuff, like reading data from other services (every sensible rule comes with some sensible exceptions!).

We also thought about writing a controller that'd write the appropriate roles to Vault when a service was created, and perhaps update them if they change. We wanted there to be a simple way for a service to define what keyspace and cluster it needed, that engineers could see and reason about. But we also didn't love that we'd have to reconcile this with Vault. We'd have critical state in two places, and we'd also have to give a service permissions to write arbitrary roles to Vault, which undermines Vault's security model.

We came up with a way to create 'dynamic' roles

In the end, we realised what we really wanted was a way for Vault to pull in information from Kubernetes about what a service needed access to, and dynamically generate a role for that service, instead of us needing to create each role. Our unit of identity to Vault is the Kubernetes ServiceAccount object, so we decided to add two annotations to this object:

- monzo.com/keyspace: describes the keyspace that this service should get total access to.
- monzo.com/cluster: identifies the Cassandra cluster that this service needs to talk to.

We wrote a check that runs on every pull request and makes sure you provide a keyspace annotation if you use Cassandra.
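As a rough illustration of what such a check could look like (a sketch, not the check described above), the function below inspects a parsed ServiceAccount manifest and reports a problem when the keyspace annotation is missing. The function name and the `uses_cassandra` flag are hypothetical; how a real check decides that a service uses Cassandra is not covered in this post.

```python
from typing import List

KEYSPACE_ANNOTATION = "monzo.com/keyspace"


def check_service_account(manifest: dict, uses_cassandra: bool) -> List[str]:
    """Return a list of problems; an empty list means the check passes.

    `manifest` is a ServiceAccount manifest already parsed into a dict.
    `uses_cassandra` is assumed to be worked out elsewhere (hypothetical).
    """
    if manifest.get("kind") != "ServiceAccount":
        return []  # other object kinds are out of scope for this check

    metadata = manifest.get("metadata") or {}
    annotations = metadata.get("annotations") or {}
    name = metadata.get("name", "<unnamed>")

    problems = []
    if uses_cassandra and not annotations.get(KEYSPACE_ANNOTATION):
        problems.append(f"{name}: missing {KEYSPACE_ANNOTATION} annotation")
    return problems
```

A service that doesn't use Cassandra passes regardless, so the annotation is only required where it matters.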

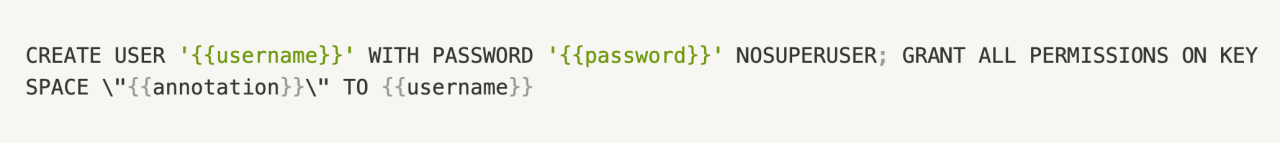

We then needed to come up with a 'default' role in Vault that these values would get interpolated into. We created a role k8s with database name cassandra-premium1 (the cluster most of our services use) and creation statements:

{{username}} and {{password}} are native features of Vault's database plugin: it interpolates ephemeral values for these when a caller requests a credential pair. The {{annotation}} variable is specific to our fork of the plugin: it is replaced with the value of monzo.com/keyspace for the ServiceAccount associated with the caller. The database name is also overridden by the monzo.com/cluster annotation, if provided.

Knowing the ServiceAccount of the caller requires some tricks. Instead of requesting credentials for the role k8s, the caller requests them for the role k8s_s-ledger_default, where s-ledger is the name of their ServiceAccount and default is their Kubernetes namespace. The forked plugin then interprets the name and knows to use the underlying k8s role with the annotation for s-ledger interpolated.
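The name-parsing and interpolation step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the fork's code: the `SERVICE_ACCOUNTS` lookup table, the `ResolvedRole` type and the creation statement template are all hypothetical stand-ins (in particular, the template is not the statement used in the real k8s role). It relies on the fact that Kubernetes ServiceAccount names and namespaces cannot contain underscores, so splitting from the right is unambiguous even when the base role name contains one.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical stand-in for the ServiceAccount lookup: (namespace, name) -> annotations.
SERVICE_ACCOUNTS = {
    ("default", "s-ledger"): {"monzo.com/keyspace": "ledger"},
}

# Illustrative base role. The creation statement is an assumption for this sketch only.
BASE_ROLES = {
    "k8s": {
        "db_name": "cassandra-premium1",
        "creation_statement": "GRANT ALL PERMISSIONS ON KEYSPACE {{annotation}} TO '{{username}}';",
    },
}


@dataclass
class ResolvedRole:
    db_name: str
    creation_statement: str


def parse_role_name(role_name: str) -> Tuple[str, str, str]:
    """Split '<base>_<serviceaccount>_<namespace>' from the right."""
    parts = role_name.rsplit("_", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"not a dynamic role name: {role_name!r}")
    base, service_account, namespace = parts
    return base, service_account, namespace


def resolve_role(role_name: str) -> ResolvedRole:
    """Build the effective role for a caller from the base role and its annotations."""
    base, service_account, namespace = parse_role_name(role_name)
    role = BASE_ROLES[base]
    annotations = SERVICE_ACCOUNTS[(namespace, service_account)]
    # {{username}} and {{password}} are left in place for Vault to fill in later.
    statement = role["creation_statement"].replace(
        "{{annotation}}", annotations["monzo.com/keyspace"]
    )
    # The cluster annotation, if present, overrides the role's database name.
    db_name = annotations.get("monzo.com/cluster", role["db_name"])
    return ResolvedRole(db_name=db_name, creation_statement=statement)
```

With the sample data above, `resolve_role("k8s_s-ledger_default")` yields a role pointing at cassandra-premium1 whose statement grants access to the ledger keyspace.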

We use a Vault policy to enforce that a ServiceAccount can only call the path meant for them:

    # allow creation of database credentials on the rw role at path database/creds/k8s_rw_s-ledger_default
    path "database/creds/k8s_rw_{{identity.entity.aliases.k8s.metadata.service_account_name}}_{{identity.entity.aliases.k8s.metadata.service_account_namespace}}" {
      capabilities = ["read"]
    }

An advantage of this approach is that you can still create a 'real' role with the same naming convention. This lets you specify a more complex creation statement for those few services that, for example, need access to more than one keyspace.

Another option would've been to fork the plugin to instead accept parameters to the credential creation endpoint which could override values in the underlying role. But our approach keeps the API the same by essentially 'simulating' roles for any ServiceAccount. This means existing tools and libraries which know how to request database credentials from Vault still work, if provided the right role name.

We didn't want Vault to look up the ServiceAccount object every time a caller wanted credentials. This would be slow, and if the Kubernetes API servers were down we wouldn't be able to issue credentials. Instead, we set up the plugin to keep an in-memory cache of ServiceAccounts, kept in sync by the standard Kubernetes api machinery libraries. To be extra safe, we also persist this cache in Vault's storage as a fallback, so that a newly started Vault can start serving database requests without waiting for the cache to sync.
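The shape of that cache can be sketched as follows. This is a simplified model, not the plugin's implementation: the class and method names are hypothetical, a plain dict stands in for Vault's storage, and the `on_update`/`on_delete`/`mark_synced` hooks stand in for whatever the Kubernetes watch machinery would call. The choice to stop consulting the persisted copy once the first sync completes is also an assumption of this sketch.

```python
from typing import Dict, Optional

Annotations = Dict[str, str]


class ServiceAccountCache:
    """In-memory cache of ServiceAccount annotations with a persisted fallback."""

    def __init__(self, storage: Dict[str, Annotations]):
        self._memory: Dict[str, Annotations] = {}
        self._storage = storage  # stand-in for Vault's storage backend
        self._synced = False

    def on_update(self, key: str, annotations: Annotations) -> None:
        # Called when a ServiceAccount is added or changed: update both copies.
        self._memory[key] = dict(annotations)
        self._storage[key] = dict(annotations)

    def on_delete(self, key: str) -> None:
        self._memory.pop(key, None)
        self._storage.pop(key, None)

    def mark_synced(self) -> None:
        # Called once the initial listing from Kubernetes has completed.
        self._synced = True

    def get(self, key: str) -> Optional[Annotations]:
        if key in self._memory:
            return self._memory[key]
        if not self._synced:
            # Freshly started: serve the persisted copy until the sync finishes.
            return self._storage.get(key)
        return None
```

The point of the fallback is that a restart never has to wait on the Kubernetes API before credentials can be issued.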

You can see our fork of Vault's database plugin with all these features here. Contributions or suggestions are more than welcome!

We thought hard about resilience

We figured out how to use Vault to manage our credentials pretty quickly. We spent the bulk of the project thinking about how to keep it safe, both during the rollout and in the long term. For starters, we needed to edit our Cassandra client libraries to request credentials from Vault. We were concerned about what happens when a new instance of a service starts and Vault is down. We decided to prevent the service from fully starting and passing Kubernetes readiness checks until it's issued a Vault token. This has the advantage of blocking deployments from progressing while Vault is down, which has benefits for all Vault clients.

We set our credentials to expire every week. They get renewed about 80% of the way into their expiry time, using Vault's renewer library code, which means in practice that if they fail to get renewed, we have at least a day before they actually expire. In general, we handled problems issuing or renewing credentials by retrying with an exponential backoff. When a pod first starts, it will try several times to obtain credentials before returning an error, and then will continue to retry in the background indefinitely. On error, we made it possible to fall back on some hardcoded credentials, which helped us roll out with confidence. We later removed these credentials as we became confident in our library code.
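To make the timing concrete: renewing at 80% of a seven-day lifetime leaves roughly 1.4 days (about 33.6 hours) of headroom. The sketch below computes that, and shows one simple way to generate exponential backoff delays. The function names and the base, factor and cap values are assumptions for illustration, not the settings of Vault's renewer or of the client library described here; a real implementation would usually also add random jitter.

```python
from datetime import timedelta
from typing import Iterator


def renew_after(ttl: timedelta, fraction: float = 0.8) -> timedelta:
    """How long after issue to attempt renewal."""
    return ttl * fraction


def headroom(ttl: timedelta, fraction: float = 0.8) -> timedelta:
    """Time left before expiry if the first renewal attempt fails."""
    return ttl - renew_after(ttl, fraction)


def backoff_delays(base: float = 1.0, factor: float = 2.0, cap: float = 300.0) -> Iterator[float]:
    """Yield retry delays in seconds, growing geometrically up to a cap, forever."""
    delay = base
    while True:
        yield min(delay, cap)
        delay *= factor
```

For example, `headroom(timedelta(days=7))` is a little over a day, and the first five values of `backoff_delays()` are 1, 2, 4, 8 and 16 seconds.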

We also decided to move Vault's storage backend away from Cassandra to S3, to make Vault more available and avoid a near-circular dependency.

Performance was critical

We thought a lot about how to make sure we wouldn't affect the performance of our Cassandra cluster. The username and password are checked against the table only when a session starts, but the permissions of a given user are checked on every query, so it's really important that this check is quick. Fortunately Cassandra has in-memory caches for both users and permissions, so we decided to make the cache size really big, so that every query should hit the cache. This meant the time it takes to verify a query was negligible. The downside is that if credentials expire while a session is open, it takes a few hours for commands to actually start failing. But this wasn't a big concern for us.

One performance issue we noticed in our test environment was that when a session starts, the password is hashed with 10 bcrypt rounds to compare against the table value. This is (deliberately) fairly slow. When a lot of clients connect at once (e.g. when a new node starts), this can burn all the CPU on the node. This actually took down our test cluster when we first enabled auth. We decided to configure Cassandra to only use 4 rounds of bcrypt to hash passwords, which is 64 times faster. The number of rounds doesn't matter much as we use randomly generated passwords with 95 bits of entropy, which would take much longer to crack than the universe has existed, even if you could hash very quickly.
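The arithmetic behind both claims is short. The bcrypt cost parameter is a base-2 logarithm, so going from 10 to 4 cuts the work by a factor of 2^(10-4) = 64. For the brute-force estimate, the sketch below assumes an attacker who can test one billion hashes per second; that rate is an illustrative assumption, and the conclusion scales inversely with it.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60
AGE_OF_UNIVERSE_YEARS = 1.38e10  # approximate


def bcrypt_speedup(old_cost: int, new_cost: int) -> int:
    """Work ratio between two bcrypt cost settings (cost is log2 of the iterations)."""
    return 2 ** (old_cost - new_cost)


def years_to_exhaust(entropy_bits: int, hashes_per_second: float) -> float:
    """Years needed to try every password in a space of 2**entropy_bits."""
    return 2 ** entropy_bits / hashes_per_second / SECONDS_PER_YEAR


speedup = bcrypt_speedup(10, 4)  # 64
years = years_to_exhaust(95, hashes_per_second=1e9)  # on the order of 10**12 years
```

Under that assumed rate, exhausting the password space takes dozens of times the age of the universe, so the reduced cost setting gives up very little.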

Humans need credentials too

There are some use cases where a human needs read-only access to certain keyspaces, or even all keyspaces. To handle this, we adopted a similar approach to shipper secret, the tool we wrote for secret management. We wrote a CLI tool which exchanges a Monzo access token for a Vault access token with permissions based on the caller's team, and is able to ask Vault for credentials.

The tool is called auth, and has an auth cassandra command:

Inside Vault, this is very similar to how services obtain credentials: the tool requests credentials for a particular ServiceAccount, in this case s-ledger. We created a few different roles for this, depending on whether all keyspaces are needed, or just one. We set up some strict auditing on Vault so that we would know when people ask for credentials, and for what keyspaces.

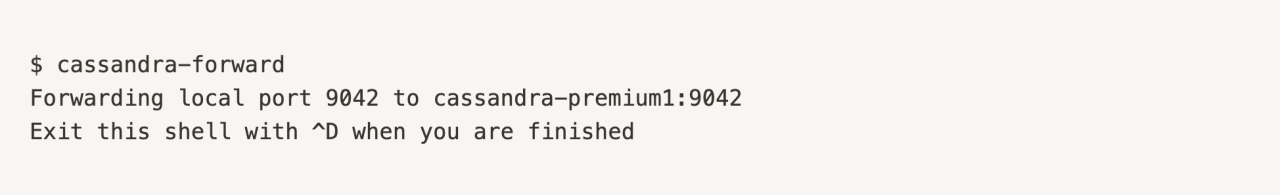

We encouraged people to run cqlsh commands locally, with a port forward set up through a bastion that allowed access to Cassandra's client port:

We planned a rollout

At a high level, there were a few stages to our rollout, which ended up taking quite a long time.

1. First, we came up with a default set of credentials: username monzo, password monzo, which would have access to all keyspaces. We needed services to be able to present these credentials to Cassandra before we could safely tell Cassandra to start asking for them. We updated the configuration of all services to be able to use this.

2. We updated the configuration of all of our Cassandra nodes to enable authentication and authorisation. This step took weeks, because we paused at several points to monitor the performance impact on a subset of nodes.

3. We created a superuser for our Vault plugin, which would be able to create arbitrary other users. We enabled the Vault plugin, injected the password for this superuser, then told Vault to rotate its own password - a really cool feature of Vault!

4. We slowly rolled out code to services which would allow them to request database credentials from Vault, and then switched on this feature in batches of 100 services. We did this over the course of several weeks, as we were concerned about problems that could arise as the number of users grew.

5. We removed the monzo user and changed the password of the cassandra superuser, which is installed by default. From this point, we were enforcing that clients have the tightly scoped, short-lived credentials from Vault.

We decided to go through this full process in our test environment. We also migrated the Cassandra cluster we use to store logs, which handles a lot of load but doesn't need to be as available. This gave us useful insight into failure cases. In that time, we witnessed Vault outages and Cassandra outages in our test and logging environments, and with each problem we improved our monitoring and our controls to make the system more resilient. In the end, we were confident in the change, and going to production was completely routine.

We're now automatically managing and rotating secure, tightly scoped credentials for over 2300 Cassandra clients. The security benefits of this are huge: we've mitigated a major attack vector! In conjunction with network isolation, we've moved a lot closer to our 2020 goal of a completely zero trust platform.