So far, I've explained why you shouldn't migrate to C* and the origins and key terms. Now, I'm going to turn my attention to how Cassandra stores data.

At a very high level, Cassandra operates by dividing all data evenly around a cluster ofnodes, which can be visualized as aring. Nodes generally run on commodity hardware. Each C* node in the cluster is responsible for and assigned atoken range(which is essentially a range of hashes defined by a partitioner, which defaults to Murmur3Partitioner in C* v1.2+). By default this hash range is defined with a maximum number of possible hash values ranging from 0 to 2^127-1.

Each update or addition of data contains a unique row key (also known as a primary key). The primary key is hashed to determine a replica (or node) responsible for a token range inclusive of a given row key. The data is then stored in the cluster n times (where n is defined by the keyspace’s replication factor), or once on each replica responsible a given query’s row key. All nodes in Cassandra are peers and a client’s read or write request can be sent to any node in the cluster, regardless of whether or not that node actually contains and is responsible for the requested data. There is no concept of a master or slave, and nodes dynamically learn about each other and the state and health of other nodes thru the gossip protocol. A node that receives a client query is referred to as the coordinator for the client operation; it facilitates communication between all replica nodes responsible for the query (contacting at least n replica nodes to satisfy the query’s consistency level) and prepares and returns a result to the client.

Reads and Writes

Clients may interface with Cassandra for reads and writes via either the native binary protocol or Thrift. CQL queries can be made over both transports. As a general recommendation, if you are just getting started with Cassandra you should stick to the native binary protocol and CQL and ignore Thrift.

When a client performs a read or write request, the coordinator node contacts the number of required replicas to satisfy the consistency level included with each request. For example, if a read request is processed using QUORUM consistency, and the keyspace was created with a “replication factor” of 3, 2 of the 3 replicas for the requested data would be contacted, their results merged, and a single result returned to the client. With write requests, the coordinator node will send a write requests with all mutated columns to all replica nodes for a given row key.

Processing a Local Update

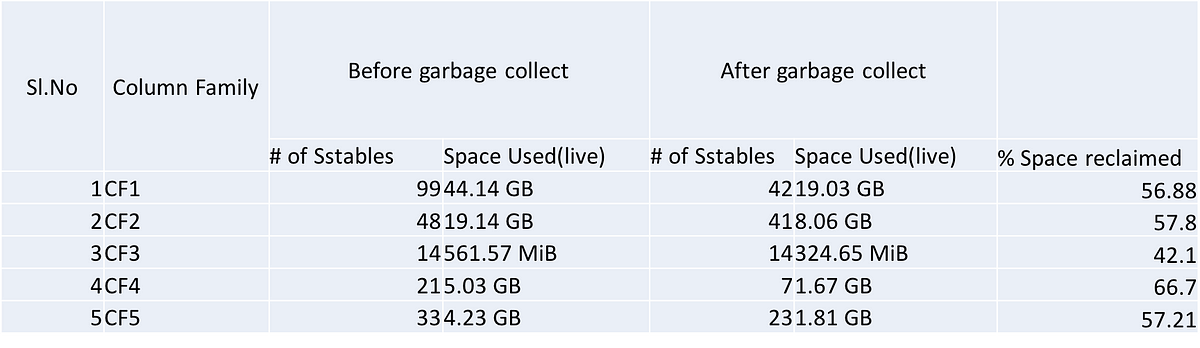

When an update is processed – also known as a mutation -- an entry is first added to the commit log, which ensures durability of the transaction. Next, it is also added to the memtable. A memtable is a bounded in memory write-back cache that contains recent writes which have not yet been flushed to an SSTable (a permanent, immutable, and serialized on disk copy of the tables data). When updates cause a memtable to reach it’s configured maximum in-memory size, the memtable is flushed to an immutable SSTable, persisting the data from the memtable permanently on disk while making room for future updates. In the event of a crash or node failure, events are replayed from the commit log, which prevents the loss of any data from memtables that had not been flushed to disk prior to an unexpected event such as a power outage or crash.

CAP Theorem: Why you should know it

Finally, before we discuss some Cassandra specific capabilities, features, and limitations, I’d like to introduce an important theorem known as the CAP theorem. This is an important theorem to be aware of when first starting with Cassandra and distributed systems in general. Authored by Eric Brewer, the theorem states that it is impossible for a distributed system to provide all three guarantees: 1) Consistency 2) Availability and 3) Partition tolerance simultaneously. So at best, a distributed system will only ever be able to provide 2 of the 3 guarantees. Keep this theorem in mind, as it will help you understand some of Cassandra’s (a distributed database) limitations.

Stay tuned this week for more posts from Michael Kjellman. This post is an excerpt from 'Frustrated with MySQL? Improving the scalability, reliability and performance of your application by migrating from MySQL to Cassandra.' In the meantime, check out our other Cassandra Week posts.