My patch mentioned in this post (RFE-7068625) for the JVM garbage collector was accepted into the HotSpot JDK code base and is available starting from the 7u40 version of the HotSpot JVM from Oracle.

This gave me a reason to redo some of my GC benchmarking experiments. I have already mentioned ParGCCardsPerStrideChunk in the article related to the patch. This time, I decided to study the effect of this option more closely.

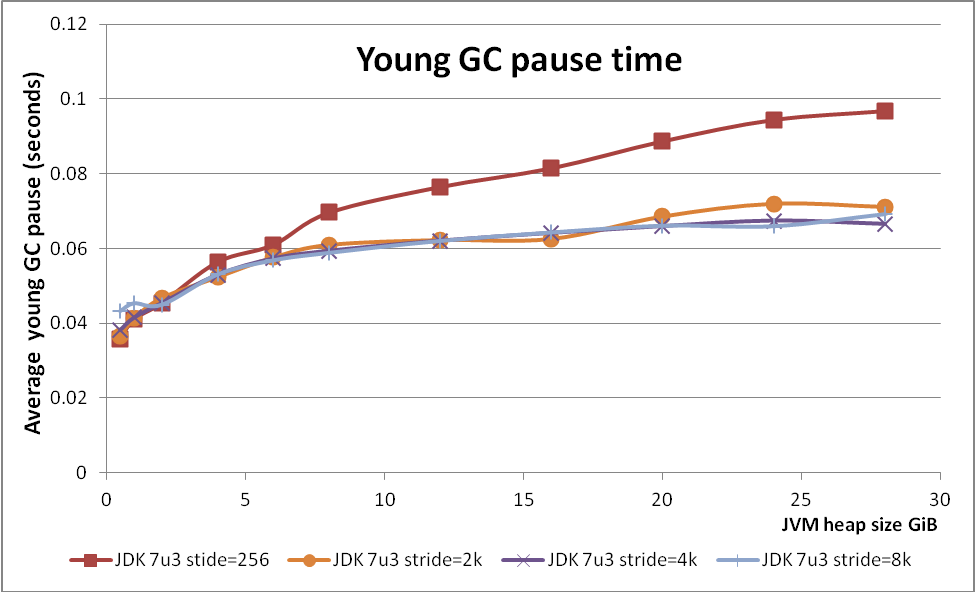

The parallel copy collector (ParNew), responsible for young collection in CMS, uses the ParGCCardsPerStrideChunk value to control the granularity of tasks distributed between worker threads. Old space is broken into strides of equal size, and each worker is responsible for processing a subset of strides (finding dirty cards, finding old-to-young references, copying young objects, etc.). The time to process each stride may vary greatly, so workers may steal work from each other. For that reason, the number of strides should be greater than the number of workers.
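To illustrate why finer-grained tasks help when per-stride cost varies, here is a minimal Java sketch. It is not HotSpot's implementation: instead of real stride assignment and task stealing, workers simply claim the next stride index from a shared counter, which gives a similar balancing effect. The class name `StrideClaimSketch` and the methods `processStrides` and `scanStride` are hypothetical, and `scanStride` only simulates uneven work.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class StrideClaimSketch {

    // Stand-in for scanning one stride; the cost varies with the stride index
    // to mimic strides with different numbers of dirty cards (an assumption).
    static long scanStride(int stride) {
        long work = 1_000L * (1 + stride % 7);
        long acc = 0;
        for (long i = 0; i < work; i++) {
            acc += i ^ stride;
        }
        return acc;
    }

    // Processes strides [0, strideCount) with the given number of worker threads.
    // Returns how many strides each worker ended up handling.
    static int[] processStrides(int strideCount, int workers) {
        AtomicInteger next = new AtomicInteger(0);
        int[] handled = new int[workers];
        Thread[] threads = new Thread[workers];

        for (int w = 0; w < workers; w++) {
            final int id = w;
            threads[w] = new Thread(() -> {
                int stride;
                // Each worker claims the next unprocessed stride until none are left,
                // so a worker stuck on an expensive stride does not hold up the others.
                while ((stride = next.getAndIncrement()) < strideCount) {
                    scanStride(stride);
                    handled[id]++; // each worker writes only its own slot
                }
            });
            threads[w].start();
        }

        for (Thread t : threads) {
            try {
                t.join(); // join makes the worker's writes visible to this thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", e);
            }
        }
        return handled;
    }

    public static void main(String[] args) {
        int[] handled = processStrides(1_000, 4);
        for (int i = 0; i < handled.length; i++) {
            System.out.println("worker " + i + " handled " + handled[i] + " strides");
        }
    }
}
```

With only as many strides as workers, one expensive stride would determine the whole pause. With many more strides than workers, the load evens out, at the price of more claiming overhead per stride.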

By default ParGCCardsPerStrideChunk=256 (a card is 512 bytes, so that is 128KiB of heap space per stride), which means that a 28GiB heap would be broken into about 229 thousand strides (224Ki). Given that the number of parallel GC threads is usually 4 orders of magnitude smaller, this is probably too many.
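The stride arithmetic above can be checked with a few lines of Java. This is only a sketch: the class `StrideMath` and its methods are hypothetical, the 512-byte card size is taken from the text, and, as in the text, the whole 28GiB heap is treated as the space being split into strides. The value 4096 is an assumed reading of "stride=4k" in the result tables.

```java
public class StrideMath {

    // Card size as stated in the article: one card covers 512 bytes of heap.
    static final long CARD_SIZE_BYTES = 512;

    // Heap bytes covered by one stride for a given ParGCCardsPerStrideChunk value.
    static long strideSizeBytes(long cardsPerStride) {
        return cardsPerStride * CARD_SIZE_BYTES;
    }

    // Number of strides needed to cover the given space (rounded up).
    static long strideCount(long spaceBytes, long cardsPerStride) {
        long stride = strideSizeBytes(cardsPerStride);
        return (spaceBytes + stride - 1) / stride;
    }

    public static void main(String[] args) {
        long space = 28L << 30; // 28 GiB

        System.out.println(strideSizeBytes(256) / 1024 + " KiB per stride"); // 128 KiB per stride
        System.out.println(strideCount(space, 256));  // 229376 (224 * 1024)
        System.out.println(strideCount(space, 4096)); // 14336
    }
}
```

If "stride=4k" does mean a value of 4096, each stride covers 2MiB and the same heap is split into about 14 thousand strides, which is still far more than a typical number of GC worker threads. To my knowledge the option is a diagnostic one in HotSpot, so setting it would look like `-XX:+UnlockDiagnosticVMOptions -XX:ParGCCardsPerStrideChunk=4096`; check this against your JVM version.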

Synthetic benchmark

The benchmark above is synthetic and very simple. The next step is to choose a more realistic use case. As usual, my choice is to use an Oracle Coherence storage node as my guinea pig.

Benchmarking Coherence storage node

Coherence node with 28GiB of heap

| JVM | Avg. pause | Improvement |
|---|---|---|
| 7u3 | 0.0697 | 0 |
| 7u3, stride=4k | 0.045 | 35.4% |
| | 0.0546 | 21.7% |
| Patched OpenJDK 7, stride=4k | 0.0284 | 59.3% |

Coherence node with 14GiB of heap

| JVM | Avg. pause | Improvement |
|---|---|---|
| 7u3 | 0.05 | 0 |
| 7u3, stride=4k | 0.0322 | 35.6% |
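The "Improvement" column is consistent with the relative reduction of the average pause against the 7u3 baseline in the same table. The sketch below recomputes it; the class `PauseImprovement` and its method are hypothetical names, and the formula is inferred from the published numbers rather than stated in the text.

```java
import java.util.Locale;

public class PauseImprovement {

    // Relative reduction of the average pause versus the baseline, in percent.
    static double improvementPercent(double baselinePause, double tunedPause) {
        return (baselinePause - tunedPause) / baselinePause * 100.0;
    }

    public static void main(String[] args) {
        // 28GiB node: 7u3 baseline vs. 7u3 with stride=4k
        System.out.println(String.format(Locale.ROOT, "%.1f%%", improvementPercent(0.0697, 0.045)));
        // 28GiB node: 7u3 baseline vs. patched OpenJDK 7 with stride=4k
        System.out.println(String.format(Locale.ROOT, "%.1f%%", improvementPercent(0.0697, 0.0284)));
        // 14GiB node: 7u3 baseline vs. 7u3 with stride=4k
        System.out.println(String.format(Locale.ROOT, "%.1f%%", improvementPercent(0.05, 0.0322)));
    }
}
```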