Running Cassandra in Kubernetes presents some interesting challenges. Performance is almost always an important consideration with Cassandra, no less so when running in Kubernetes. In this post, I demonstrate how to deploy tlp-stress in Kubernetes and run it against a Cassandra cluster that is also running in the same Kubernetes cluster.

tlp-stress is a workload-centric tool for stress testing and benchmarking Cassandra. It is developed and maintained by my colleagues at The Last Pickle. If you are not familiar with it, check out this introductory article along with this one for a great overview.

Enough with formalities. Let’s get to the good stuff!

To run tlp-stress in Kubernetes we need a Docker image. The project does not (yet) publish Docker images, but it is easy to create one.

First, I need to clone the git repo:

$ git clone https://github.com/thelastpickle/tlp-stress.git

Take a look at build.gradle. Around line 12 you should see a plugins section that looks like:

plugins {
    id 'com.google.cloud.tools.jib' version '0.9.11'
}

jib is a project that builds optimized Docker images for Maven and Gradle projects. It builds the image without using Docker, which means you do not need the Docker daemon running locally. Pretty cool. The images are optimized in that they use distroless base images. Distroless images are minimal images that contain only the application and its runtime dependencies. This results in very small image sizes.

In my local branch I have updated the jib plugin to the latest version, which is 1.3.0 at the time of this writing.
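The version change can also be made from the command line. The helper below is only a sketch: `bump_jib_version` is a hypothetical name, and it assumes the plugin line in build.gradle has exactly the form shown above and that nothing else in the build needs to change for the newer plugin, which this post does not verify.

```shell
# Hypothetical helper: rewrite the jib plugin version in a Gradle build file.
# Assumes a plugin line of the form:
#   id 'com.google.cloud.tools.jib' version '0.9.11'
bump_jib_version() {
    file="$1"
    version="$2"
    tmp="$(mktemp)" || return 1
    # Replace whatever sits between the quotes after "version" on the jib line.
    if sed "s/\(id 'com\.google\.cloud\.tools\.jib' version '\)[^']*'/\1${version}'/" "$file" > "$tmp"; then
        # Write back in place so the original file keeps its permissions.
        cat "$tmp" > "$file"
    fi
    rm -f "$tmp"
}

# Usage, from the root of the tlp-stress checkout:
#   bump_jib_version build.gradle 1.3.0
```

Editing the line by hand works just as well; the helper is mainly useful if the build is scripted.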

To create and push the image to docker hub I run:

# If you are following the steps, be sure to replace jsanda with your Docker Hub username
$ ./gradlew jib --image=jsanda/tlp-stress:demo

You will find the image on docker hub here.

We need a Cassandra cluster running before we can successfully deploy tlp-stress. I am not going to cover how to deploy Cassandra in Kubernetes in this article. If you are not familiar with deploying Cassandra in Kubernetes, check out Deploying Cassandra with Stateful Sets. In future posts, however, I will explore more robust deployment options.

For now, let’s take a look at a small cluster that I have running in GKE:

$ kubectl get statefulset
NAME                       READY   AGE
cassandra-demo-dc1-rack1   2/2     4m21s

$ kubectl get svc
NAME             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                               AGE
cassandra-demo   ClusterIP   None         <none>        7000/TCP,7001/TCP,7199/TCP,9042/TCP   5m45s

The stateful set manages the two pods in which Cassandra runs. The headless service allows clients, like tlp-stress, to connect to the Cassandra cluster.
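To confirm that the service name resolves from inside the cluster before pointing tlp-stress at it, a throwaway pod can run a DNS lookup. This is a sketch rather than part of the original setup: the pod name `dns-check` and the `busybox:1.28` image are assumptions, and the lookup has to run in the same namespace as the service for the short name to resolve.

```shell
# Run a one-off pod that looks up the given service name, then clean it up.
# "dns-check" and busybox:1.28 are illustrative choices, not from the post.
check_service_dns() {
    service="$1"
    kubectl run dns-check --rm -i --restart=Never --image=busybox:1.28 -- nslookup "$service"
}

# Usage:
#   check_service_dns cassandra-demo
```

For a headless service, the lookup should return the addresses of the Cassandra pods rather than a single cluster IP.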

tlp-stress is not designed to run indefinitely. It terminates when it finishes. It is therefore better suited to run as a Job than as a Deployment.

Here is the job spec:

apiVersion: batch/v1
kind: Job
metadata:
  name: tlp-stress
spec:
  template:
    spec:
      restartPolicy: OnFailure
      containers:
      - name: tlp-stress
        image: jsanda/tlp-stress:demo
        imagePullPolicy: IfNotPresent
        args: ["run", "KeyValue", "--host", "cassandra-demo", "--duration", "30m"]
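If you want to launch several runs with different targets or durations, the same spec can be generated from a small shell function instead of being edited by hand. This is a sketch under assumptions: `render_job` is a hypothetical helper, and the job name, host, and duration are the only fields it varies.

```shell
# Hypothetical helper: print a tlp-stress Job manifest to stdout.
# Arguments: job name, Cassandra service host, run duration (for example 30m).
render_job() {
    name="$1"
    host="$2"
    duration="$3"
    cat <<EOF
apiVersion: batch/v1
kind: Job
metadata:
  name: ${name}
spec:
  template:
    spec:
      restartPolicy: OnFailure
      containers:
      - name: tlp-stress
        image: jsanda/tlp-stress:demo
        imagePullPolicy: IfNotPresent
        args: ["run", "KeyValue", "--host", "${host}", "--duration", "${duration}"]
EOF
}

# Usage: write the manifest to a file, or pipe it straight to kubectl.
#   render_job tlp-stress cassandra-demo 30m > tlp-stress-job.yaml
#   render_job tlp-stress-short cassandra-demo 10m | kubectl apply -f -
```

Giving each run its own job name avoids a clash with a job of the same name that already exists in the namespace.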

Next, deploy the job:

$ kubectl apply -f tlp-stress-job.yaml

Check that the job is running:

$ kubectl get jobs
NAME         COMPLETIONS   DURATION   AGE
tlp-stress   0/1           92s        92s

$ kubectl get pods -l job-name=tlp-stress
NAME               READY   STATUS    RESTARTS   AGE
tlp-stress-hwd69   1/1     Running   0          2m57s
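Beyond listing the job and its pod, it can be handy to stream the job's output and to block until the run finishes. The helpers below are a sketch: the function names are hypothetical, and the 35m default timeout is an assumption chosen to sit a little above the 30m run duration used in the job spec.

```shell
# Stream the logs of the pod that belongs to the given Job.
follow_job_logs() {
    job="$1"
    kubectl logs --follow "job/$job"
}

# Block until the Job reports the Complete condition, or give up after the timeout.
# Note that this does not return early if the Job fails instead of completing.
wait_for_job() {
    job="$1"
    timeout="${2:-35m}"
    kubectl wait --for=condition=complete --timeout="$timeout" "job/$job"
}

# Usage:
#   follow_job_logs tlp-stress
#   wait_for_job tlp-stress 35m
```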

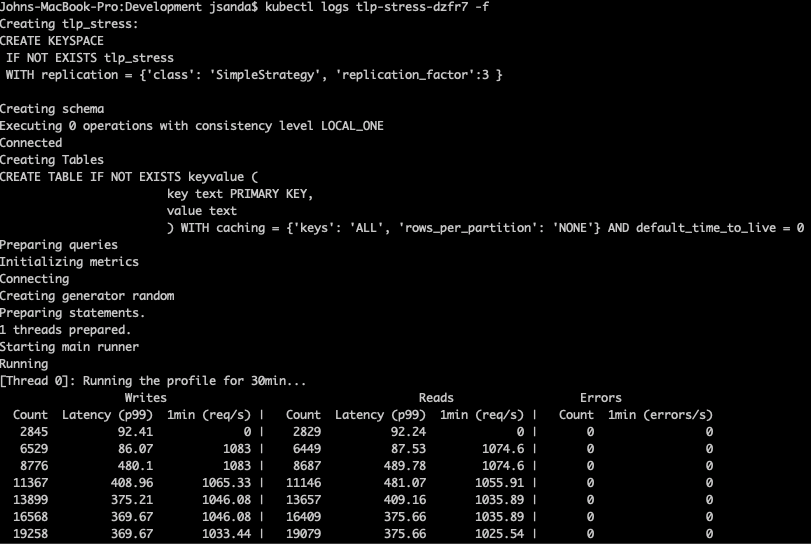

Here is a screenshot of the pod logs that verify tlp-stress is running:

This post demonstrates how to quickly and easily get tlp-stress running in Kubernetes. This is just a PoC to show how things work. In future posts, I will report on testing some real-world cluster configurations. It will be interesting to see how Cassandra performance compares when running inside versus outside of Kubernetes.