Previously on Jepsen, we learned about Kafka’s proposed replication design.

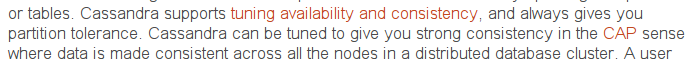

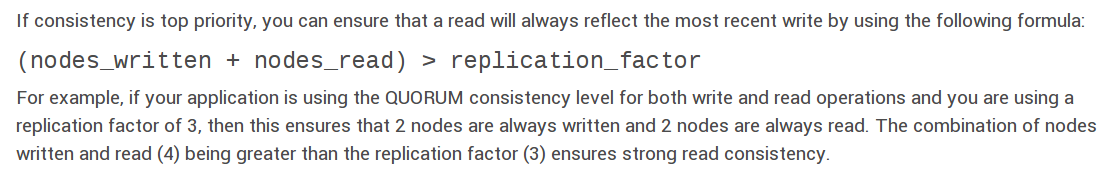

Cassandra is a Dynamo system; like Riak, it divides a hash ring into a several chunks, and keeps N replicas of each chunk on different nodes. It uses tunable quorums, hinted handoff, and active anti-entropy to keep replicas up to date. Unlike the Dynamo paper and some of its peers, Cassandra eschews vector clocks in favor of a pure last-write-wins approach.

Some Write Loses

If you read the Riak article, you might be freaking out at this point. In Riak, last-write-wins resulted in dropping 30-70% of writes, even with the strongest consistency settings (R=W=PR=PW=ALL), even with a perfect lock service ensuring writes did not occur simultaneously. To understand why, I’d like to briefly review the problem with last-write-wins in asynchronous networks.

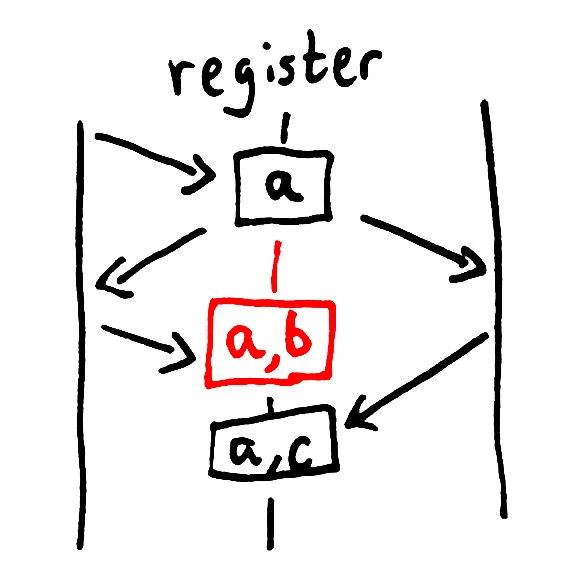

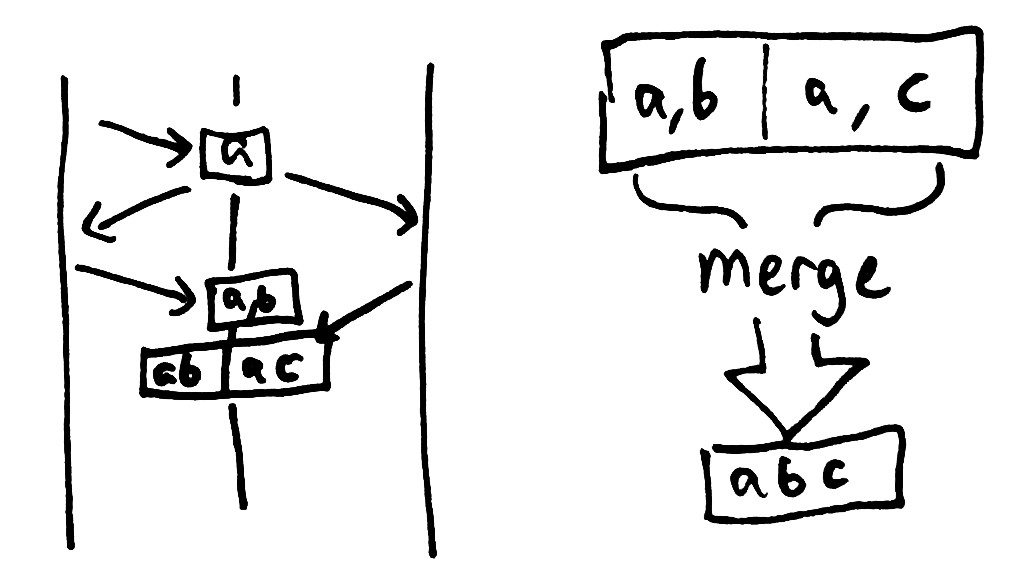

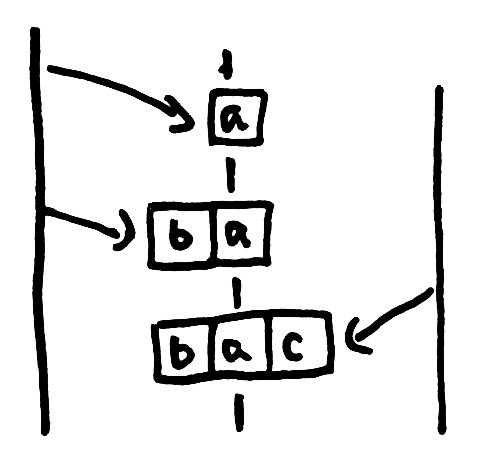

In this causality diagram, two clients (far left and far right) add the elements “a”, “b”, and “c” to a set stored in an LWW register (middle line). The left client adds a, which is read by both clients. One client adds b, constructing the set [a b]. The other adds c, constructing the set [a c]. Both write their values back. Because the register is last-write-wins, it preserves whichever arrives with the highest timestamp. In this case, it’s as if the write from the client on the left never even happened. However, it could just as easily have discarded the write from the right-hand client. Without a strong external coordinator, there’s just no way to tell whose data will be preserved, and whose will be thrown away.

Again: in an LWW register, the only conditions under which you can guarantee your write will not be silently ignored are when the register’s value is immutable. If you never change the value, it doesn’t matter which copy you preserve.

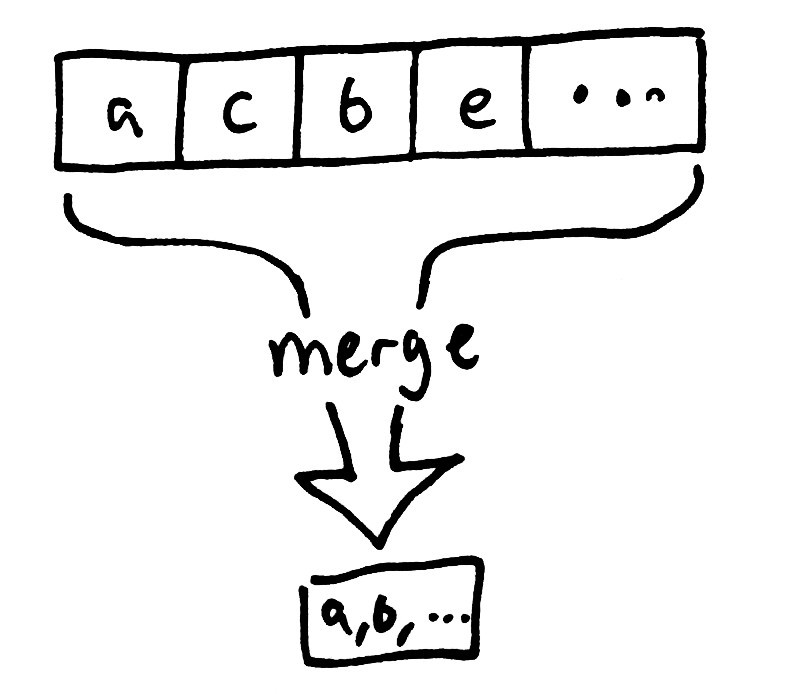

Vector clocks avoid this problem by identifying conflicting writes, and allowing you to merge them together.

Because there’s no well-defined order for potential conflicts, the merge function needs to be associative, commutative, and idempotent. If it satisfies those three properties (in essence, if you can merge any values in any order and get the same result), the system forms a semilattice known as a CRDT, and you recover a type of order-free consistency known as lattice consistency. Last-write-wins is a particular type of CRDT–albeit, not a particularly good one, because it destroys information nondeterministically.

Early in Cassandra’s history, Cassandra chose not to implement vector clocks for performance reasons. Vclocks (typically) require a read before each write. By using last-write-wins in all cases, and ignoring the causality graph, Cassandra can cut the number of round trips required for a write from 2 to 1, and obtain a significant speedup. The downside is that there is no safe way to modify a Cassandra cell.

Some people claim you can serialize updates to a cell by perfectly synchronizing your clocks, using ConsistencyLevel.QUORUM or ALL, and using an external lock service to prevent simultaneous operations. Heck, the official Cassandra documentation even claims this:

As we’ll see throughout this post, the Cassandra documentation can be less than accurate. Here’s a Jepsen test which mutates the same cell repeatedly, using perfectly synchronized clocks, QUORUM consistency, and a perfect lock service:

$ lein run lock cassandra

...

Writes completed in 200.036 seconds

2000 total

1009 acknowledged

724 survivors

285 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

1 3 6 8 11 13 ... 1986 1988 1991 1993 1996 1998

0.5045 ack rate

0.2824579 loss rate

0.0 unacknowledged but successful rateLosing 28% of your supposedly committed data is not linearizable by any definition. Next question.

CQL and CRDTs

Without vector clocks, Cassandra can’t safely change a cell–but writing immutable data is safe. Consequently, Cassandra has evolved around those constraints, allowing you to efficiently journal thousands of cells to a single row, and to retrieve them in sorted order. Instead of modifying a cell, you write each distinct change to its own UUID-keyed cell. Then, at read time, you read all the cells back and apply a merge function to obtain a result.

Cassandra’s query language, CQL, provides some collection-oriented data structures around this model: sets, lists, maps, and so forth. They’re CRDTs, though the semantics don’t align with what you’ll find in the INRIA paper–no G-sets, 2P-sets, OR-sets, etc. However, some operations are safe–for instance, adding elements to a CQL set:

0 unrecoverable timeouts

Collecting results.

Writes completed in 200.036 seconds

2000 total

2000 acknowledged

2000 survivors

All 2000 writes succeeded. :-DThat’s terrific! This is the same behavior we saw with G-sets in Riak. However, not all CQL collection operations are intuitively correct. In particular, I’d be wary of the index-based operations for lists, updating elements in a map, and any type of deletions. Deletes are implemented by writing special tombstone cells, which declare a range of other cells to be ignored. Because Cassandra doesn’t use techniques like OR-sets, you can potentially delete records that haven’t been seen yet–even delete writes from the future. Cassandra users jokingly refer to this behavior as “doomstones”.

The important thing to remember is that because there are no ordering constraints on writes, one’s merge function must still be associative and commutative. Just as we saw with Riak, AP systems require you to reason about order-free data structures. In fact, Cassandra and Riak are (almost) formally equivalent in their consistency semantics–the primary differences are in the granularity of updates, in garbage collection/history compaction, and in performance.

Bottom line: CQL collections are a great idea, and you should use them! Read the specs carefully to figure out whether CQL operations meet your needs, and if they don’t, you can always write your own CRDTs on top of wide rows yourself.

Counters

If you’re familiar with CRDTs, you might be wondering whether Cassandra’s counter type is a PN-counter–a commutative, monotonic data structure which can be incremented and decremented in an eventually consistent way. The answer is no: Cassandra (via Twitter, politics, etc), wound up with a less safe type of data structure. Consequently, Cassandra counters will over- or under-count by a wide range during a network partition.

If partitioned for about half of the test run, I found counters could drift by up to 50% of the expected value. Here’s a relatively well-behaved run, drifting by less than a percent.

10000 total

9700 acknowledged

9921 survivorsIsolation

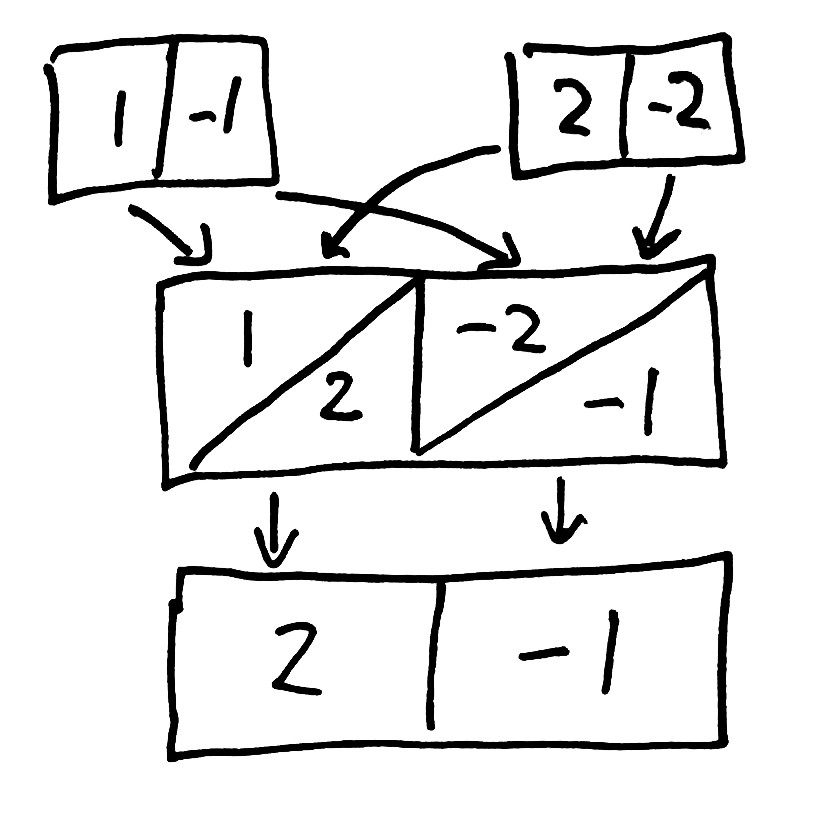

In Coming up in Cassandra 1.1: Row Level Isolation, and Atomic batches in Cassandra 1.2, DataStax asserts that a write which updates multiple keys in the same row will be atomic and isolated.

Cassandra 1.1 guarantees that if you update both the login and the password in the same update (for the same row key) then no concurrent read may see only a partial update.

And from the official documentation on concurrency control:

Full row-level isolation is now in place so that writes to a row are isolated to the client performing the write and are not visible to any other user until they are complete. From a transactional ACID (atomic, consistent, isolated, durable) standpoint, this enhancement now gives Cassandra transactional AID support.

We know what “atomic” means: either all of the changes in the transaction complete, or none of them do. But what does “isolated” mean? Isolated in the sense of ACID? Let’s ask Hacker News what they think Cassandra’s isolation provides:

Peter Bailis pointed me at two really excellent papers on isolation and consistency, including Berenson et al’s A Critique of ANSI SQL Isolation Levels–I really recommend digging into them if you’re curious about this problem. Isolation comes in many flavors, or strengths, depending on what sorts of causal histories are allowed. Serializability is one of the strongest: all transactions appear to occur in a single well-defined non-interleaved order. Cursor Stability (CS) and Snapshot Isolation (SI) are somewhat weaker.

ANSI SQL defines four levels of isolation, which really have more to do with the historical behavior of various database systems than with behavior that any sane person would consider distinguishible, so I’m not going to get into the details–but suffice it to say that there are a range of phenomena which are prohibited by those isolation levels. In order from least to most awful:

- P3: Phantom

- P2: Fuzzy read

- P1: Dirty read

- P0: Dirty write

ANSI SQL’s SERIALIZABLE level prohibits P3-P0; REPEATABLE READ prohibits P2 and below, READ COMMITTED prohibits P1 and below, and READ UNCOMMITTED only prohibits P0.

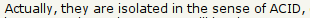

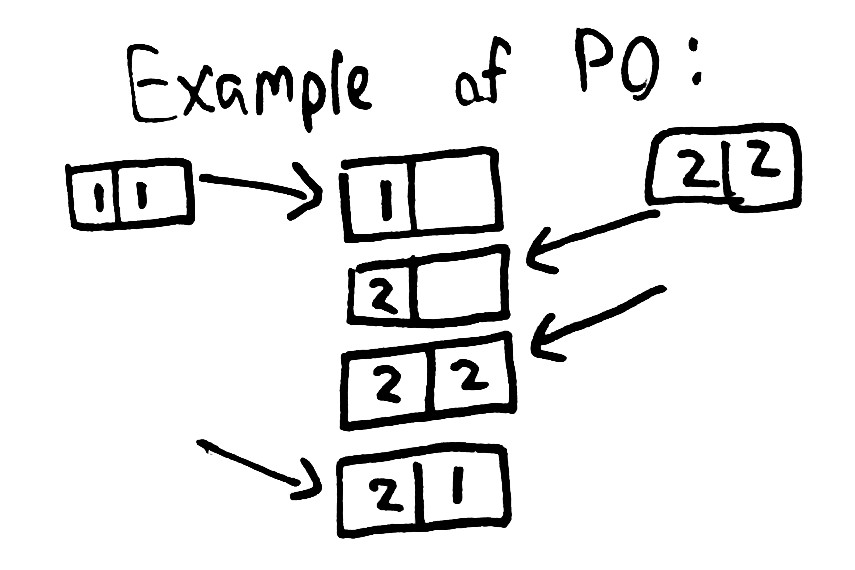

P0, or “dirty write” is especially important because all isolation levels must prohibit it. In P0, one transaction modifies some data; then a second transaction also modifies that data, before the first transaction commits. We never want writes from two different transactions to be mixed together, because it might violate integrity relationships which each transaction held independently. For instance, we might write [x=1, y=1] in one transaction, and [x=2, y=2] in a different transaction, assuming that x will always be equal to y. P0 allows those transactions to result in [x=1, y=2], or [x=2, y=1].

Cassandra allows P0.

The key thing to remember here is that in Cassandara, the order of writes is completely irrelevant. Any write made to the cluster could eventually wind up winning, if it has a higher timestamp. But–what happens if Cassandra sees two copies of a cell with the same timestamp?

It picks the lexicographically bigger value.

That means that if the values written to two distinct cells don’t have the same sort order (which is likely), Cassandra could pick final cell values from different transactions. For instance, we might write [1 -1] and [2 -2]. 2 is greater than 1, so the first cell will be 2. But -1 is bigger than -2, so -1 wins in the second cell. The result? [2 -1].

“But,” you might protest, “In order for that to happen, you’d need two timestamps to collide. It’s really unlikely that two writes will get the same microsecond-resolution timestamp, right? I’ve never seen it happen in my cluster.”

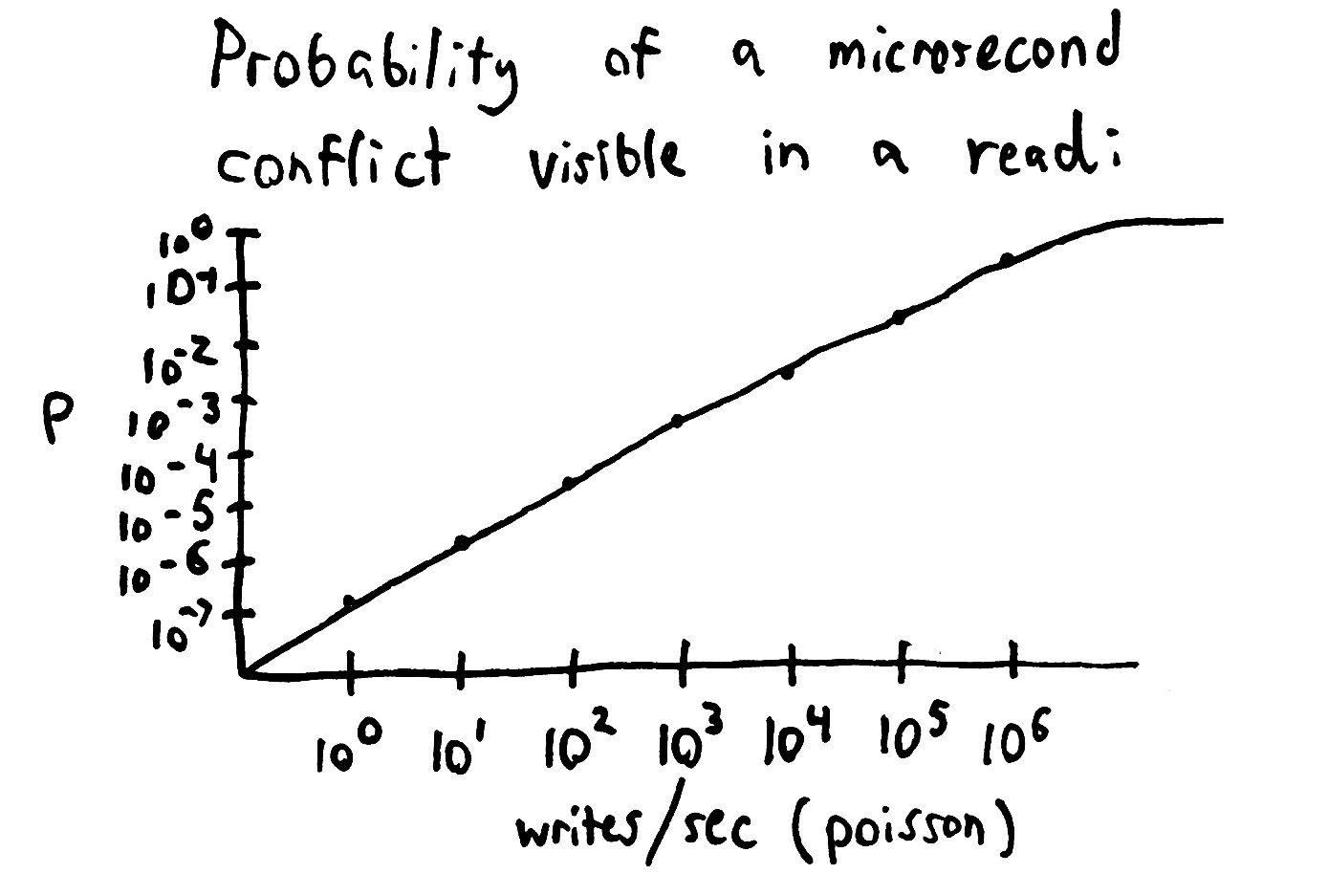

Well, it depends. If we assume N writes per second by Poisson processes to the same row, the probability of any given read seeing a conflicting value grows as the writes come closer together.

rate probability of conflict/read ------------------------------------ 1 1.31E-7 10 5.74E-6 100 5.30E-5 1000 5.09E-4 10000 0.00504 100000 0.0492 1000000 0.417

So if you do 100,000 writes/sec, on any given read you’ve got a 5% chance of seeing corrupt data. If you do 10 writes/sec and 1 read/sec, in each day you’ve got about a 1/3 chance of seeing corrupt data in any given day.

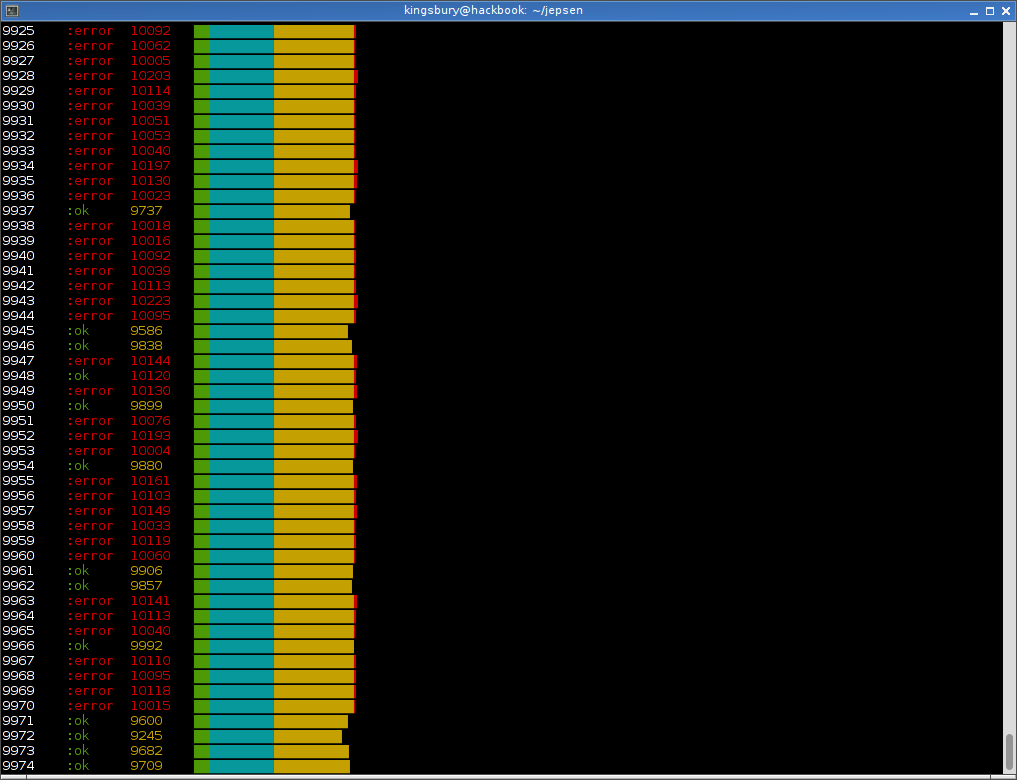

What if you write many rows over time–maybe 2 writes to each row, separated by a mean delta of 100 milliseconds? Then the theoretical probability of any given row being corrupt is about 5 × 10-6. That’s a pretty small probability–and remember, most applications can tolerate some small degree of corrupt data. Let’s confirm it with an experiment:

10000 total

9899 acknowledged

9942 survivors

58 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

127 253 277 339 423 434 ... 8112 8297 8650 8973 9096 9504

101 unacknowledged writes found! ヽ(´ー`)ノ

1059 1102 1139 1142 1143 1158 ... 2701 2720 2721 2800 2815 2860

0.9899 ack rate

0.0058591776 loss rate

0.01020305 unacknowledged but successful rateNote that “writes lost” here means corrupted rows: entirely missing rows are treated as successes. Roughly 1 in 200 rows were corrupt! That’s way worse than 10-6! What gives?

It turns out that somewhere in this maze of software, either Cassandra, the DataStax Java driver, or Cassaforte is taking the current time in milliseconds and tacking on three zeroes to the end, calling it good. The probability of millisecond conflicts is significantly higher than microsecond conflicts, which is why we saw so much corrupt data.

Long story short, Cassandra row isolation is probabilistic at best; and remember, the only reason you actually want isolation is because you plan on doing two operations at the same time. If you rely on isolation, in any sense of the word, in Cassandra, you need to consider your tolerance for data corruption, and verify that you’re actually generating timestamps with the expected distribution. A strong external coordinator which guarantees unique timestamps might be of use.

Lightweight Transactions

In Cassandra 2.0.0, Lightweight Transactions offer linearizable consistency for compare-and-set operations. The implementation is based on naive Paxos–requiring four round trips for each write–but the performance can be improved with time. The important thing is that Cassandra is first to have a distributed linearizable data store, or something.

That said, sometimes you really do need linearizable operations. That’s why we added lightweight transactions in Cassandra 2.0 This is a sign of Cassandra maturing — Cassandra 1.0 (released October 2011) was the fulfilment of its designers original vision; Cassandra 2.0 takes it in new directions to make it even more powerful.

Open source has had the reputation of producing good imitations, but not innovation. Perhaps Cassandra’s origins as a hybrid of Dynamo and Bigtable did not disprove this, but Apache Cassandra’s development of lightweight transactions and CQL are true industry firsts.

The first thing you’ll notice if you try to test the new transaction system is that the Java driver doesn’t support it. It’ll throw some weird exceptions like “unknown consistency level SERIAL”, because it doesn’t support the v2 native Cassandra protocol yet. So you’ll need to use the Python Thrift client, or, in my case, get a patched client from DataStax.

The second thing you’ll notice is deadlocks. In my Jepsen tests, the cluster would go unresponsive after the first 10 or so transactions–and it would never recover. Any further attempts to modify a cell via transaction would spin endlessly in failed transactions, until I manually truncated the system.paxos table.

You can’t make this shit up.

So you confer with DataStax for a while, and they manage to reproduce and fix the bug: #6029 (Lightweight transactions race render primary key useless), and #5985 (Paxos replay of in progress update is incorrect). You start building patched versions of Cassandra.

git checkout paxos-fixed-hopefullyLet’s give it a whirl. In this transaction test, we perform repeated compare-and-set operations against a single cell, retrying failed attempts for up to 10 seconds. The first thing you’ll notice is that those four round-trips aren’t exactly lightweight, which means that at 50 transactions/sec, the majority of transaction attempts time out:

But we’re less concerned with performance or availability than safety. Let’s slow down the test to 5 transactions/sec to reduce contention, and check: are lightweight transactions actually linearizable?

2000 total

829 acknowledged

827 survivors

3 acknowledged writes lost! (╯°□°)╯︵ ┻━┻

(102 1628 1988)

1 unacknowledged writes found! ヽ(´ー`)ノ

(283)

0.4145 ack rate

0.0036188178 loss rate

0.0012062726 unacknowledged but successful rateNo. Cassandra lightweight transactions are not even close to correct. Depending on throughput, they may drop anywhere from 1-5% of acknowledged writes–and this doesn’t even require a network partition to demonstrate. It’s just a broken implementation of Paxos. In addition to the deadlock bug, these Jepsen tests revealed #6012 (Cassandra may accept multiple proposals for a single Paxos round) and #6013 (unnecessarily high false negative probabilities).

Paxos is notoriously difficult to implement correctly. The Chubby authors note:

Our tests start in safety mode and inject random failures into the system. After running for a predetermined period of time, we stop injecting failures and give the system time to fully recover. Then we switch the test to liveness mode. The purpose for the liveness test is to verify that the system does not deadlock after a sequence of failures.

This test proved useful in finding various subtle protocol errors, including errors in our group membership implementation, and our modifications to deal with corrupted disks…. We found additional bugs, some of which took weeks of simulated execution time (at extremely high failure rates) to find.

Our hooks can be used to crash a replica, disconnect it from other replicas for a period of time or force a replica to pretend that it is no longer the master. This test found five subtle bugs in Chubby related to master failover in its first two weeks.

And in particular, I want to emphasize:

By their very nature, fault-tolerant systems try to mask problems. Thus they can mask bugs or configuration problems while insidiously lowering their own fault-tolerance.

The bugs I found were low-hanging fruit: anyone who ran a few hundred simple transactions could reproduce them, even without causing a single node or network failure. Why didn’t DataStax catch this in the release process? Why publish glowing blog posts and smug retrospectives if the most fundamental safety properties of the application haven’t been trivially verified? And if I hadn’t reported these bugs, how many users do you suppose would have been subject to silent data loss or corruption in prod?

I can’t say this strongly enough: One way or another, software is always tested: either by the maintainers, by users, or by applications in production. One of my goals in this series is to push database vendors to test their software prior to release, so that we can all enjoy safer, faster systems. If you’re writing a database, please try to verify its correctness experimentally. You don’t need to do a perfect job–testing is tough!–but a little effort can catch 90% of the bugs.

Final thoughts

DataStax and the open-source community around Cassandra have been working hard on the AP storage problem for several years, and it shows. Cassandra runs on thousand-node clusters and accepts phenomenal write volume. It’s extraordinarily suited for high-throughput capture of immutable or otherwise log-oriented data, and its AAE and tunable durability features work well. It is, in short, a capable AP datastore, and though I haven’t deployed it personally, many engineers I respect recommend it from their production experience wholeheartedly.

Jonathan Ellis, Aleksey Yeschenko, and Patrick McFadin were all very helpful in helping me understand Cassandra’s model, and I hope that I have depicted it accurately here. Any errors are mine alone. I’m especially thankful that they volunteered so much of their time on nights and weekends to help someone tear apart their hard work, and that they’ve fixed the bugs I’ve found so quickly. Reproducing and fixing distributed systems bugs is an especially challenging task, and it speaks to the skill of the entire Cassandra team.

DataStax has adapted some of these Jepsen tests for use in their internal testing process, and, like Basho, may use Jepsen directly to help test future releases. I’m optimistic that they’ll notify users that the transactional features are unsafe in the current release, and clarify their documentation and marketing. Again, there’s nothing technically wrong with many of the behaviors I’ve discussed above–they’re simply subtle, and deserve clear exposition so that users can interpret them correctly.

I’m looking forward to watching a good database improve.