Goal

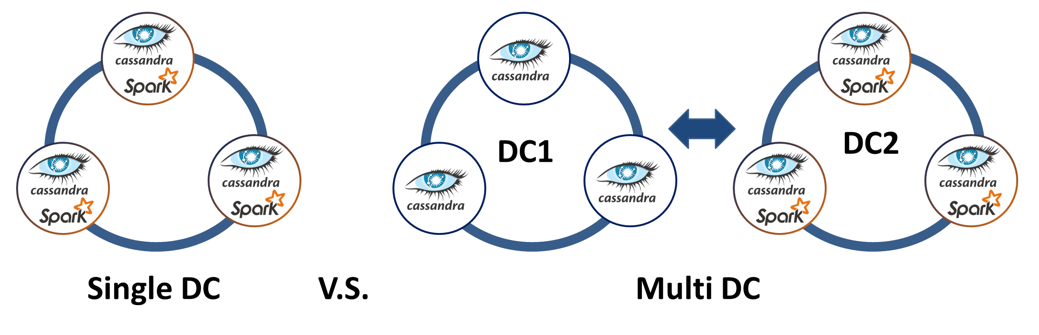

The aim of this benchmark is to compare performances between a one-data-center setting, where Spark and Cassandra are collocated, versus a two-data-center setting where Spark is running on the second data center. The idea behind a two-data-center setting is to use the first data-center (DC1) to serve Cassandra reads/writes, while using the second data-center (DC2) for Spark analytic purpose. This can be achieved by performing reads/writes on a node of DC1 with the consistency level set to LOCAL_QUORUM (or LOCAL_ONE). This setting ensures that writes will be acknowledge only by DC1 (enabling high throughput / low latency) while data are sent asynchronously to DC2. This separation of workload results in a few benefits:

- The first DC remains consistently responsive for operational reads/writes.

- The second DC can serve heavy analytic workload without interfering with the first DC.

- All the data becomes eventually consistent between the first and the second DC.

Benchmark setup

The single data center cluster was composed of three m4xl-400 nodes, each of them with Spark and Cassandra. The two data center clusters had its first data center (DC1) with three m4xl-400 nodes running Cassandra, the second data center was composed of three m4xl-400 nodes running Cassandra and Spark. In our offering, m4xl-400 nodes have 4 CPU, 16G of RAM, and 400GB of AWS EBS storage. Two separate stress boxes (one for each cluster) were created to run Cassandra stress and a custom Spark job. The stress boxes were connected via VPC peering, in the same AWS region that the clusters were running. Importantly, all the Cassandra stress and spark benchmark job were run at the same time against the two clusters. This is to ensure that each cluster had the same amount of time to perform background compaction and to recover their full AWS EBS credits when the EBS burst was used. Therefore, the comparison between the single DC cluster and the multi DC cluster performances are always meaningful. On the other hand, the raw performance number should be interpreted with caution, especially due to the drop of EBS performance once its burst credit is exhausted.

Benchmark

Initial loading

For each cluster, two sets of data (“data1” and “data2”) were created using the cassandra-stress tool. The command ran was:

cassandra-stress write n=400000000 no-warmup cl=LOCAL_QUORUM -rate threads=400 -schema replication\(strategy=NetworkTopologyStrategy,spark=3,AWS_VPC_US_EAST_1=3\) keyspace="data1" -node $IP

Note that:

- The number of records inserted (400 million) is high, resulting in about 120 GB of data stored on each node. This is to ensure that upon future read, the OS will not have the capacity to cache the sstables in RAM, as is observed when using smaller data sets. This is necessary to expose the underlying I/O performance.

- Although two data centers are specified, only AWS_VPC_US_EAST_1 is used for the single data center cluster.

- The consistency level is set to LOCAL_QUORUM. For the two data center clusters, the data will be written and acknowledged by DC1 (AWS_VPC_US_EAST_1) as the IP used for the stress command was the one of a node in DC1. In the background and asynchronously, the data is streamed to DC2. For both clusters LOCAL_QUORUM means that two out of the three nodes in the queried data center will need to respond for the operation to be successful.

- We also created a second data set named “data2”. “data1” will be used later for running a simple spark analytic job, while “data2” will be used for running a background read/write Cassandra stress command, which will result in an increase in its size. The size of “data1” being constant, it can be used to fairly compare the spark performance.

- Importantly, the performance measured under stress condition in this benchmark should not be considered as baseline performance to use in production. With Cassandra, it is critical to keep some resources free for background activities (large compaction, node repairs, backup, fail over recovery etc…)

The write performances were:

| Cassandra write performance | Single DC | Multi DC |

| Inserts rate | 27439 / s | 23141 / s |

The Single DC cluster has to asynchronously stream the data to its second data center, explaining an expected drop of write performances of about 15%.

Maximum read performance

After the initial loading of “data1” and “data2”, both the single DC and multi DC cluster were unused for a few hours, giving time for the pending compaction to complete. The baseline read performance was assessed with the following cassandra-stress command:

cassandra-stress read n=400000000 no-warmup cl=LOCAL_QUORUM -rate threads=500 -schema keyspace="data2" -node $IP

The read performances were:

| Cassandra read performance | Single DC | Multi DC |

| Read rate | 5283 / s | 5253 / s |

As can be expected, the read performance is effectively identical as only one data center is being used to serve the reads (Consistency level = LOCAL_QUORUM)

Maximum read/write performance

The baseline read/write performance was assessed with:

cassandra-stress mixed ratio\(write=1,read=3\) n=400000000 no-warmup cl=LOCAL_QUORUM -rate threads=500 -schema replication\(strategy=NetworkTopologyStrategy,spark=3,AWS_VPC_US_EAST_1=3\) keyspace="data2" -node $IP

The read / write benchmark is an important indication of peak performances in production as it combines both type of operations. The ratio between read and write is application specific, a ratio of 1 to 3 is somewhat a good representation of what is typically of many production scenarios. In the table below we also report the latency measurement as they are an important indication of performances.

| Single DC | Multi DC | ||

| Operation rate | 7071 / s | 7016 / s | |

| Latency (ms) | Mean | 64.3 | 66 |

| Median | 61.0 | 77.2 | |

| 95th percentile | 177.5 | 200.7 | |

| 99th percentile | 215.9 | 249.7 | |

| Latency when limiting read/write to half capacity: 3500 op/s (ms) | Mean | 3.1 | 3.2 |

| Median | 2.6 | 2.7 | |

| 95th percentile | 6.6 | 7.0 | |

| 99th percentile | 83.9 | 92.2 | |

| Latency when limiting read/write to a third of capacity: 2300 op/s (ms) | Mean | 2.7 | 2.8 |

| Median | 2.5 | 2.6 | |

| 95th percentile | 4.6 | 4.5 | |

| 99th percentile | 34.2 | 36.8 | |

In this scenario, both clusters perform identically in term of number of operations per seconds and latency. In the following sections, we will run a spark job without any background read /write, with a peak background read/write (7000 op/s), half (3500 op/s) and a third (2300 op/s).

Spark benchmark without background Cassandra activity.

As there is no standard spark-cassandra stress tool available at the moment, we used the following Spark application that we ran on both clusters. The Spark application uses the data written by cassandra-stress. The application is not particularly relevant, but it uses a combination of map and reduceByKey that are commonly employed in real life spark application.

val rdd1 = sc.cassandraTable("data1", "standard1") .map(r => (r.getString("key"), r.getString("C1"))) .map{ case(k, v) => (v.substring(5, 8), k.length())} val rdd2 = rdd1.reduceByKey(_+_) rdd2.collect().foreach(println)

The job is using the key and the “C1” column of the stress table “standard1”. The key is shortened to the 5 to 8 characters with a map call, allowing a reduce by key with 16^3=4096 possibilities. The reduceByKey is a simple yet commonly used spark rdd transformation that allows to group keys together while applying a reduce operation (in this case a simple addition). This is somewhat a similar example as the classic word count example. Importantly, this job will read the full data set.

| Spark performance, no background Cassandra activity. | Single DC | Multi DC |

| Spark time | 3829 s | 3857 s |

As can be expected, the performance are identical for the two clusters.

Spark benchmark: with maximum background Cassandra read/write.

The same spark job was run with a background Cassandra read/write stress command:

cassandra-stress mixed ratio\(write=1,read=3\) n=400000000 no-warmup cl=LOCAL_QUORUM -rate threads=500 -schema keyspace="data2" replication\(strategy=NetworkTopologyStrategy,spark=3,AWS_VPC_US_EAST_1=3\) -node $IP

| Spark performance, full read/write Cassandra activity. | Single DC | Multi DC |

| Spark time | 11528 s | 4894 s |

| Effective Cassandra operation rate | 2866 / s | 5630 / s |

This test result demonstrates the gain in having a separate DC for Spark activity. Note that a large quantity of read/writes against the single DC timed-out as Cassandra cannot keep up with serving simultaneously Spark read requests and Cassandra-stress read/write requests.

Spark benchmark: with half background Cassandra read/write.

The cassandra-stress command was used with a limit factor so that the number of cassandra operation would be limited to half of the capacity (3500 op/s):

cassandra-stress mixed ratio\(write=1,read=3\) n=400000000 no-warmup cl=LOCAL_QUORUM -rate threads=500 limit=3500/s -schema keyspace="data2" replication\(strategy=NetworkTopologyStrategy,spark=3,AWS_VPC_US_EAST_1=3\) -node $IP

The test result were:

| Spark performance, full read/write Cassandra activity. | Single DC | Multi DC |

| Spark time | 12914 s | 4919 s |

Note that this test was run shortly after the previous benchmark (with full read/write cassandra-stress), preventing the EBS volumes of both clusters to fully recover their burst credit. During this test, we still observed a large amount of Cassandra timeout on the single DC cluster.

Spark benchmark: with a third background Cassandra read/write.

The limit rate was set to a third of the baseline read/write performance which is about 2300 operation per seconds. Unlike in the previous test, the EBS volume had enough time to recover its full burst capacity credit.

cassandra-stress mixed ratio\(write=1,read=3\) n=400000000 no-warmup cl=LOCAL_QUORUM -rate threads=500 limit=2300/s -schema keyspace="data2" replication\(strategy=NetworkTopologyStrategy,spark=3,AWS_VPC_US_EAST_1=3\) -node $IP

| Spark performance, full read/write Cassandra activity. | Single DC | Multi DC |

| Spark time | 7877 s | 4572 s |

During this test, we again observed a large amount of Cassandra timeout on the single DC cluster.

Conclusion

Through this benchmark, we compared the performance between a single DC and a multi DC cluster when simultaneously running some Cassandra read/write operations and executing a Spark application. In order to perform a fair comparison, we ran every stress command at the same time on both clusters, ensuring that any protocol bias (file cached in memory, time to perform background Cassandra compaction, EBS burst recovery etc…) would apply to both clusters. The multi DC cluster was able to process the spark job up to 2.3 times faster than the single DC cluster when running cassandra-stress (without limiting the rate) in the background. Maybe more importantly, the muti DC cluster was able to process twice the rate of Cassandra operations per seconds when compared with the single DC cluster. This demonstrates that a multi DC topology ensure overall higher performance both for Cassandra and Spark, with more stability (the cassandra-stress ran on the single DC cluster resulted in a large number of timeout errors during read operations). The multi DC topology is therefore particularly relevant when running a large amount of spark analytic jobs on a cluster hosting mission critical data.