O slideshow foi denunciado.

Lambda at Weather Scale - Cassandra Summit 2015

![Attempt[0] Architecture

Operational

Analytics

Business

Analytics

Executive

Dashboards

Data

Discovery

Data

Science

3rd Part...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] Data Model

CREATE TABLE events (

timebucket bigint,

timestamp bigint,

eventtype varchar,

eventid varchar,

platf...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] Data Model

CREATE TABLE events (

timebucket bigint,

timestamp bigint,

eventtype varchar,

eventid varchar,

platf...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] Data Model

CREATE TABLE events (

timebucket bigint,

timestamp bigint,

eventtype varchar,

eventid varchar,

platf...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] Data Model

CREATE TABLE events (

timebucket bigint,

timestamp bigint,

eventtype varchar,

eventid varchar,

platf...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] tl;dr

● C* everywhere

● Streaming data via custom ingest process

● Kafka backed by RESTful service

● Batch data...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] tl;dr

● C* everywhere

● Streaming data via custom ingest process

● Kafka backed by RESTful service

● Batch data...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] Lessons

● Batch loading large data sets into C* is silly

● … and expensive

● … and using Informatica to do it i...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[0] Lessons

● Schema-less == bad:

○ Must parse JSON to extract key data

○ Expensive to analyze by event type

○ Cann...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[1] Architecture

Data Lake

Operational

Analytics

Business

Analytics

Executive

Dashboards

Data

Discovery

Data

Scienc...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[1] Data Model

● Each event type gets its own table

● Tables individually tuned based on workload

● Schema applied ...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[1] tl;dr

● Use C* for streaming data

○ Rolling time window (TTL depends on type)

○ Real-time access to events

○ Da...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[1] tl;dr

● Everything else in S3

○ Batch data loads (mostly logs)

○ Daily C* backups

○ Stored as Parquet

○ Cheap, ...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[1] tl;dr

● Kafka replaced by SQS:

○ Scalable & reliable

○ Already fronted by a RESTful interface

○ Nearly free to ...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[1] tl;dr

● STCS in lieu of DTCS (and LCS)

○ Because it’s bulletproof

○ Partitions spanning sstables is acceptable

...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

![Attempt[1] tl;dr

● STCS in lieu of DTCS (and LCS)

○ Because it’s bulletproof

○ Partitions spanning sstables is acceptable

...](https://pt.slideshare.net/rastrick/lambda-at-weather-scale-cassandra-summit-2015)

Próximos SlideShares

Carregando em…5

×

Sem downloads

Nenhuma nota no slide

- 1. Lambda at Weather Scale Robbie Strickland

- 2. Who Am I? Robbie Strickland Director of Engineering, Analytics rstrickland@weather.com @rs_atl

- 3. Who Am I? ● Contributor to C* community since 2010 ● DataStax MVP 2014/15 ● Author, Cassandra High Availability ● Founder, ATL Cassandra User Group

- 4. About TWC ● ~30 billion API requests per day ● ~120 million active mobile users ● #3 most active mobile user base ● ~360 PB of traffic daily ● Most weather data comes from us

- 5. Use Case ● Billions of events per day ○ Web/mobile beacons ○ Logs ○ Weather conditions + forecasts ○ etc. ● Keep data forever

- 6. Use Case ● Efficient batch + streaming analysis ● Self-serve data science ● BI / visualization tool support

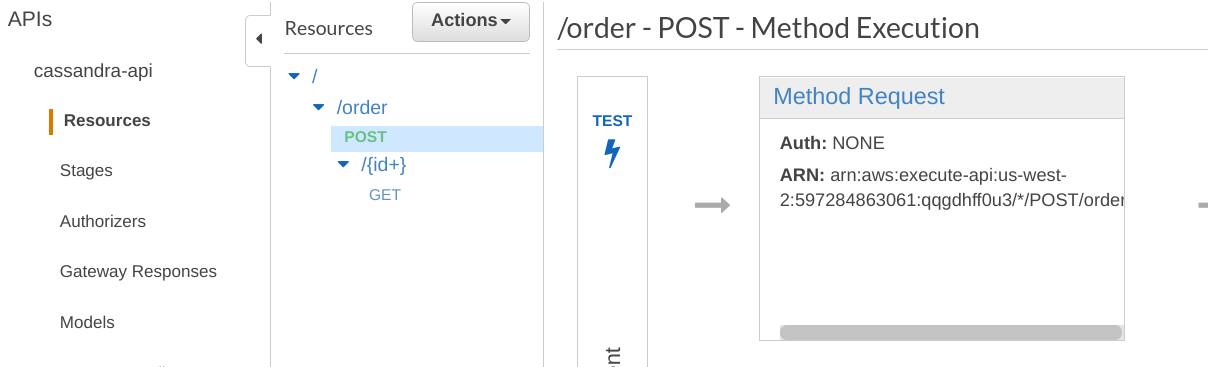

- 7. Architecture

- 8. Attempt[0] Architecture Operational Analytics Business Analytics Executive Dashboards Data Discovery Data Science 3rd Party System Integration Events 3rd Party Other DBs S3 Stream Processing Batch Sources Storage and Processing Consumers Data Access Kafka Streaming Custom Ingestion Pipeline ETL Streaming Sources RESTful Enqueue service SQL

- 9. Attempt[0] Data Model CREATE TABLE events ( timebucket bigint, timestamp bigint, eventtype varchar, eventid varchar, platform varchar, userid varchar, version int, appid varchar, useragent varchar, eventdata varchar, tags set<varchar>, devicedata map<varchar, varchar>, PRIMARY KEY ((timebucket, eventtype), timestamp, eventid) ) WITH CACHING = 'none' AND COMPACTION = { 'class' : 'DateTieredCompactionStrategy' };

- 10. Attempt[0] Data Model CREATE TABLE events ( timebucket bigint, timestamp bigint, eventtype varchar, eventid varchar, platform varchar, userid varchar, version int, appid varchar, useragent varchar, eventdata varchar, tags set<varchar>, devicedata map<varchar, varchar>, PRIMARY KEY ((timebucket, eventtype), timestamp, eventid) ) WITH CACHING = 'none' AND COMPACTION = { 'class' : 'DateTieredCompactionStrategy' }; Event payload == schema-less JSON

- 11. Attempt[0] Data Model CREATE TABLE events ( timebucket bigint, timestamp bigint, eventtype varchar, eventid varchar, platform varchar, userid varchar, version int, appid varchar, useragent varchar, eventdata varchar, tags set<varchar>, devicedata map<varchar, varchar>, PRIMARY KEY ((timebucket, eventtype), timestamp, eventid) ) WITH CACHING = 'none' AND COMPACTION = { 'class' : 'DateTieredCompactionStrategy' }; Partitioned by time bucket + type

- 12. Attempt[0] Data Model CREATE TABLE events ( timebucket bigint, timestamp bigint, eventtype varchar, eventid varchar, platform varchar, userid varchar, version int, appid varchar, useragent varchar, eventdata varchar, tags set<varchar>, devicedata map<varchar, varchar>, PRIMARY KEY ((timebucket, eventtype), timestamp, eventid) ) WITH CACHING = 'none' AND COMPACTION = { 'class' : 'DateTieredCompactionStrategy' }; Time-series data good fit for DTCS

- 13. Attempt[0] tl;dr ● C* everywhere ● Streaming data via custom ingest process ● Kafka backed by RESTful service ● Batch data via Informatica ● Spark SQL through ODBC ● Schema-less event payload ● Date-tiered compaction

- 14. Attempt[0] tl;dr ● C* everywhere ● Streaming data via custom ingest process ● Kafka backed by RESTful service ● Batch data via Informatica ● Spark SQL through ODBC ● Schema-less event payload ● Date-tiered compaction

- 15. Attempt[0] Lessons ● Batch loading large data sets into C* is silly ● … and expensive ● … and using Informatica to do it is SLOW ● Kafka + REST services == unnecessary ● No viable open source C* Hive driver ● DTCS is broken (see CASSANDRA-9666)

- 16. Attempt[0] Lessons ● Schema-less == bad: ○ Must parse JSON to extract key data ○ Expensive to analyze by event type ○ Cannot tune by event type

- 17. Attempt[1] Architecture Data Lake Operational Analytics Business Analytics Executive Dashboards Data Discovery Data Science 3rd Party System Integration Stream Processing Long Term Raw Storage Short Term Storage and Big Data Processing Consumers Amazon SQS Streaming Custom Ingestion Pipeline Events 3rd Party Other DBs S3 Batch Sources Streaming Sources ETL Data Access SQL

- 18. Attempt[1] Data Model ● Each event type gets its own table ● Tables individually tuned based on workload ● Schema applied at ingestion: ○ We’re reading everything anyway ○ Makes subsequent analysis much easier ○ Allows us to filter junk early

- 19. Attempt[1] tl;dr ● Use C* for streaming data ○ Rolling time window (TTL depends on type) ○ Real-time access to events ○ Data locality makes Spark jobs faster

- 20. Attempt[1] tl;dr ● Everything else in S3 ○ Batch data loads (mostly logs) ○ Daily C* backups ○ Stored as Parquet ○ Cheap, scalable long-term storage ○ Easy access from Spark ○ Easy to share internally & externally ○ Open source Hive support

- 21. Attempt[1] tl;dr ● Kafka replaced by SQS: ○ Scalable & reliable ○ Already fronted by a RESTful interface ○ Nearly free to operate (nothing to manage) ○ Robust security model ○ One queue per event type/platform ○ Built-in monitoring

- 22. Attempt[1] tl;dr ● STCS in lieu of DTCS (and LCS) ○ Because it’s bulletproof ○ Partitions spanning sstables is acceptable ○ Testing Time-Window compaction (thanks Jeff Jirsa)

- 23. Attempt[1] tl;dr ● STCS in lieu of DTCS (and LCS) ○ Because it’s bulletproof ○ Partitions spanning sstables is acceptable ○ Testing Time-Window compaction (thanks Jeff Jirsa)

- 24. Fine Print ● Use C* >= 2.1.8 ○ CASSANDRA-9637 - fixes Spark input split computation ○ CASSANDRA-9549 - fixes memory leak ○ CASSANDRA-9436 - exposes rpc/broadcast addresses for Spark/cloud environments ● Version incompatibilities abound (check sbt file for Spark-Cassandra connector)

- 25. Fine Print ● Two main Spark clusters: ○ Co-located with C* for heavy analysis ■ Predictable load ■ Efficient C* access ○ Self-serve in same DC but not co-located ■ Unpredictable load ■ Favors mining S3 data ■ Isolated from production jobs

- 26. Data Modeling

- 27. Partitioning ● Opposite strategy from “normal” C* modeling ○ Model for good parallelism ○ … not for single-partition queries ● Avoid shuffling for most cases ○ Shuffles occur when NOT grouping by partition key ○ Partition for your most common grouping

- 28. Secondary Indexes ● Useful for C*-level filtering ● Reduces Spark workload and RAM footprint ● Low cardinality is still the rule

- 29. Secondary Indexes (Client Access)

- 30. Secondary Indexes (with Spark)

- 31. Full-text Indexes ● Enabled via Stratio-Lucene custom index (https://github.com/Stratio/cassandra-lucene-index) ● Great for C*-side filters ● Same access pattern as secondary indexes

- 32. Full-text Indexes CREATE CUSTOM INDEX email_index on emails(lucene) USING 'com.stratio.cassandra.lucene.Index' WITH OPTIONS = { 'refresh_seconds':'1', 'schema': '{ fields: { id : {type : "integer"}, user : {type : "string"}, subject : {type : "text", analyzer : "english"}, body : {type : "text", analyzer : "english"}, time : {type : "date", pattern : "yyyy-MM-dd hh:mm:ss"} } }' };

- 33. Full-text Indexes SELECT * FROM emails WHERE lucene='{ filter : {type:"range", field:"time", lower:"2015-05-26 20:29:59"}, query : {type:"phrase", field:"subject", values:["test"]} }'; SELECT * FROM emails WHERE lucene='{ filter : {type:"range", field:"time", lower:"2015-05-26 18:29:59"}, query : {type:"fuzzy", field:"subject", value:"thingy", max_edits:1} }';

- 34. WIDE ROWS Caution:

- 35. Wide Rows ● It only takes one to ruin your day ● Monitor cfstats for max partition bytes ● Use toppartitions to find hot keys

- 36. Avoid Nulls ● Nulls are deletes ● Deletes create tombstones ● Don’t write nulls! ● Beware of nulls in prepared statements

- 37. Data Exploration

- 38. Data Warehouse Paradigm - Old Ingest Model Transform Design Visualize

- 39. Data Warehouse Paradigm - New Ingest Explore Analyze Deploy Visualize

- 40. Visualization ● Critical to understanding your data ● Reduced time to visualization ● … from >1 month to minutes (!!) ● Waterfall to agile

- 41. Zeppelin ● Open source Spark notebook ● Interpreters for Scala, Python, Spark SQL, CQL, Hive, Shell, & more ● Data visualizations ● Scheduled jobs

- 42. Zeppelin

- 43. Zeppelin

- 44. Zeppelin

- 45. Final Thoughts

- 46. Should I use DSE? ● Open source culture? ● On-staff C* expert(s)? ● Willingness to contribute/fix stuff? ● Moderate degree of risk is acceptable? ● Need/desire for latest features? ● Need/desire to control tool versions? ● Don’t have the budget for licensing?

- 47. We’re Hiring! Robbie Strickland rstrickland@weather.com

Painéis de recortes públicos que contêm este slide

Nenhum painel de recortes público que contém este slide

Selecionar outro painel de recortes

×

Parece que você já adicionou este slide ao painel

Criar painel de recortes