February 16, 2017 | Cassandra

One of the default Cassandra strategies for dealing with more sophisticated queries is to create CQL tables that hold the data in a structure matching the query itself (denormalization). Cassandra 3.0 introduces a new CQL feature, Materialized Views, which captures this concept as a first-class construct.

We decided to take a closer look.

Materialized Views are essentially standard CQL tables that are maintained automatically by the Cassandra server, as opposed to manually writing to many denormalized tables containing the same data, as was necessary in previous releases of Cassandra. At first glance this looks like a great feature: it automates a process that was previously done by hand, and the server takes responsibility for maintaining the various data structures.

For example, let’s suppose that we want to capture payment transaction information for a set of users. You can have the following structure as your base table which you would write the transactions to:

This table can be used to record transactions of users for each year, and is suitable for querying the transaction log of each of our users.

Let’s suppose there is a requirement for an administrative function that shows all the transactions for a given day.

CQL has been extended with the CREATE MATERIALIZED VIEW command, which can be used in the following manner:

Let’s insert some data:

As you would expect, you can then execute the following queries:

And:

The Materialized View is not a fundamentally special construct. Behind the scenes, Cassandra will create a “standard” table, and any mutation or access will go through the usual write and read paths.

If we look into the data directory for this keyspace, we should expect to find two separate subdirectories, containing SSTables for the base table and the Materialized View:

Let’s investigate the declaration of the Materialized View in a bit more detail:

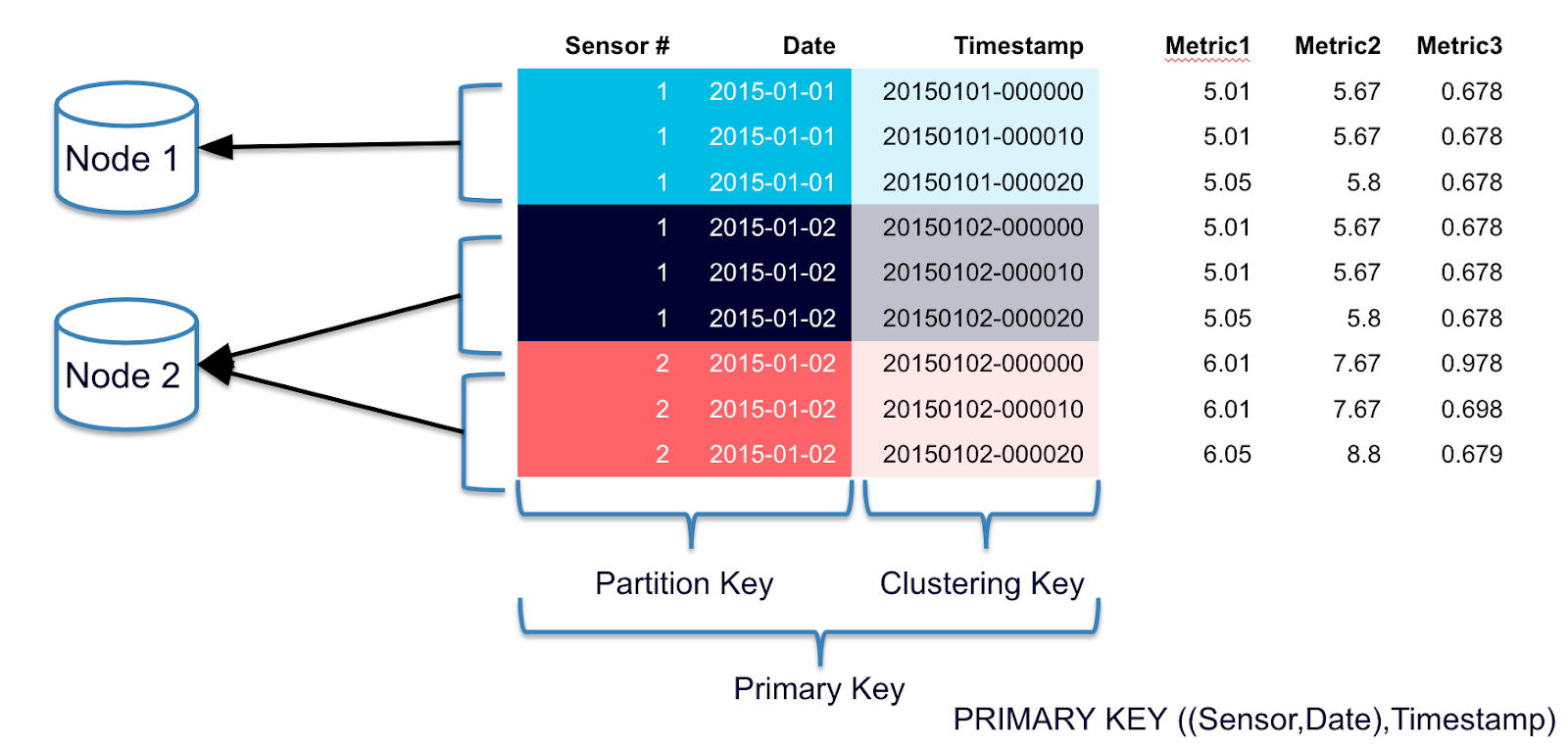

Note the PRIMARY KEY clause at the end of this statement. This is much like what you would expect from Cassandra data modeling: it defines the partition key and clustering columns for the Materialized View’s backing table. As such it should always be chosen carefully, and the usual best practices apply to it:

- Avoid unbounded partitions

- Avoid overly large partitions

- Choose your partition key in a way that distributes the data correctly, avoiding cluster hotspots (the partition key chosen above is not a good one as it leads to temporal hotspots)

Also note the IS NOT NULL restrictions on all the columns declared as part of the primary key. They ensure that no record can exist in the Materialized View with an incomplete primary key. This is currently a strict requirement when creating Materialized Views, and trying to omit these checks will result in an error: Primary key column 'year' is required to be filtered by 'IS NOT NULL'

In the current versions of Cassandra there are a number of limitations on the definition of Materialized Views.

A primary key of a Materialized View must contain all columns from the primary key of the base table

Any materialized view must map one CQL row from the base table to precisely one other row in the materialized view. In practice this means that all columns of the original primary key (partition key and clustering columns) must be represented in the materialized view; however, they can appear in any order and can define a different partitioning from that of the base table.

If you are accustomed to relational database systems, this may feel like an odd restriction. It actually makes sense if you consider how Cassandra manages the data in the Materialized View. The View is nothing more under the hood than another Cassandra table, updated via the usual mechanisms: when the base table is updated, an appropriate mutation is automatically generated and applied to the View.

If a single CQL row in the Materialized View could be the result of collapsing multiple base table rows, Cassandra would have no way of tracking the changes from all these base rows and representing them appropriately in the Materialized View (this is especially problematic on deletions of base rows).
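To make the deletion problem concrete, here is a small in-memory sketch in Python. It is not Cassandra code: the `NaiveView` class, the key functions and the column names (`card`, `userid`, `year`, `month`, `day`, `id`) are all assumptions made for illustration. It replays two inserts and one delete against a view keyed only by card, and against a view whose key carries the full (assumed) base primary key.

```python
# Illustrative in-memory model only; names and columns are assumptions.

def view_key_lossy(row):
    # Hypothetical view keyed only by card: several base rows share one view row.
    return (row["card"],)


def view_key_full(row):
    # View key that also carries the whole (assumed) base primary key.
    return (row["card"], row["userid"], row["year"],
            row["month"], row["day"], row["id"])


class NaiveView:
    """Applies exactly one view mutation per base mutation, nothing more."""

    def __init__(self, key_fn):
        self.key_fn = key_fn
        self.rows = {}

    def on_base_insert(self, row):
        self.rows[self.key_fn(row)] = dict(row)

    def on_base_delete(self, row):
        self.rows.pop(self.key_fn(row), None)


def replay(key_fn):
    """Insert two transactions on the same card, then delete the first one."""
    t1 = {"userid": "Bob", "year": 2017, "month": 2, "day": 6,
          "id": 1, "card": "1234", "amount": 10}
    t2 = dict(t1, id=2, amount=20)
    view = NaiveView(key_fn)
    view.on_base_insert(t1)
    view.on_base_insert(t2)
    view.on_base_delete(t1)  # t2 still exists in the base table
    return view.rows


if __name__ == "__main__":
    print("keyed by card only:", replay(view_key_lossy))
    print("keyed by full base key:", replay(view_key_full))
```

With the card-only key, the second insert overwrites the first and the delete then removes the only remaining view row, even though one transaction is still present in the base table. With the full key, each base row has its own view row and the delete touches only the right one.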

As a result you are not allowed to define a Materialized View like this:

This attempt will result in the following error: Cannot create Materialized View transactions_by_card without primary key columns from base cc_transactions (day,month,userid)

This may be somewhat surprising, since the ID column is a unique transaction identifier after all. However, this is additional knowledge that comes from the semantics of the data model, and Cassandra has no way of understanding, verifying or enforcing that it is actually true. As a developer you know more about the data being manipulated than it is possible to declare in the CQL models.

A primary key of a Materialized View can contain at most one other column

As established already, the full base primary key must be part of the primary key of the Materialized View. It is possible to add another column from the base table that was not part of the original primary key, but this is restricted to a single additional column.

Again, this restriction feels rather odd. In this case the explanation is much more subtle: in certain concurrent update cases, when both columns of the base table are manipulated at the same time, it is technically difficult to implement a solution on Cassandra’s side that guarantees no data (or deletions) are lost and that the Materialized Views stay consistent with the base table.
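The two key rules described so far can be written down as a small check. The Python sketch below is only a model of the rules as stated in this post, not Cassandra’s actual validation logic or its error messages. The function name `check_view_primary_key` and the base schema (`BASE_PK`, `BASE_COLUMNS`) are assumptions, with column names loosely inferred from the error messages quoted here.

```python
# Assumed base table layout, for illustration only.
BASE_PK = ["userid", "year", "month", "day", "id"]
BASE_COLUMNS = BASE_PK + ["card", "amount", "status"]


def check_view_primary_key(base_pk, base_columns, view_pk):
    """Return a list of rule violations; an empty list means the key is allowed."""
    problems = []

    unknown = [c for c in view_pk if c not in base_columns]
    if unknown:
        problems.append(f"columns not in base table: {unknown}")

    # Rule 1: every base primary key column must appear in the view key.
    missing = [c for c in base_pk if c not in view_pk]
    if missing:
        problems.append(f"missing base primary key columns: {missing}")

    # Rule 2: at most one column from outside the base primary key.
    extra = [c for c in view_pk if c in base_columns and c not in base_pk]
    if len(extra) > 1:
        problems.append(f"more than one non-primary-key column: {extra}")

    return problems


if __name__ == "__main__":
    print(check_view_primary_key(BASE_PK, BASE_COLUMNS, ["card", "year", "id"]))
    print(check_view_primary_key(BASE_PK, BASE_COLUMNS, ["status"] + BASE_PK))
    print(check_view_primary_key(BASE_PK, BASE_COLUMNS, ["status", "card"] + BASE_PK))
```

The order of the columns inside the view key is deliberately not checked, since the base key columns may appear in any order and may be partitioned differently from the base table.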

This restriction may be lifted in later releases, once the following tickets are resolved:

https://issues.apache.org/jira/browse/CASSANDRA-9928

https://issues.apache.org/jira/browse/CASSANDRA-10226

Advanced WHERE filtering criteria on columns that are not part of the base table’s primary key are only supported in Cassandra 3.10

Let’s suppose you want to create a View for “suspicious” transactions: those that have too large an amount associated with them. A possible way of implementing this is via a Materialized View with more complex filter criteria:

This works on Cassandra 3.10 (the latest release at the time of writing this blog), and produces the results you would expect:

After executing:

When we query:

However, on Cassandra 3.9 we get the error: Non-primary key columns cannot be restricted in the SELECT statement used for materialized view creation (got restrictions on: amount)

Maintaining the consistency between the base table and the associated Materialized Views comes with a cost. Since a Materialized View is effectively a Cassandra table, there is the obvious cost of writing to these tables. There is more to it though. Writing to any base table that has associated Materialized Views will result in the following:

- Locking of the entire partition

- Reading the current partition contents

- Calculating all the view mutations

- Creating a batch of the base mutation + the view mutations

- Executing all the changes

The first two steps ensure that a consistent state of the data is persisted across all Materialized Views: no two updates on the base table are allowed to interleave, so Cassandra is certain to read a consistent state of the full row and to generate any Materialized View updates based on it.

Creating a batch of the mutations is for atomicity: using Cassandra’s batching capabilities ensures that if the base table mutation is successful, all the views will eventually represent the correct state. In practice this adds a significant overhead to write operations. Because a read is executed before the write, the expected performance characteristics change quite dramatically (writes in Cassandra normally don’t require random disk I/O, but in this case they will).

A tracing session on a standard write with Consistency Level ONE would look like this:
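The sequence of steps can be sketched as a toy model in Python. This is only an illustration of the order of operations described above, not how Cassandra implements it: the `BaseTableWithViews` class, its dictionaries and the per-partition `threading.Lock` are assumptions, and the sketch reads only the affected row rather than the whole partition.

```python
import threading
from collections import defaultdict


class BaseTableWithViews:
    """Toy model of a base table whose writes also maintain its views."""

    def __init__(self, view_key_fns):
        # view_key_fns: view name -> function mapping a base row to the view key
        self.view_key_fns = view_key_fns
        self.base = {}
        self.views = {name: {} for name in view_key_fns}
        self.partition_locks = defaultdict(threading.Lock)
        self.reads_before_write = 0

    def write(self, partition_key, clustering_key, row):
        with self.partition_locks[partition_key]:        # 1. lock the partition
            primary_key = (partition_key, clustering_key)
            existing = self.base.get(primary_key)        # 2. read current state
            self.reads_before_write += 1
            batch = [("base", "put", primary_key, row)]
            for name, key_fn in self.view_key_fns.items():   # 3. view mutations
                new_key = key_fn(row)
                if existing is not None and key_fn(existing) != new_key:
                    batch.append((name, "delete", key_fn(existing), None))
                batch.append((name, "put", new_key, row))
            self._apply(batch)                           # 4 and 5. batch, execute
            return batch

    def _apply(self, batch):
        for target, op, key, row in batch:
            table = self.base if target == "base" else self.views[target]
            if op == "put":
                table[key] = row
            else:
                table.pop(key, None)


if __name__ == "__main__":
    # Hypothetical view keyed by date first, then the rest of the base key.
    table = BaseTableWithViews({
        "transactions_by_day": lambda r: (r["year"], r["month"], r["day"],
                                          r["userid"], r["id"]),
    })
    row = {"userid": "Bob", "year": 2017, "month": 2, "day": 6, "id": 1}
    for mutation in table.write(("Bob", 2017), (2, 6, 1), row):
        print(mutation)
    print("reads performed:", table.reads_before_write)
```

Even in this simplified form, every write pays for a lock and a read before anything is written, which is where the extra latency comes from.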

Executing the same insert with one Materialized View on the table results in the following trace:

As you can see from the traces, the additional cost on the writes is significant.

Bear in mind that this is not a fair comparison: we are comparing a single-table write with one that is effectively writing to two tables. The reason for including it is to demonstrate the difference in executing the same CQL write with and without a Materialized View.

In a realistic situation you would execute two writes on the client side, one to the base table and another to the Materialized View, or more likely a batch of two writes to ensure atomicity. According to DataStax performance tests, in such cases the built-in Materialized Views perform better than the manual denormalization (with batching), especially for single-row partitions.

Deletes and updates generally work the way you would expect. Given the following state:

If we execute

Then

Tombstones when updating

There are some unexpected cases worth keeping in mind. When updating a column that is made part of a Materialized View’s primary key, Cassandra will execute a DELETE and an INSERT statement to get the View into the correct state – thus resulting in a tombstone.

To demonstrate this, let’s suppose we want to be able to query transactions for a user by status:

Truncating the base table and executing:

After running nodetool flush, we can take a look at the SSTable of transactions_by_status:

Notice the tombstoned row for partition (“Bob”, “2017”, “PENDING”) – this is a result of the initial insert and subsequent update. This is because by updating status in the base table, we have effectively created a new row in the Materialized View, deleting the old one.
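The way tombstones pile up can be modelled in a few lines of Python. This is an in-memory illustration only, not a reproduction of the SSTable contents: the `TOMBSTONE` marker, the helper functions, the clustering columns and the sample rows are assumptions. The view partition key (userid, year, status) follows the partition shown above.

```python
TOMBSTONE = object()  # stand-in for a deletion marker kept in the view


def view_keys(row):
    """Split a base row into the assumed view partition and clustering keys."""
    partition = (row["userid"], row["year"], row["status"])
    clustering = (row["month"], row["day"], row["id"])
    return partition, clustering


def insert(view, row):
    partition, clustering = view_keys(row)
    view.setdefault(partition, {})[clustering] = dict(row)


def update_status(view, row, new_status):
    """Changing a view primary key column means a DELETE plus an INSERT."""
    old_partition, clustering = view_keys(row)
    new_row = dict(row, status=new_status)
    new_partition, _ = view_keys(new_row)
    view.setdefault(old_partition, {})[clustering] = TOMBSTONE  # DELETE
    view.setdefault(new_partition, {})[clustering] = new_row    # INSERT
    return new_row


def tombstones_in(view, partition):
    cells = view.get(partition, {}).values()
    return sum(1 for cell in cells if cell is TOMBSTONE)


def demo():
    """Insert three PENDING transactions, then complete each of them."""
    view = {}
    rows = [{"userid": "Bob", "year": 2017, "month": 2, "day": 6,
             "id": i, "status": "PENDING"} for i in range(3)]
    for row in rows:
        insert(view, row)
    for row in rows:
        update_status(view, row, "COMPLETED")
    return view


if __name__ == "__main__":
    print(tombstones_in(demo(), ("Bob", 2017, "PENDING")))
```

Every transaction that passes through the PENDING state leaves one marker behind in the same partition, so the number of tombstones there grows with the number of transactions for that user and year until compaction removes them.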

This particular data structure is strongly discouraged: it will result in having a lot of tombstones in the (“Bob”, “2017”, “PENDING”) partition and is prone to hitting the tombstone warning and failure thresholds. Even worse – it is not immediately obvious that you are generating tombstones.

Instead of using a Materialized View, a SASI index is a much better choice for this particular case.

It is also possible to create a Materialized View over a table that already has data. In such cases Cassandra will create a View that has all the necessary data. As this might take a significant amount of time depending on the amount of data held in the base table, it is possible to track status via the system.built_views metadata table.

Materialized Views sound like a great feature. Pushing the responsibility for maintaining query denormalizations to the database is highly desirable and reduces the complexity of applications using Cassandra.

However, the current implementation has many shortcomings that make it difficult to use in most cases. Most importantly, the serious restrictions on the possible primary keys of Materialized Views limit their usefulness a great deal. In addition, any View needs a well-chosen partition key, and extra consideration must be given to unexpected tombstone generation in the Materialized Views.

There is also a definite performance hit compared to simple writes. If an application is sensitive to write latency and throughput, consider the options carefully (Materialized Views, manual denormalisation) and do a proper performance testing exercise before making a choice.

To summarise: Materialized Views are an addition to CQL that is, in its current form, suitable for only a few use cases, namely when write throughput is not a concern and the data model can be created within the functional limitations.
