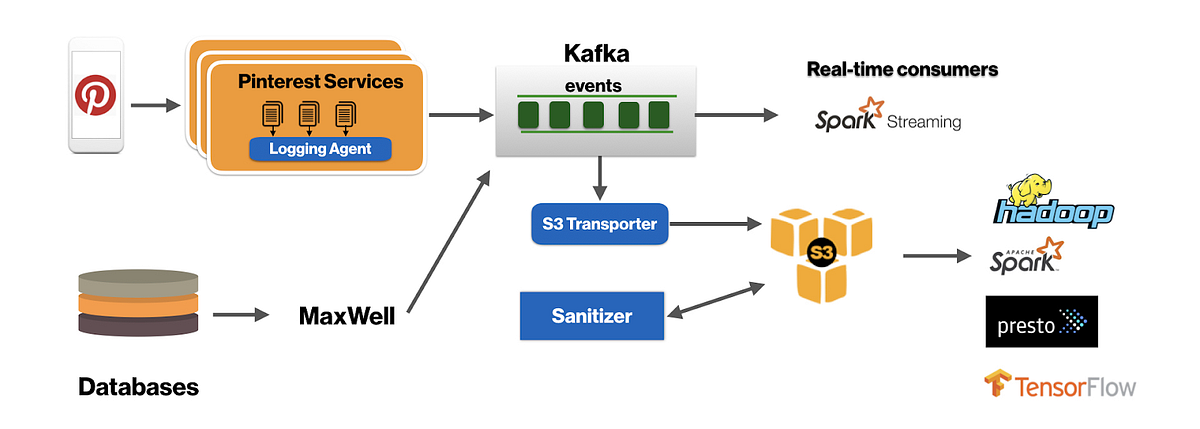

Apache Cassandra is an open-source, non-relational (NoSQL) database. It is massively scalable and designed to handle large amounts of data across multiple servers (here, Amazon EC2 instances) while providing high availability. In this blog, we shall replicate data across nodes running in multiple Availability Zones (AZs) to ensure reliability and fault tolerance. We will also learn how to ensure that the data remains intact even when an entire AZ goes down.

The initial setup consists of a six-node Cassandra cluster, with two nodes (EC2 instances) in each of AZ-1a, AZ-1b and AZ-1c.

Initial Setup:

Cassandra Cluster with six nodes.

- AZ-1a: us-east-1a: Node 1, Node 2

- AZ-1b: us-east-1b: Node 3, Node 4

- AZ-1c: us-east-1c: Node 5, Node 6

Next, we have to make changes in the Cassandra configuration file. The cassandra.yaml file is the main configuration file for Cassandra. It controls how nodes are configured within a cluster, including inter-node communication, data partitioning and replica placement. The key setting we need to define in this context is the snitch (the endpoint_snitch key). A snitch tells Cassandra which region and Availability Zone each node in the cluster belongs to. It provides information about the network topology so that requests are routed efficiently. Additionally, Cassandra's replication strategies place replicas based on the information provided by the snitch. There are different types of snitches available, but in this case we shall use Ec2Snitch, as all of the nodes in our cluster are within a single region.
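To make the snitch's role concrete, here is a minimal Python sketch of how an Availability Zone name can be turned into a datacenter and a rack. It assumes the legacy EC2 naming scheme, which matches the names used later in this post (datacenter us-east, racks 1a, 1b and 1c); newer Cassandra versions may name them differently. The function name `legacy_ec2_location` is hypothetical and is not part of Cassandra.

```python
def legacy_ec2_location(availability_zone: str) -> tuple[str, str]:
    """Return (datacenter, rack) for an EC2 Availability Zone name.

    Assumption: mirrors the legacy Ec2Snitch naming that this post relies on.
    """
    rack = availability_zone.split("-")[-1]   # "us-east-1a" -> "1a"
    region = availability_zone[:-1]           # "us-east-1a" -> "us-east-1"
    if region.endswith("1"):
        # Legacy naming drops the trailing "-1" from the region.
        region = availability_zone[:-3]       # "us-east-1a" -> "us-east"
    return region, rack


for az in ("us-east-1a", "us-east-1b", "us-east-1c"):
    print(az, "->", legacy_ec2_location(az))
```

All six nodes therefore report the same datacenter but three different racks, which is the information the replication strategy needs.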

We shall set the snitch value as shown below:

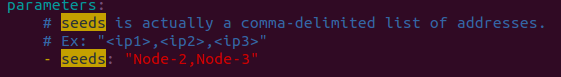

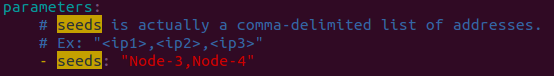

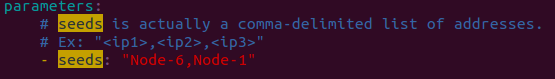

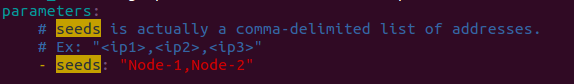

Also, since we are using multiple nodes, we need to group them into a cluster. We shall do so by defining the seeds key in the configuration file (cassandra.yaml). Cassandra nodes use seeds to find each other and learn the topology of the ring. The seed list is used during startup to discover the cluster.

For instance:

Node 1: Set the seeds value as shown below:

Similarly on the other nodes:

Node 2:

Node 3:

Node 4:

Node 5:

Node 6:
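The per-node values are not reproduced in the text above. As a rough illustration only, the sketch below renders the seed_provider block of cassandra.yaml from a list of seed addresses. The IP addresses are hypothetical, and picking one seed per AZ is an assumption rather than something this post states; `render_seed_provider` is an illustrative helper, not a Cassandra tool.

```python
def render_seed_provider(seed_ips: list[str]) -> str:
    """Render the seed_provider section of cassandra.yaml as text."""
    seeds = ",".join(seed_ips)  # Cassandra expects a comma-separated string
    return (
        "seed_provider:\n"
        "    - class_name: org.apache.cassandra.locator.SimpleSeedProvider\n"
        "      parameters:\n"
        f'          - seeds: "{seeds}"\n'
    )


# Hypothetical private IPs, one per AZ; replace with your own nodes.
print(render_seed_provider(["10.0.1.10", "10.0.2.10", "10.0.3.10"]))
```

The same seed list would normally be used on every node so that all of them discover the same cluster.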

Cassandra nodes use this list of hosts to find each other and learn the topology of the ring. The nodetool utility is a command-line interface for managing a cluster. We shall check the status of the cluster using nodetool as shown below:

The Owns field above indicates the percentage of data owned by each node. As we can see, it is empty because no keyspaces (databases) have been created yet. So, let us go ahead and create a sample keyspace. We shall create it with a replication strategy and a replication factor. The replication strategy determines the nodes on which replicas are placed, and the replication factor is the total number of replicas across the cluster. We shall use NetworkTopologyStrategy since our cluster is deployed across multiple Availability Zones. NetworkTopologyStrategy tries to place replicas on distinct racks (AZs here), because nodes in the same rack or AZ often fail at the same time due to power, cooling or network issues.
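The rack-aware placement described above can be sketched in a few lines of Python. This is a simplified model, not Cassandra's implementation: it assumes one token per node and uses MD5 as a stand-in hash, whereas a real cluster typically uses a different partitioner and many virtual nodes. The node names and the `replicas_for` helper are hypothetical.

```python
import hashlib
from bisect import bisect_right

# Node -> rack, following the six-node layout of this post.
NODES = {"node1": "1a", "node2": "1a", "node3": "1b",
         "node4": "1b", "node5": "1c", "node6": "1c"}


def token(value: str) -> int:
    """Stand-in hash that maps a name or key to a position on the ring."""
    return int(hashlib.md5(value.encode()).hexdigest(), 16)


RING = sorted((token(name), name) for name in NODES)
TOKENS = [t for t, _ in RING]


def replicas_for(key: str, rf: int = 3) -> list[str]:
    """Walk the ring clockwise from the key, preferring unseen racks."""
    start = bisect_right(TOKENS, token(key))
    ordered = [RING[(start + i) % len(RING)][1] for i in range(len(RING))]
    chosen, racks_used = [], set()
    for node in ordered:                 # first pass: one node per new rack
        if len(chosen) < rf and NODES[node] not in racks_used:
            chosen.append(node)
            racks_used.add(NODES[node])
    for node in ordered:                 # second pass: only if racks < rf
        if len(chosen) < rf and node not in chosen:
            chosen.append(node)
    return chosen


for key in ("alice", "bob", "carol"):
    nodes = replicas_for(key)
    print(key, nodes, sorted(NODES[n] for n in nodes))
```

With three racks and a replication factor of 3, every key in this model ends up with exactly one replica in each rack.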

Let us set the replication factor to 3 for our “first” keyspace:

The above CQL command creates a keyspace (database) named ‘first’ with the NetworkTopologyStrategy class and 3 replicas in the us-east datacenter (in this case, one replica in each of the racks/AZs 1a, 1b and 1c). Cassandra ships with a command-line shell called the Cassandra Query Language Shell (cqlsh), which acts as an interface for users to communicate with it. Using cqlsh, you can execute queries written in Cassandra Query Language (CQL).
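For reference, a statement matching that description can be assembled as follows. This is a reconstruction from the description in this post, not a copy of the original command, and `create_keyspace_cql` is a hypothetical helper; the resulting string is what you would paste into cqlsh.

```python
def create_keyspace_cql(name: str, replicas_per_dc: dict[str, int]) -> str:
    """Build a CREATE KEYSPACE statement that uses NetworkTopologyStrategy."""
    dc_options = ", ".join(
        f"'{dc}': {count}" for dc, count in replicas_per_dc.items()
    )
    return (
        f"CREATE KEYSPACE {name} WITH replication = "
        f"{{'class': 'NetworkTopologyStrategy', {dc_options}}};"
    )


# Datacenter name 'us-east' and replication factor 3 come from this post.
print(create_keyspace_cql("first", {"us-east": 3}))
# prints: CREATE KEYSPACE first WITH replication = {'class': 'NetworkTopologyStrategy', 'us-east': 3};
```

The datacenter name in the statement has to match the name reported by the snitch, otherwise no replicas are placed in it.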

Next, we shall create a table named user and load it with 5 records for testing.

Now, let us insert some records into this table:

Now that our keyspace contains data, let us check the effective ownership:

As we can see here, the Owns field is no longer empty after defining the keyspace. It indicates the percentage of data owned by each node.

Let us perform some tests to make sure the data was replicated intact across multiple Availability Zones.

Test 1:

- Node 1 was stopped.

- Connection was made to the Cluster on remaining nodes and records were read from the table user.

- All records were intact.

- Node 1 was started.

- On Node 1, ‘nodetool -h hostname_of_Node1 repair first’ was run.

- Connection was made to the Cluster on Node 1 and records were read from the table user.

- All records were intact.

Test 2:

- Node 1 and Node 2 were stopped (a scenario in which an entire AZ, i.e. us-east-1a, goes down).

- Connection was made to the Cluster on remaining nodes in the other AZs (us-east-1b, us-east-1c) and records were read from the table user.

- All records were intact.

- Node 1 and Node 2 were started.

- ‘nodetool -h hostname_of_Node1 repair first’ was run on Node 1.

- ‘nodetool -h hostname_of_Node2 repair first’ was run on Node 2.

- Connection was made to the Cluster on Node 1 and Node 2 and records were read from the table user.

- All records were intact.

Similar tests were done by shutting down the nodes in the us-east-1b and us-east-1c AZs to check that the records stayed intact even when an entire Availability Zone went down. From the above tests, it is clear that a 6-node Cassandra cluster spread across three Availability Zones, with a minimum replication factor of 3 (one replica in each of the 3 AZs), tolerates the loss of one whole Availability Zone, and this is the setup we recommend. This strategy will also help in case of disaster recovery.
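The reasoning behind this recommendation can be checked with a small, self-contained Python sketch. It enumerates replica sets for the six-node layout and counts how many replicas survive in the worst case when one AZ is lost. The node names are hypothetical, and the comparison with a placement that ignores AZs is an added illustration, not one of the tests run above.

```python
from itertools import combinations

NODE_AZ = {"node1": "us-east-1a", "node2": "us-east-1a",
           "node3": "us-east-1b", "node4": "us-east-1b",
           "node5": "us-east-1c", "node6": "us-east-1c"}


def worst_case_live(replica_sets) -> int:
    """Fewest replicas left alive over every replica set and every AZ outage."""
    return min(
        sum(1 for node in replicas if NODE_AZ[node] != failed_az)
        for replicas in replica_sets
        for failed_az in set(NODE_AZ.values())
    )


def quorum(rf: int) -> int:
    """Replicas needed for a majority of rf copies."""
    return rf // 2 + 1


# Any 3 distinct nodes, ignoring which AZ they are in.
az_unaware = list(combinations(NODE_AZ, 3))
# Only the sets with one replica in each AZ, as in this post.
az_aware = [r for r in az_unaware if len({NODE_AZ[n] for n in r}) == 3]

print(worst_case_live(az_aware))    # prints 2
print(worst_case_live(az_unaware))  # prints 1
print(quorum(3))                    # prints 2
```

With one replica per AZ, two of the three copies always survive an AZ outage, which is still a majority of the replicas. Without AZ awareness, a single outage could leave only one copy.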

Stay tuned for more blogs!!