Some clients have asked us to change num_tokens as their requirements change.

For example, a lower num_tokens value is recommended when using features such as DSE Search.

The most important thing during this process is that the cluster stays up, and is healthy and fast. Anything we do needs to be deliberate and safe, as we have production traffic flowing through.

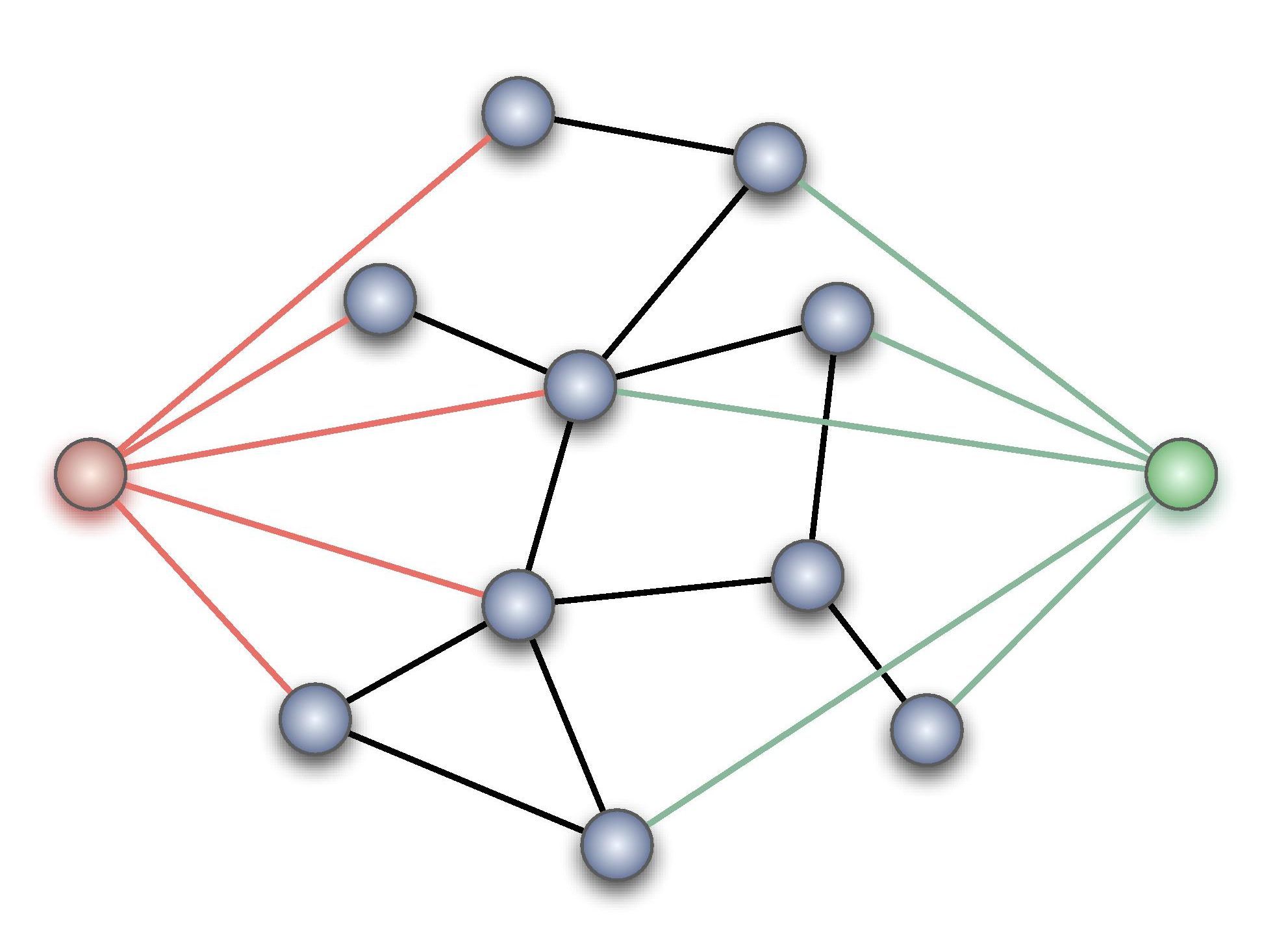

The process involves adding a new DC with a different num_tokens value, decommissioning the old DCs one at a time, and letting Cassandra's automatic mechanisms distribute the existing data onto the new nodes.

The procedure below assumes that you have two datacenters, DC1 and DC2.

1. Run repair to keep data consistent across the cluster

Make sure to run a full repair with nodetool repair. More detail about repairs can be found here. This ensures that all data is propagated from the datacenter which is being decommissioned.
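As a rough sketch of this step, the loop below runs a full repair on each node in turn. The host names are placeholders, SSH access to every node is an assumption, and `RUNNER` is a hypothetical variable introduced here only so that the loop can be dry-run with `echo`. On versions where incremental repair is the default, `--full` requests a full repair.

```shell
# Hypothetical helper: run a full repair on each host, one at a time.
# RUNNER defaults to ssh; set RUNNER=echo to print the commands instead.
repair_nodes() {
  for host in "$@"; do
    "${RUNNER:-ssh}" "$host" nodetool repair --full || return 1
  done
}

# Example (placeholder host names):
# repair_nodes dc1-node1 dc1-node2 dc2-node1
```

Running the nodes sequentially keeps the repair load predictable, at the cost of a longer total run time.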

2. Add new datacenter DC3 and decommission old datacenter DC1

Step 1: Download and install the same Cassandra version as the other nodes in the cluster, but do not start it.

| How to stop Cassandra |
|---|
| Note: Do not stop any node in DC1 until DC3 has been added. If you installed from the Debian package, Cassandra starts automatically, so you must stop the node and clear its data. |

Step 2: Clear the data from the default directories once the node is down.

| sudo rm -rf /var/lib/cassandra/* |
|---|

Step 3: Configure the new nodes with settings that match the other nodes in the cluster.

The following properties should be set by comparing against the existing nodes.

cassandra.yaml:

- seeds: Should include nodes from a live DC, because the new nodes have to stream data from them.

- endpoint_snitch: Keep it the same as on the nodes in the live DCs.

- cluster_name: Must be the same as on the nodes in the live DCs.

- num_tokens: Number of vnodes required.

- initial_token: Make sure this is commented out.

Set the local parameters below:

- auto_bootstrap: false

- listen_address: Local to the node

- rpc_address: Local to the node

- data_file_directories: Local to the node

- saved_caches_directory: Local to the node

- commitlog_directory: Local to the node

cassandra-rackdc.properties: Set the parameters for the new datacenter and rack:

- dc: “dc name”

- rack: “rack name”

Adjust the configuration files below as needed:

cassandra-env.sh

logback.xml

jvm.options
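Before starting a new node, it can help to sanity-check the settings that matter most for this procedure. The function below is only a sketch: `check_new_node_yaml` is a hypothetical name, the file path and the token count in the usage line are assumptions, and the checks use simple line matching rather than a real YAML parser.

```shell
# Hypothetical pre-start check for a new node's cassandra.yaml.
# Usage: check_new_node_yaml /etc/cassandra/cassandra.yaml 16
check_new_node_yaml() {
  yaml="$1"; want_tokens="$2"; rc=0
  # num_tokens must match the vnode count chosen for the new DC.
  grep -Eq "^num_tokens:[[:space:]]*${want_tokens}[[:space:]]*$" "$yaml" \
    || { echo "num_tokens is not ${want_tokens}"; rc=1; }
  # initial_token must stay commented out.
  if grep -Eq "^[[:space:]]*initial_token:" "$yaml"; then
    echo "initial_token is not commented out"; rc=1
  fi
  # auto_bootstrap must be false so the node joins empty and waits for rebuild.
  grep -Eq "^auto_bootstrap:[[:space:]]*false" "$yaml" \
    || { echo "auto_bootstrap is not false"; rc=1; }
  return "$rc"
}
```

The function prints one line per problem found and returns a non-zero status if any check fails, so it can gate the start of the service in a script.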

Step 4: Start Cassandra on each node, one by one.

Step 5: Now that all nodes are up and running, alter the keyspaces to set the replication factor (number of replicas) for the new datacenter as well.

| ALTER KEYSPACE Keyspace_name WITH REPLICATION = {'class' : 'NetworkTopologyStrategy', 'dc1' : 3, 'dc2' : 3, 'dc3' : 3}; |
|---|
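When several keyspaces need the same change, the statement can be generated rather than typed by hand. The sketch below assumes `cqlsh` can reach the cluster from the current host. `alter_keyspace_cql` and the keyspace names are hypothetical. Datacenter names are case-sensitive and must match what `nodetool status` reports.

```shell
# Hypothetical helper: print an ALTER KEYSPACE statement.
# Usage: alter_keyspace_cql <keyspace> <dc>:<rf> [<dc>:<rf> ...]
alter_keyspace_cql() {
  ks="$1"; shift
  map="'class': 'NetworkTopologyStrategy'"
  for pair in "$@"; do
    # Split each dc:rf pair into the DC name and its replication factor.
    map="${map}, '${pair%%:*}': ${pair##*:}"
  done
  printf 'ALTER KEYSPACE %s WITH REPLICATION = {%s};\n' "$ks" "$map"
}

# Example: apply to each application keyspace (names are placeholders).
# for ks in app_ks1 app_ks2; do
#   cqlsh -e "$(alter_keyspace_cql "$ks" dc1:3 dc2:3 dc3:3)"
# done
```

Printing the statement first makes it easy to review the replication map before anything is executed.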

Step 6: Finally, now that the nodes are up and empty, we should run “nodetool rebuild” on each node to stream data from the existing datacenter.

| nodetool rebuild "Existing DC Name" |
|---|
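One way to run the rebuild across the new datacenter is a simple loop over its nodes. This is a sketch under assumptions: the host names are placeholders, SSH access is assumed, and `RUNNER` is a hypothetical variable that allows a dry run with `echo`.

```shell
# Hypothetical helper: rebuild each new node from an existing datacenter.
# Usage: rebuild_nodes <source_dc> <host> [<host> ...]
rebuild_nodes() {
  src_dc="$1"; shift
  for host in "$@"; do
    # nodetool rebuild streams this node's data from the named source DC.
    "${RUNNER:-ssh}" "$host" nodetool rebuild -- "$src_dc" || return 1
  done
}

# Example (placeholder names):
# rebuild_nodes dc2 dc3-node1 dc3-node2 dc3-node3
```

While a rebuild is running, `nodetool netstats` on the node being rebuilt shows the streaming progress.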

Step 7: Once the process is complete, remove “auto_bootstrap: false” from each cassandra.yaml, or set it to true.

| auto_bootstrap: true |
|---|

Decommission DC1:

Now that we have added DC3 into a cluster, it’s time to decommission DC1. However, before decommissioning the datacenter in a production environment, the first step should be to prevent the client from connecting to it and ensure reads or writes do not query this datacenter.

Step 1: Prevent clients from communicating with DC1

- First of all, ensure that the clients point to a datacenter that will remain in the cluster.

- Configure DCAwareRoundRobinPolicy with a remaining datacenter as the local DC, so that no requests are routed to DC1.

- Change the QUORUM consistency level to LOCAL_QUORUM, and ONE to LOCAL_ONE.

Step 2: Alter the keyspaces so that they no longer have replicas in the DC being decommissioned.

| ALTER KEYSPACE Keyspace_name WITH REPLICATION = {'class' : 'NetworkTopologyStrategy', 'dc2' : 3, 'dc3' : 3}; |
|---|

Step 3: Decommission each node using nodetool decommission.

| nodetool decommission |
|---|
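To confirm that the decommission has finished, the output of `nodetool status` can be filtered for the old datacenter. The helper below is a sketch. `nodes_in_dc` is a hypothetical name, and it relies on the usual `nodetool status` layout, where each datacenter starts with a `Datacenter:` line and each node line begins with a two-letter status and state code.

```shell
# Hypothetical helper: list the addresses nodetool status reports for one DC.
# Reads `nodetool status` output on stdin.
nodes_in_dc() {
  awk -v dc="$1" '
    # Track which datacenter section we are currently in.
    $1 == "Datacenter:" { current = $2; next }
    # Node lines start with a status/state code such as UN, UL, or DN.
    current == dc && $1 ~ /^[UD][NLJM]$/ { print $2 }
  '
}

# Example: once every node has been decommissioned, this prints nothing.
# nodetool status | nodes_in_dc DC1
```

An empty result for the old datacenter name suggests that no node from it is still part of the ring.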

Step 4: After all nodes are decommissioned, remove all data from the data, saved caches, and commitlog directories to reclaim disk space.

| sudo rm -rf "Data_directory" "Saved_cache_directory" "Commitlog_directory" |
|---|

Step 5: Finally, stop Cassandra as described in Step 1.

Step 6: Decommission each node in DC2 by following the above procedure.

3. Add new DC DC4 and decommission old DC2

Hopefully, this blog post will help you understand the procedure for changing the number of vnodes on a live cluster. Keep in mind that the time taken by bootstrapping, rebuilding, and decommissioning depends on the data size.