This post is authored by Ujwala Kini

Introduction

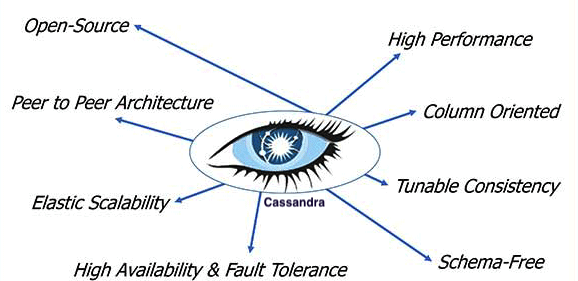

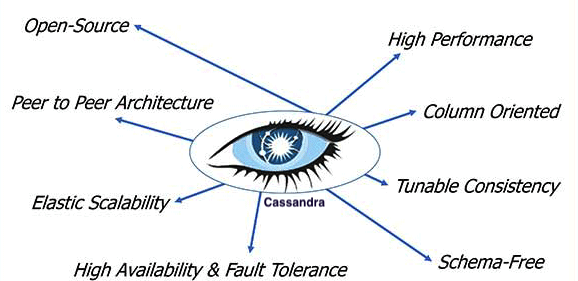

Cassandra is a NoSQL distributed database used widely in the industry because it provides availability and high scalability without compromising performance. In any product, the quality of the security measures is crucial. You expect integrity and confidentiality of data and operations, along with protection from security breaches, man-in-the-middle attacks, and unauthorized access. Encryption keeps your data safe and confidential as it is sent over the internet. Cassandra provides a mechanism to secure communication between a client machine and a database cluster, and between nodes within a cluster. Enabling encryption ensures that data in flight is not compromised and is transferred securely. This article looks at Cassandra internode encryption and how you can enable it without data loss.

Challenges in achieving Internode Encryption without data loss

For Cassandra version 3.x.x, unlike the client-to-node encryption configuration, the internode encryption configuration doesn’t have an optional flag that, when set to true, supports both encrypted and unencrypted connections. Such a flag would have let us support both kinds of connection during the transition: once all the nodes in the cluster were configured for encrypted connections, the unencrypted channel could be turned off. That would allow internode encryption to be set up across all the nodes without any data loss.

Because we don’t have that flexibility, we looked for an alternative approach to set up internode encryption without any data loss. We describe our approach in this article.

Cluster details and configuration

Cluster info

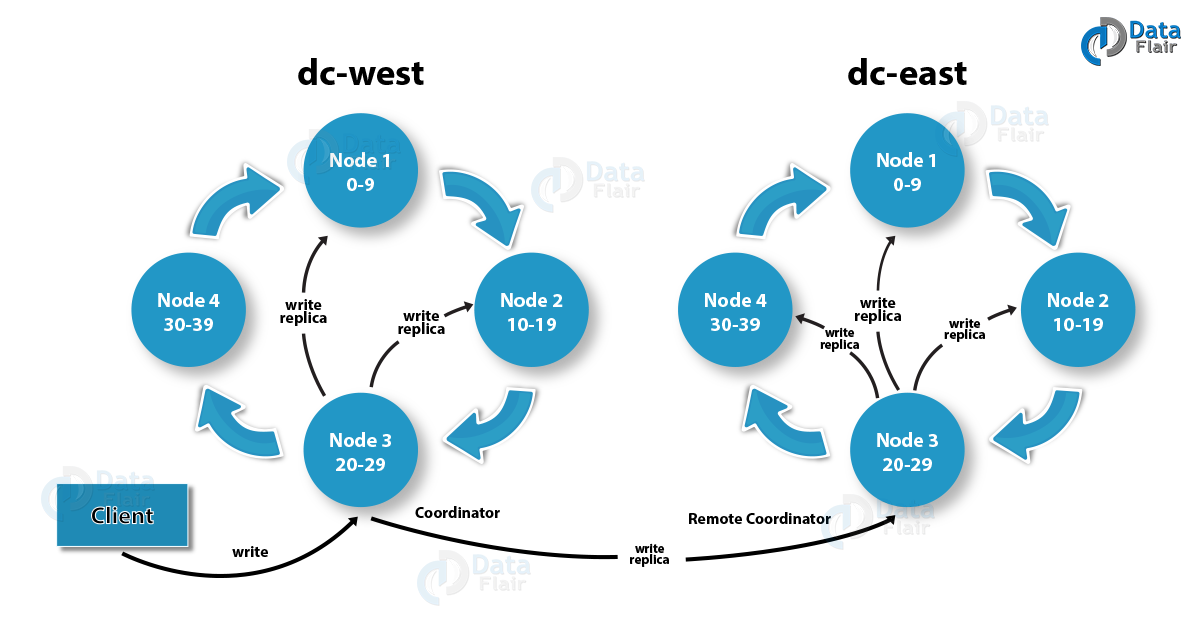

We have two data centers using NetworkTopologyStrategy, each containing 4 nodes: 2 seeds and 2 members. The data is replicated across all the nodes with a replication factor of 4. With NetworkTopologyStrategy, replicas are set for each data center separately. NetworkTopologyStrategy places replicas in the clockwise direction in the ring until it reaches the first node in another rack.

Network topology diagram by DataFlair

Java KeyStore and TrustStore

We set up the required Java KeyStore and TrustStore using OpenSSL and Keytool. We used the PKCS12 format for all the certificate stores.

A KeyStore is a repository of security certificates (either authorization certificates or public key certificates) plus the corresponding private keys, used, for instance, in TLS encryption. Each Cassandra node presented the certificate from this KeyStore while communicating with other nodes over TLS.

    openssl pkcs12 -export -password env:<keystore_pass> -chain -CAfile <ca.pem> -in <certificate.pem> -inkey <private_key.pem> -out <keystore_name> -name <alias_name>

A TrustStore is used to store certificates from certificate authorities (CAs) that verify the certificate presented by the server in a TLS connection. Each Cassandra node used this TrustStore to verify the certificate presented by the other nodes while communicating over TLS.

    keytool -importcert -noprompt -v -alias <alias_name> -keystore <truststore_name> -file <ca.pem> -storepass <truststore_pass> -storetype pkcs12
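Before pointing Cassandra at the new stores, it can help to confirm that they contain the entries you expect. The following is a minimal sketch, assuming `keytool` is on the PATH; the function names `list_store` and `has_alias` are hypothetical helpers, and the paths, passwords, and alias are placeholders you supply.

```shell
#!/bin/sh
# Sketch only: inspect the PKCS12 stores built with the commands above.
# list_store and has_alias are illustrative helper names, not part of any tool.

# Print every entry in a PKCS12 store (aliases, entry types, fingerprints).
list_store() {
    keytool -list -v -keystore "$1" -storepass "$2" -storetype pkcs12
}

# Succeed only if the store holds an entry under the given alias.
has_alias() {
    keytool -list -keystore "$1" -storepass "$2" \
        -storetype pkcs12 -alias "$3" >/dev/null 2>&1
}
```

For example, `has_alias <keystore_name> <keystore_pass> <alias_name> && echo present` checks for the alias used when the KeyStore was exported.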

Internode Encryption Configuration

The settings for managing internode encryption are found in cassandra.yaml in the server_encryption_options section. To enable internode encryption, we changed the internode_encryption setting from its default value of none to one of: rack, dc, all.

    server_encryption_options:
        internode_encryption: all
        keystore: <keystore_path>
        keystore_password: <keystore_pass>
        truststore: <truststore_path>
        truststore_password: <truststore_pass>
        store_type: PKCS12
        require_client_auth: true
        require_endpoint_verification: true
        # More advanced defaults below:
        # protocol: TLS
        # algorithm: SunX509
        # cipher_suites: [TLS_RSA_WITH_AES_128_CBC_SHA]
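When working across several nodes, it is easy to lose track of which value each node currently has on disk. As a rough sketch, a small helper can read the setting back from the file; `get_internode_encryption` is a hypothetical name, and the helper assumes the value is written unquoted on a single line, as in the configuration above.

```shell
#!/bin/sh
# Sketch only: print the internode_encryption value from a cassandra.yaml file.
# Commented-out lines are skipped because their first field starts with '#'.
get_internode_encryption() {
    awk '$1 == "internode_encryption:" { print $2; exit }' "$1"
}
```

Calling `get_internode_encryption <path_to_cassandra.yaml>` prints the configured value, such as none or all. Note that this reflects the file, not the running process: a node keeps its old behavior until it is restarted.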

Our approaches and best practices

Approach 1 – didn’t work

We started by enabling internode encryption on one of the seeds in the data center. The encrypted seed was not able to communicate with the other, unencrypted nodes to initialize the data, so it booted as a fresh database and the existing data was lost.

Approach 2 – didn’t work

We configured all 4 nodes in one of the data centers with the KeyStore and TrustStore required for successful internode encryption, but with internode_encryption set to ‘none’. We started all the nodes in that data center. The nodes were able to communicate with each other over the non-TLS channel because the encryption was off, and they were able to successfully initialize the data, which was available in the instance.

Next, we manually set the internode_encryption to ‘all’ in all 4 nodes in the same data center. We restarted one of the seeds. In this scenario, even though the encrypted seed was not able to communicate with other unencrypted nodes, the seed booted up successfully without any data loss because the data was already available in the instance. We restarted the remaining 3 nodes in the data center, and all the nodes booted up successfully.

Since we had not set up KeyStore and TrustStore in the other data center, the nodes failed to boot up in the second data center.

Approach 3 – success!

We configured all 8 nodes in both the data centers with the KeyStore and TrustStore required for successful internode encryption, but with internode_encryption set to ‘none’. We started all the nodes in both the data centers. The nodes were able to communicate with each other over the non-TLS channel because the encryption was off, and they were able to successfully initialize the data, which was available in the instance.
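Approach 2 failed because one data center had no stores in place, so a pre-flight check on every node before switching the setting seems worthwhile. The sketch below is one way to do that, under the assumption that cassandra.yaml lists the stores with absolute paths; `stores_staged` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: succeed if the keystore and truststore named in the
# server_encryption_options section of cassandra.yaml exist and are readable.
# Assumes absolute, unquoted paths; relative paths resolve against the
# current directory here, which may differ from how Cassandra resolves them.
stores_staged() {
    yaml_file=$1
    for key in keystore truststore; do
        # Only look inside server_encryption_options, because
        # client_encryption_options uses the same key names.
        path=$(awk -v k="$key:" '
            /^server_encryption_options:/ { in_section = 1; next }
            /^[^[:space:]#]/              { in_section = 0 }
            in_section && $1 == k         { print $2; exit }
        ' "$yaml_file")
        if [ -z "$path" ] || [ ! -r "$path" ]; then
            echo "missing $key: ${path:-<not set>}" >&2
            return 1
        fi
    done
}
```

Running `stores_staged <path_to_cassandra.yaml>` on each of the 8 nodes while internode_encryption is still ‘none’ gives a quick signal that the node is ready for the switch.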

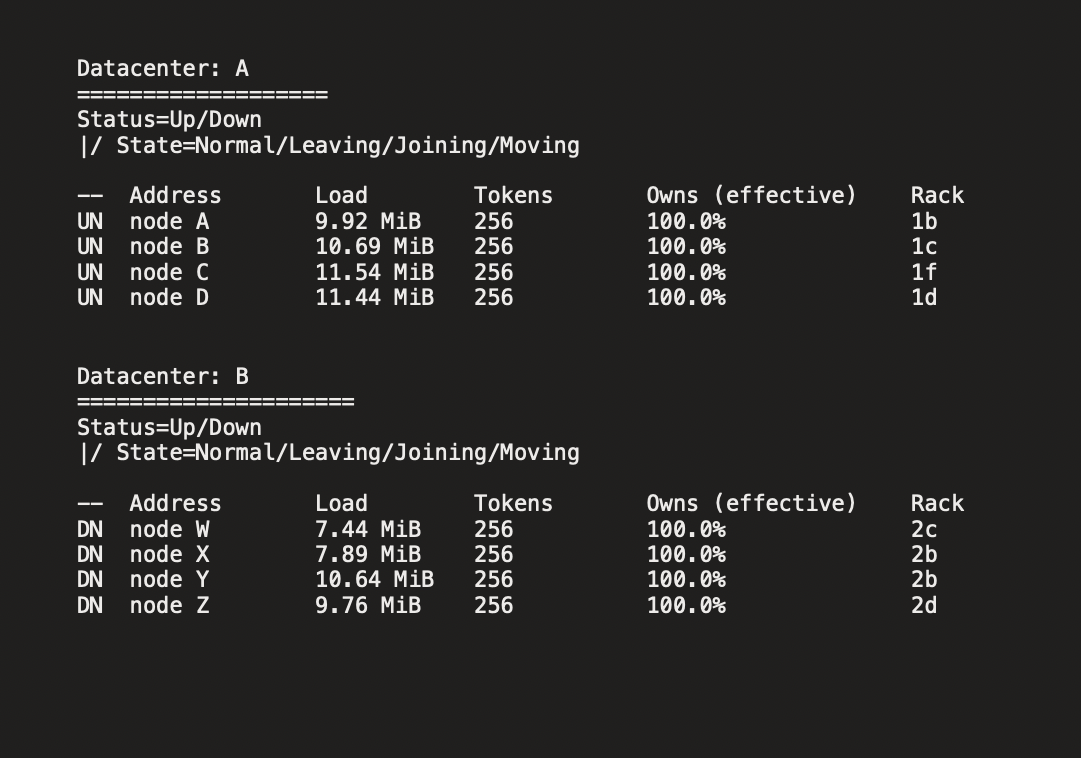

Nodetool status:

We started with data center A and routed all the traffic to data center B (the serving region):

- On all 4 nodes in data center A, we manually set the internode_encryption in cassandra.yaml to ‘all’ and didn’t restart any of the nodes yet.

- We stopped both the members and one of the seeds and restarted the other seed. Even though the seed was not able to communicate with other nodes, it booted up successfully without any data loss because the data was already available in the instance.

- We started the second seed. This seed successfully communicated with the previous seed over TLS and had its data initialized.

- We started the 2 members. Both the members successfully communicated with the seeds over TLS and had their data initialized.

- We ran nodetool repair on all 4 nodes in data center A.
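The first step in the list above, changing the setting on every node, can be scripted rather than edited by hand. This is a minimal sketch, not the exact procedure we ran: `set_internode_encryption` is a hypothetical helper, and it assumes the setting appears once, uncommented, in the file.

```shell
#!/bin/sh
# Sketch only: rewrite the internode_encryption value in place,
# keeping the previous file as <file>.bak.
set_internode_encryption() {
    yaml_file=$1
    mode=$2
    # Accept only the values Cassandra recognizes for this setting.
    case "$mode" in
        none|rack|dc|all) ;;
        *) echo "unsupported mode: $mode" >&2; return 1 ;;
    esac
    sed -i.bak -E \
        "s/^([[:space:]]*internode_encryption:[[:space:]]*).*/\1$mode/" \
        "$yaml_file"
}
```

After running `set_internode_encryption <path_to_cassandra.yaml> all` on all 4 nodes, the order of the remaining steps is what matters: stop the members and one seed, restart the other seed, start the second seed, start the members, then run `nodetool repair` on each node. How you stop and start Cassandra depends on how it is installed, so those commands are left out here.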

The nodetool status showed all the nodes in data center A as Up & Normal, while the nodes in data center B showed as Down, which was expected because the internode encryption was not yet enabled in data center B and therefore:

– The nodes in data center A couldn’t communicate with the nodes in data center B.

– The incoming new data was stored in data center B (the serving region) and was not replicated to data center A.
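To spot this state without reading the full table, the status output can be filtered for nodes that are not Up & Normal. The following is a small sketch that relies on the two-letter status/state code at the start of each node line in `nodetool status` output; `nodes_not_up_normal` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch only: read `nodetool status` output on stdin and print the address
# of every node whose status/state code is anything other than UN.
# The first letter is U (up) or D (down); the second is N, L, J, or M
# (normal, leaving, joining, moving).
nodes_not_up_normal() {
    awk '$1 ~ /^[UD][NLJM]$/ && $1 != "UN" { print $2 }'
}
```

Usage would be `nodetool status | nodes_not_up_normal`. At this stage, run from a node in data center A, we would expect it to list the 4 nodes of data center B.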

Nodetool status:

Next, on data center B, we routed all the traffic to data center A (the serving region):

Traffic was routed to data center A, but the nodes in data center B couldn’t communicate with the nodes in data center A yet, so the data newly stored in data center B was not available in data center A, and we noticed data inconsistency. Once encryption was configured in both the data centers, the data was replicated across the nodes.

- On all 4 nodes in data center B, we manually set the internode_encryption in cassandra.yaml to ‘all’ and didn’t restart any of the nodes yet.

- We stopped both the members and one of the seeds, and restarted the other seed. Because internode encryption was enabled in the data center A, this seed successfully communicated with the seeds in data center A over TLS and had its data initialized.

- We started the second seed. This node successfully communicated with the seed in the same data center, as well as with the seeds in data center A over TLS and had its data initialized.

- We started the two members. Both the members successfully communicated with the seeds over TLS and had their data initialized.

- We ran nodetool repair on all 4 nodes in data center B.

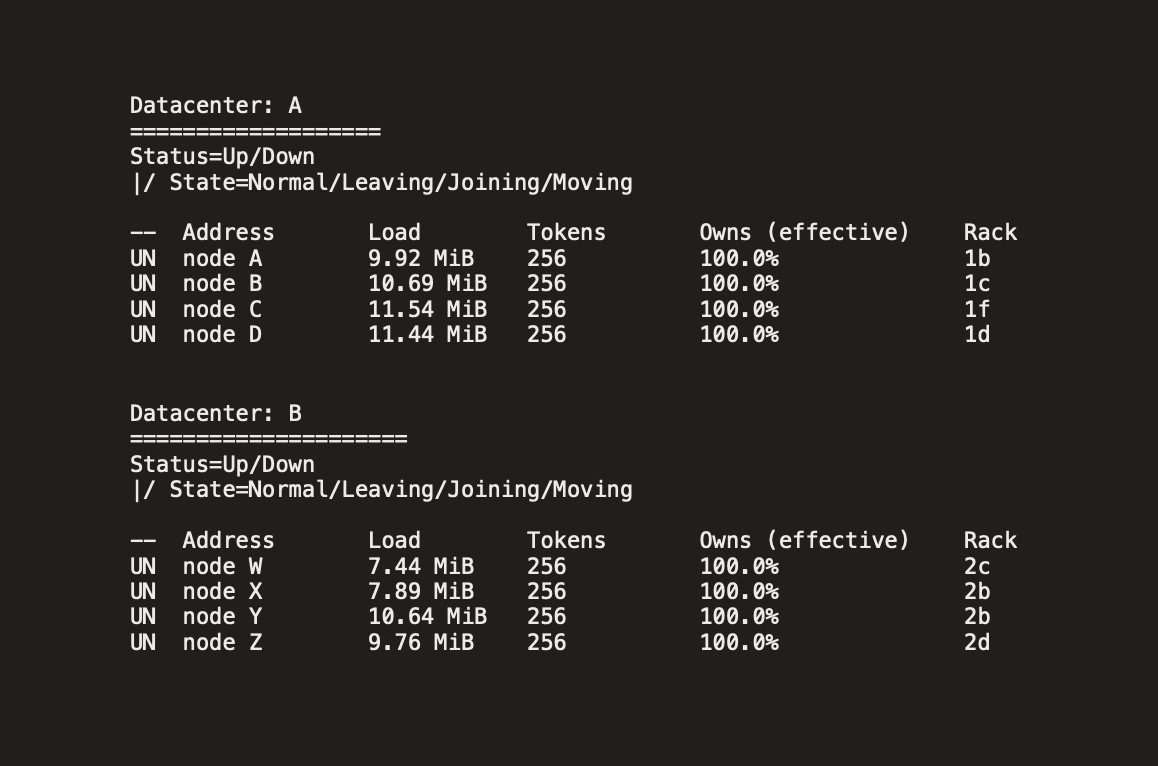

At this point, all the nodes in both the data centers were configured with internode encryption, the nodes successfully communicated with each other over TLS, and the nodetool status in both the data centers showed all the nodes as Up & Normal. The data was replicated across all the nodes, and we no longer noticed the data inconsistency.
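Besides nodetool status, you can probe a node's internode port directly to confirm that it answers with TLS. This is a hedged sketch using `openssl s_client`; `check_internode_tls` is a hypothetical helper, and the port is passed in because it depends on your configuration (in Cassandra 3.x the encrypted internode port is ssl_storage_port, which defaults to 7001). Because require_client_auth is true in our configuration, the probe presents a client certificate and key in PEM form.

```shell
#!/bin/sh
# Sketch only: attempt a TLS handshake against a node's internode port.
# Arguments: host, port, CA file, client certificate, client private key.
check_internode_tls() {
    # Closing stdin makes s_client exit once the handshake has finished.
    openssl s_client -connect "$1:$2" -CAfile "$3" \
        -cert "$4" -key "$5" </dev/null
}
```

For example, `check_internode_tls <node_address> 7001 <ca.pem> <certificate.pem> <private_key.pem>` prints the certificate chain the node presents, and the `Verify return code` line in the output shows whether that chain validated against the CA.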

Nodetool status:

Conclusion

We chose not to wait for an optional flag in the server_encryption_options configuration (cassandra.yaml), which may or may not be available in the next Cassandra version, and tried different approaches instead. As a result, we found a way to turn on internode encryption for all the nodes in the cluster that worked for us. We followed the same procedure for our production cluster and successfully turned on the encryption without any data loss.