
Cassandra Backups and Restorations Using Ansible (Joshua Wickman, Knewton) | C* Summit 2016

Not called out directly:

Run once

Ignore errors

AZ loss problem removed if each AZ has a complete copy of the data

4) `nice` is used for low impact

6) Bucket lifecycle policies are also used. Separate process is for higher granularity.

Hostnames:

We use these in S3 paths as a unique source identifier. May not be needed depending on implementation.

Impact: `nice`

Automation: cron, Tower, etc.

Consistency: data agrees to within C* internals

Integrity: no corruption induced by restore

Time: ~few hours

Filtered keyspaces:

Peers

Local

NOT schema!

Highlight box:

Minimum metadata collected

Combination of old & new config settings:

Critical: stored in S3

Non-critical: use what’s in repo

Approach assumes:

Snapshot being restored is recent

Config changes are rare

Could store more config details, up to entire cassandra.yaml

Quorum loss threshold:

For RF=3 and same # nodes in each AZ: 9 total nodes

- 1. Cassandra backups and restorations using Ansible. Dr. Joshua Wickman, Database Engineer, Knewton

- 2. Relevant technologies
  - AWS infrastructure
  - Deployment and configuration management with Ansible
    - Ansible is built on:
      - Python
      - YAML
      - SSH
      - Jinja2 templating
    - Agentless: less complexity

- 3. Ansible playbook sample

Sample playbook:

    ---
    - hosts: < host group specification >
      serial: 1
      pre_tasks:
        - name: ask for human confirmation
          local_action:
            module: pause
            prompt: Confirm action on {{ play_hosts | length }} hosts?
          run_once: yes
          tags:
            - always
            - hostcount
        < more setup tasks >
      roles:
        - role: base
        - role: cassandra-install
        - role: cassandra-configure
      post_tasks:
        - name: wait to make sure cassandra is up
          wait_for:
            host: '{{ inventory_hostname }}'
            port: 9160
            delay: "{{ pause_time | default(15) }}"
            timeout: "{{ listen_timeout | default(120) }}"
          ignore_errors: yes
        < more post-startup tasks >
    - name: install and configure alerts
      include: monitoring.yml
    < more plays >

Callouts on the slide:

  - A single “play” (each top-level `- hosts:` entry)
  - One host at a time (`serial: 1`; default: all in parallel)
  - Can execute on local or remote host (`local_action`)
  - Tags allow task filtering (`tags`)
  - Roles define complex, repeatable rule sets (`roles`)
  - Built-in variables (`play_hosts`, `inventory_hostname`)
  - Template with default (`default(15)`, `default(120)`)
  - Import other playbooks (`include: monitoring.yml`)

Sample command:

    ansible-playbook path/to/sample_playbook.yml -i host_file -e "listen_timeout=30"

- 4. Knewton’s Cassandra deployment
  - Running on AWS instances in a VPC
  - Ansible repo contains:
    - Dynamic host inventory
    - Configuration details for Cassandra nodes
      - Config file templates (cassandra.yaml, etc.)
      - Variable defaults
    - Roles and playbooks for Cassandra node operations:
      - Create / provision new nodes
      - Rolling restart a cluster
      - Upgrade a cluster
      - Backups and restores

- 5. Backups for disaster recovery
  - Data loss
  - Data corruption
  - AZ/rack loss
  - Data center loss

- 6. But that’s not all... Restored backups are also useful for:
  - Benchmarking
  - Data warehousing
  - Batch jobs
  - Load testing
  - Corruption testing
  - Tracking down incident causes

- 7. Backups: Those sound like a good idea. I can get those for you, no sweat!

- 8. Backups: requirements
  - Simple to use
  - Centralized, yet distributed
  - Low impact
  - Built with restores in mind
  Callouts: “Easy with Ansible”; “Obvious, but super important to get right!”

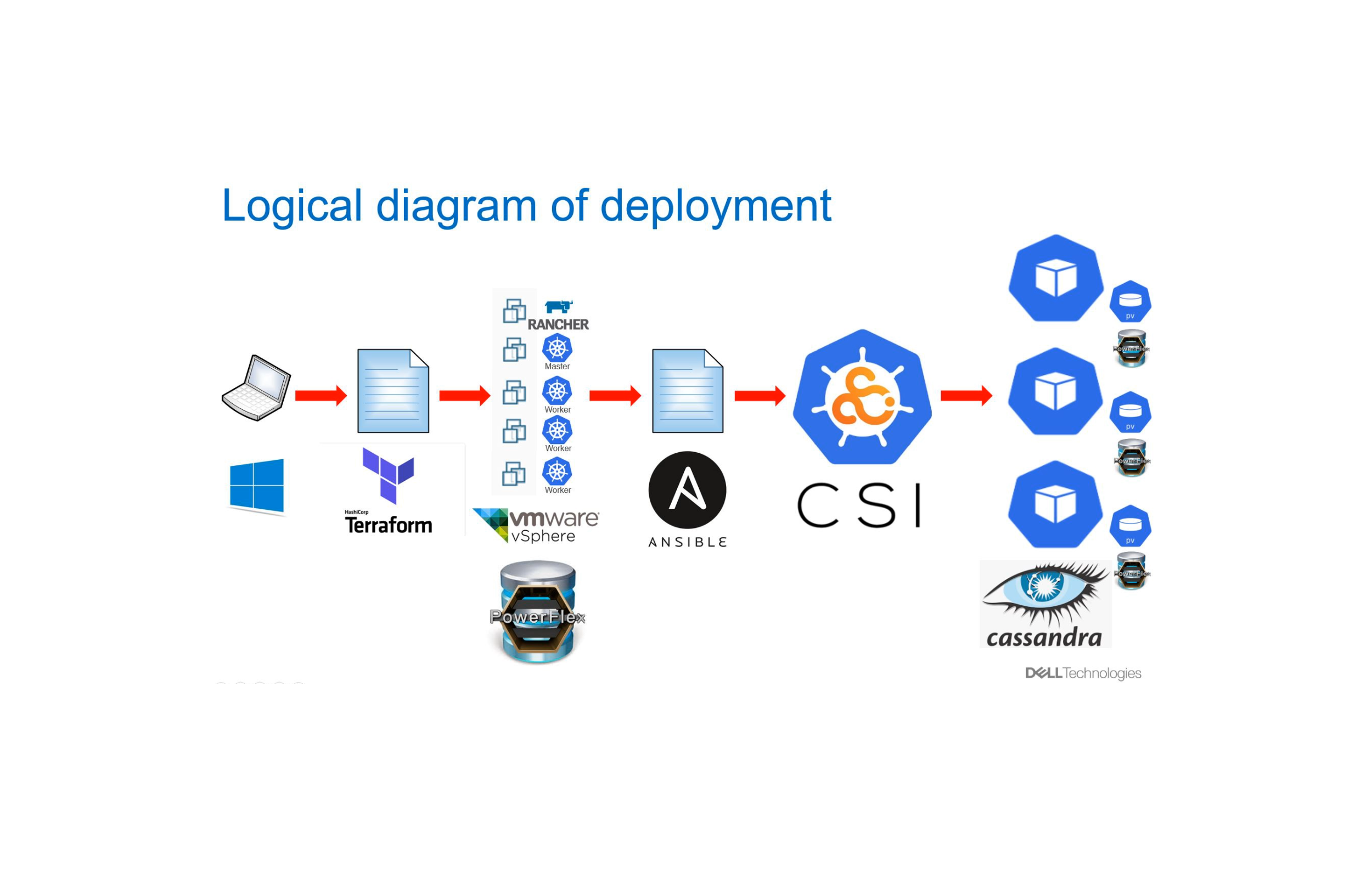

- 9. Backup playbook
  1. Ansible run initiated
  2. Commands sent to each Cassandra node over SSH
  3. `nodetool snapshot` on each node
  4. Snapshot uploaded to S3 via AWS CLI
  5. Metadata gathered centrally by Ansible and uploaded to S3
  6. Backup retention policies enforced by separate process
  Diagram labels: Ansible, SSH, Cassandra cluster, AWS CLI, AWS S3, Retention enforcement
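The per-node part of the steps above (snapshot, then upload) might look roughly like the following. This is only a sketch: the bucket name, the `<cluster>/<snapshot_id>/<hostname>/...` key layout, and the helper names are assumptions for illustration, not the layout Knewton actually uses. The functions only build command lines; they do not run anything. `nice` is applied to the upload, as mentioned in the speaker notes.

```python
import posixpath


def snapshot_command(snapshot_id):
    """Command for step 3: tag the snapshot with the backup's snapshot ID."""
    return ["nodetool", "snapshot", "-t", snapshot_id]


def s3_prefix(bucket, cluster, snapshot_id, hostname):
    """Hypothetical S3 layout; the hostname serves as the unique source identifier."""
    return f"s3://{bucket}/{cluster}/{snapshot_id}/{hostname}"


def upload_command(data_dir, keyspace, table_dir, snapshot_id, prefix):
    """Command for step 4: upload one table's snapshot directory at low priority."""
    # Cassandra keeps snapshots under <data_dir>/<keyspace>/<table_dir>/snapshots/<tag>.
    source = posixpath.join(data_dir, keyspace, table_dir, "snapshots", snapshot_id)
    dest = f"{prefix}/{keyspace}/{table_dir}/"
    return ["nice", "-n", "19", "aws", "s3", "sync", source, dest]
```

In an Ansible run, commands like these would be issued on every node over SSH, with the same snapshot ID passed to all of them so the result is a clusterwide snapshot.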

- 10. Backup metadata

Sample metadata:

    {
      "ips": [
        "123.45.67.0",
        "123.45.67.1",
        "123.45.67.2"
      ],
      "ts": "2016-09-01T01:23:45.987654",
      "version": "2.1",
      "tokens": {
        "1a": [
          { "tokens": [...], "hostname": "sample-0" }
        ],
        "1c": [
          { "tokens": [...], "hostname": "sample-2" },
          ...
        ]
      }
    }

What the fields are for:

  - IP list for cluster history / backup source tracking
  - Needed for restores:
    - Cassandra version (SSTable compatibility)
    - Token ranges (for partitioner)
    - AZ mapping (more on this later)
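A manifest with the shape shown on this slide could be assembled centrally along these lines. It is a minimal sketch: the input format (a list of per-node dicts with `ip`, `hostname`, `az`, and `tokens`) and the function name `build_metadata` are assumptions; how the values are collected from each node is left out.

```python
import json
from datetime import datetime


def build_metadata(nodes, version, started_at):
    """Group per-node token lists by AZ and add the cluster-level fields."""
    tokens_by_az = {}
    for node in nodes:
        tokens_by_az.setdefault(node["az"], []).append(
            {"tokens": node["tokens"], "hostname": node["hostname"]}
        )
    return {
        "ips": [node["ip"] for node in nodes],
        "ts": started_at.isoformat(),  # start of backup
        "version": version,
        "tokens": tokens_by_az,
    }


if __name__ == "__main__":
    example_nodes = [
        {"ip": "123.45.67.0", "hostname": "sample-0", "az": "1a", "tokens": [-100, 200]},
        {"ip": "123.45.67.2", "hostname": "sample-2", "az": "1c", "tokens": [-50, 300]},
    ]
    started = datetime(2016, 9, 1, 1, 23, 45, 987654)
    print(json.dumps(build_metadata(example_nodes, "2.1", started), indent=2))
```

Grouping tokens by AZ at backup time means the restore does not have to rediscover the rack layout of a cluster that may no longer exist.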

- 11. Backups: results
  - Simple and predictable
  - Clusterwide snapshots
  - Low impact
  - Automation-ready
  Everything’s good! ...right?

- 12. Restores: Oh, you actually wanted to use that data again? That’s… harder.

- 13. Restores: requirements
  - Primary
    - Data consistency across nodes
    - Data integrity maintained
    - Time to recovery
  - Secondary
    - Multiple snapshots at a time
    - Can be automated or run on-demand
    - Versatile end state
  Callout: “Spin up new cluster using restored data”

- 14. Restored cluster: requirements
  - Entirely separate from live cluster
    - No common members
    - No common seeds
    - Distinct provisioning identifiers (for us: AWS tags)
  - Same configuration as at snapshot (contained in backup metadata)
    - Cassandra version
    - Number of nodes
    - Token ranges
    - Rack distribution (on AWS: availability zones, AZs)
  Callout: “Restore-focused backups”

- 15. Ansible in the cloud: a caveat
  Programmatic launch of servers + Ansible host discovery happens once per playbook = launching a cluster requires 2 steps:
  1. Create instances
  2. Provision instances as Cassandra nodes

- 16. Restore playbook 1: create nodes
  1. Get metadata from S3
  2. Find number of nodes in original cluster
  3. Create new nodes
  New cluster name is stamped with snapshot ID, allowing:
  - Easy distinction from live cluster
  - Multiple concurrent restores per cluster
  Diagram labels: Ansible, New Cassandra cluster, S3
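As a rough illustration of steps 2 and 3, the node count and per-AZ layout can be read straight from the `tokens` section of the backup metadata. The `<source>-restore-<snapshot_id>` naming scheme and the function name `plan_restore` below are assumptions made for the example; the slides only say that the new cluster name is stamped with the snapshot ID.

```python
def plan_restore(metadata, source_cluster, snapshot_id):
    """Derive how many nodes to create, and in which AZs, from backup metadata."""
    nodes_per_az = {az: len(nodes) for az, nodes in metadata["tokens"].items()}
    return {
        # Hypothetical naming scheme: distinct from the live cluster and
        # unique per snapshot, so several restores can coexist.
        "cluster_name": f"{source_cluster}-restore-{snapshot_id}",
        "node_count": sum(nodes_per_az.values()),
        "nodes_per_az": nodes_per_az,
    }
```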

- 17. Restore playbook 2: provision nodes
  1. Get metadata from S3 (again)
  2. Parse metadata
     - Map source to target
  3. Find matching files in S3
     - Filter out some Cassandra system tables
  4. Partially provision nodes
     - Install Cassandra (use original C* version)
     - Mount data partition
  5. Download snapshot data to nodes
  6. Configure Cassandra and finish provisioning nodes
  Diagram labels: Ansible, New Cassandra cluster, S3 (twice), LOADED
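Step 3's filtering could be sketched as follows. The speaker notes name the filtered tables as peers and local, and stress that the schema is not filtered; these two tables describe the source node and its view of the source cluster, which presumably is why they should not be carried over. The S3 key layout (`.../<keyspace>/<table>-<id>/<file>`) and the function name `keep_key` are assumptions for illustration.

```python
# Tables in the `system` keyspace that describe the source node and cluster.
EXCLUDED_TABLES = {("system", "peers"), ("system", "local")}


def keep_key(key):
    """Return True if an S3 object key should be downloaded to the new node."""
    parts = key.split("/")
    keyspace, table_dir = parts[-3], parts[-2]
    # Table directories may carry an ID suffix, e.g. "peers-<id>".
    table = table_dir.split("-", 1)[0]
    return (keyspace, table) not in EXCLUDED_TABLES


def filter_keys(keys):
    """Keep everything except the excluded system tables."""
    return [key for key in keys if keep_key(key)]
```

Matching on the exact table name keeps other system tables, including the schema tables, in the restore set.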

- 18. Restores: node mapping
  - Source ⇒ Target
  - Include token ranges
  - Source AZs ⇒ Target AZs

- 19. Restores: random AZ assignment (diagram: source cluster and restored cluster, nodes labeled by AZ: 1a, 1c, 1d)

- 20. Why is this a problem?
  With NetworkTopologyStrategy and RF ≤ # of AZs, Cassandra would distribute replicas in different AZs, so data appearing in the same AZ will be skipped on read.
  - Effectively fewer replicas
  - Potential quorum loss
  - Inconsistent access of most recent data
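The effect can be illustrated with a deliberately simplified model of rack-aware replica placement: walk the ring clockwise from the key's token and take the first node found in each rack until RF replicas are chosen. This is not Cassandra's actual code; it assumes one token per node, a single data center, and RF no larger than the number of racks, and the ring and node names are made up.

```python
from bisect import bisect_left


def replicas(ring, racks, key_token, rf):
    """ring: (token, node) pairs sorted by token; racks: node -> rack (AZ)."""
    tokens = [token for token, _ in ring]
    start = bisect_left(tokens, key_token) % len(ring)
    chosen, seen_racks = [], set()
    for offset in range(len(ring)):
        _, node = ring[(start + offset) % len(ring)]
        if racks[node] not in seen_racks:  # nodes in an already-used rack are skipped
            chosen.append(node)
            seen_racks.add(racks[node])
        if len(chosen) == rf:
            break
    return chosen


RING = [(0, "n0"), (10, "n1"), (20, "n2"), (30, "n3"), (40, "n4"), (50, "n5")]
SOURCE_RACKS = {"n0": "1a", "n1": "1c", "n2": "1d", "n3": "1a", "n4": "1c", "n5": "1d"}
# Same tokens, but AZs assigned without regard to the source layout.
RANDOM_RACKS = {"n0": "1a", "n1": "1a", "n2": "1c", "n3": "1c", "n4": "1d", "n5": "1d"}

if __name__ == "__main__":
    print(replicas(RING, SOURCE_RACKS, 5, 3))
    print(replicas(RING, RANDOM_RACKS, 5, 3))
```

In this toy ring, a key with token 5 was written to n1, n2, and n3 in the source cluster. With the shuffled AZs the model selects n1, n2, and n4 instead: n3's copy is skipped because it shares an AZ with n2, and n4 is consulted even though it never received the data.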

- 21. Restores: AZ aware (diagram: source cluster and restored cluster, nodes labeled by AZ: 1a, 1c, 1d)
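An AZ-aware mapping from source nodes to target nodes might be computed like this. The sketch assumes the target cluster uses the same AZ names as the source and that each target node receives its source node's tokens through the `initial_token` setting in cassandra.yaml; neither detail is stated in the slides, and the function name `map_nodes` is hypothetical.

```python
def map_nodes(tokens_by_az, targets_by_az):
    """Pair each source node with a target node in the same AZ.

    tokens_by_az: the "tokens" section of the backup metadata.
    targets_by_az: AZ -> list of newly created host names.
    """
    mapping = {}
    for az, sources in tokens_by_az.items():
        targets = sorted(targets_by_az.get(az, []))
        if len(targets) != len(sources):
            raise ValueError(f"AZ {az}: need {len(sources)} nodes, have {len(targets)}")
        ordered_sources = sorted(sources, key=lambda source: source["hostname"])
        for source, target in zip(ordered_sources, targets):
            mapping[target] = {
                "source": source["hostname"],
                "az": az,
                # Assumed to be templated into cassandra.yaml as initial_token.
                "initial_token": ",".join(str(token) for token in source["tokens"]),
            }
    return mapping
```

Failing loudly on a count mismatch fits the rule that a restore should fail as a whole rather than produce a cluster with a different rack distribution.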

- 22. Implementation details
  - Snapshot ID
    - Datetime stamp (start of backup)
    - Restore defaults to latest
  - Restores use `auto_bootstrap: false`
    - Nodes already have their data!
  - Anti-corruption measures
    - Metadata manifest created after backup has succeeded
    - If any node fails, entire restore fails
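Because the manifest is written only after a backup has succeeded, "restore defaults to latest" can be read as "the newest snapshot ID that has a manifest". A minimal sketch, assuming S3 keys of the form `<snapshot_id>/...`, a manifest file named `metadata.json`, and fixed-width datetime stamps that sort lexicographically (all three are assumptions, as is the function name):

```python
def latest_complete_snapshot(keys, manifest_name="metadata.json"):
    """Pick the newest snapshot ID among those whose manifest exists."""
    complete = {
        key.split("/")[0]
        for key in keys
        if key.split("/")[-1] == manifest_name
    }
    if not complete:
        raise LookupError("no completed backup found")
    # Fixed-width datetime stamps compare correctly as plain strings.
    return max(complete)
```

A backup that was interrupted leaves data files but no manifest, so it is never selected.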

- 23. Extras
  - Automated runs using cron job, Ansible Tower or CD frameworks
  - Restricted-access backups for dev teams using internal service

- 24. Conclusions
  - Restore-focused backups are imperative for consistent restores
  - Ansible is easy to work with and provides centralized control with a distributed workload
  - Reliable backup restores are powerful and versatile

- 25. Thank you! Questions?
