Cassandra Architecture FTW

- 1. Cassandra Architecture FTW!

- 2. Cassandra Architecture FTW!

- 3. Cassandra won because it solved a problem that hadn’t yet been solved

- 4. What’s wrong with RDBMS?
  - Pros
    - Relational data modeling is well understood
    - SQL is easy to use and ubiquitous
    - ACID transactions - data integrity
  - Cons
    - Scaling is hard
    - Sharding and replication have side-effects (performance, reliability, cost)
    - Denormalize to get performance gains
  - For fun: Relational Scaling and the Temple of Gloom

- 5. The NoSQL Revolution (~2009)
  - Key-value – Dynamo, Riak, Voldemort, Redis, Memcached
  - Column-oriented – BigTable, HBase
  - Document – MongoDB, DocumentDB, CouchDB
  - Graph – Neo4j, DSE Graph
  - Multi-model – DataStax Enterprise, Cosmos DB
  - Relational counter-revolution – AWS Aurora, Google Spanner

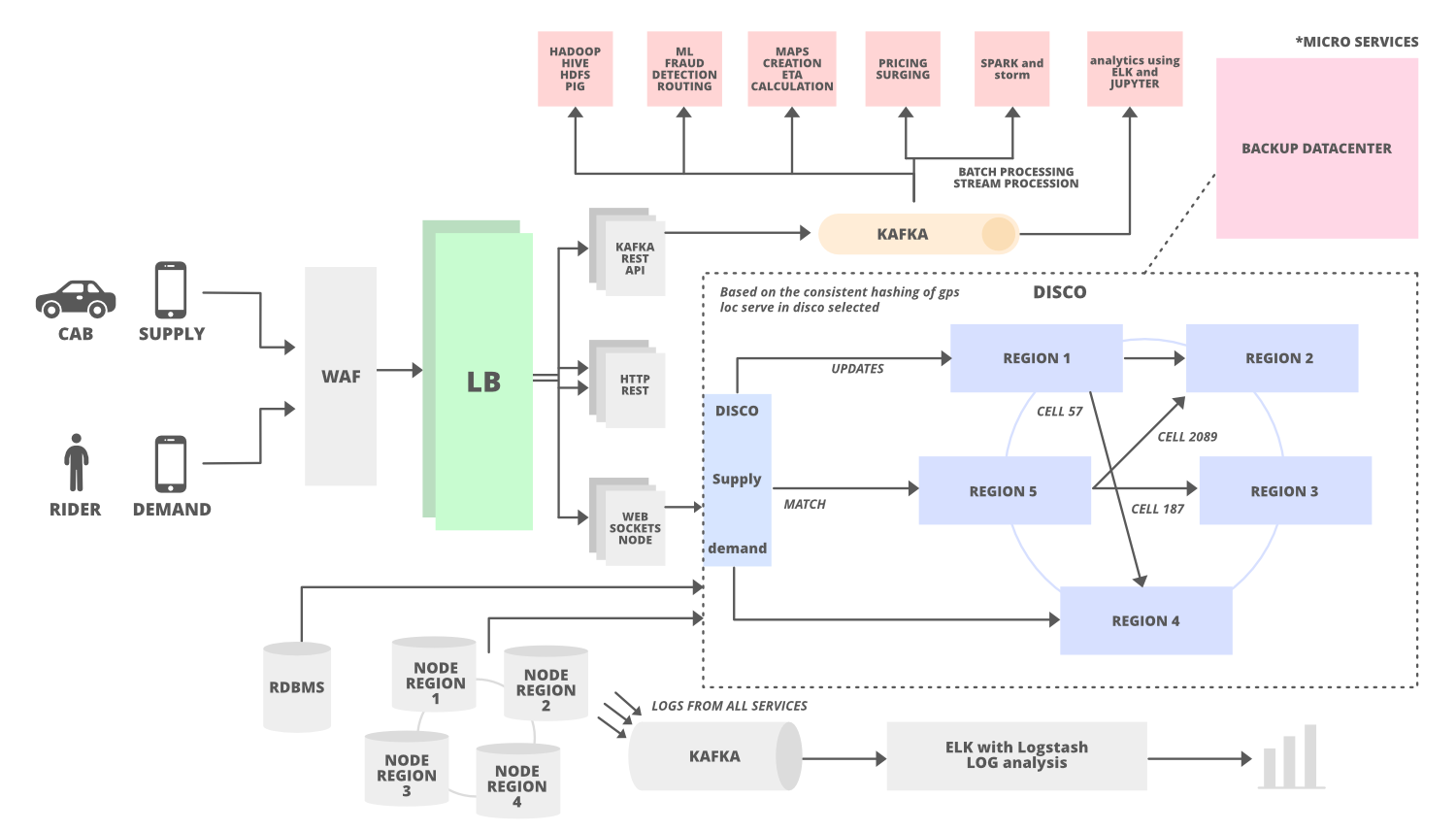

- 6. Apache Cassandra Architecture Qualities
  - Distributed, decentralized
  - Elastic scalability
  - Commodity hardware?
  - High performance
  - High availability / fault tolerant
  - Tuneable consistency
  - Apache Cassandra®, Apache Software Foundation

- 7. Two lies and a truth
  - Cassandra is column-oriented
  - Cassandra is a schemaless database
  - Cassandra is eventually consistent
  - They’re all lies, in a way

- 8. Problems Cassandra is Especially Good At
  - Large scale storage
    - >10s of TB
  - Lots of writes
    - Time-series data, IoT
  - Statistics and analytics
    - For example, as a Spark data source
  - Geographic distribution
    - Multiple data centers
  - Example use cases: Personalization, Customer 360, Recommendation, Fraud Detection, Inventory Management, Identity Management, Security, Supply Chain

- 9. A system must be designed for distribution from the beginning in order to scale the most effectively © DataStax, All Rights Reserved.

- 10. Cluster Topology
  - Organization
    - Nodes
    - Racks
    - Data Centers
  - Goals
    - Distribute copies (replicas) for high availability
    - Route queries to nearby nodes for high performance
  - Approaches
    - Gossip
    - Snitches

- 11. Clusters and Rings
  - Organization
    - Tokens
    - Token Ring
  - Goals
    - Distribute data evenly across nodes
  - Approaches
    - Partitioners
    - Virtual nodes
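The token ring idea on this slide can be sketched in a few lines of Python. This is a simplified illustration, not Cassandra's implementation: the `TokenRing` class, the node names, and the choice of 8 virtual nodes per node are all assumptions made for the example, and MD5 is only a convenient stand-in for a real partitioner's hash.

```python
import bisect
import hashlib


def token(key: str) -> int:
    """Map a key to a 64-bit token (MD5 is a stand-in hash for this sketch)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class TokenRing:
    """Hypothetical ring: each node claims several tokens (virtual nodes)."""

    def __init__(self, nodes, vnodes_per_node=8):
        self._ring = sorted(
            (token(f"{node}#{i}"), node)
            for node in nodes
            for i in range(vnodes_per_node)
        )
        self._tokens = [t for t, _ in self._ring]

    def owner(self, partition_key: str) -> str:
        # The owner is the first vnode at or after the key's token;
        # the modulo wraps past the last token back to the start of the ring.
        idx = bisect.bisect_left(self._tokens, token(partition_key))
        return self._ring[idx % len(self._ring)][1]


ring = TokenRing(["node-a", "node-b", "node-c"])
owners = {key: ring.owner(key) for key in ("customer-1", "customer-2", "customer-3")}
```

Because every node holds many small token ranges rather than one large one, adding or removing a node moves only a fraction of the keys, which is the motivation for virtual nodes.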

- 12. Replication and Consistency
  - Organization
    - Client (with driver)
    - Coordinator Node
    - Replica nodes
  - Goals
    - Consistent data even when nodes are down/unresponsive
  - Approaches
    - Replication Factor / Strategy
    - Consistency Level

- 13. Cassandra works because it leverages proven distributed system design patterns

- 14. Wait, who is this guy?

- 15. Proven Distributed System Techniques
  - Gossip
  - Failure detection (Phi)
  - Partitioning
  - Leaderless replication
  - Hinted Handoff
  - Bloom filters
  - Consensus algorithms (Paxos)
  - Log Structured Merge Storage Engine
    - Memtables
    - SSTables
    - Commit log
    - Compaction
    - Tombstones
  - Thrift API
  - SEDA

- 16. Log Structured Merge Storage Engine
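The pieces named for the log structured merge engine (memtables, SSTables, commit log, compaction, tombstones) fit together roughly as in the toy model below. `TinyLSM` and its `memtable_limit` parameter are hypothetical names for this sketch; it keeps everything in memory and drops tombstones immediately at compaction, whereas a real engine persists data to disk and retains tombstones for a grace period.

```python
TOMBSTONE = object()  # marker that records a delete


class TinyLSM:
    """Toy in-memory model of an LSM write and read path (illustrative only)."""

    def __init__(self, memtable_limit=2):
        self.commit_log = []   # append-only record of every mutation
        self.memtable = {}     # newest in-memory value per key
        self.sstables = []     # immutable flushed tables, oldest first
        self.memtable_limit = memtable_limit

    def put(self, key, value):
        self.commit_log.append((key, value))
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def delete(self, key):
        # A delete is just a write of a tombstone.
        self.put(key, TOMBSTONE)

    def flush(self):
        # Freeze the memtable as a sorted, immutable table.
        if self.memtable:
            self.sstables.append(dict(sorted(self.memtable.items())))
            self.memtable = {}

    def get(self, key):
        # Newest data wins: memtable first, then SSTables newest to oldest.
        for table in [self.memtable, *reversed(self.sstables)]:
            if key in table:
                value = table[key]
                return None if value is TOMBSTONE else value
        return None

    def compact(self):
        # Merge oldest to newest so later values overwrite earlier ones,
        # then drop keys whose latest value is a tombstone.
        merged = {}
        for table in self.sstables:
            merged.update(table)
        live = {k: v for k, v in merged.items() if v is not TOMBSTONE}
        self.sstables = [dict(sorted(live.items()))] if live else []


db = TinyLSM(memtable_limit=2)
db.put("a", 1)
db.put("b", 2)    # second write fills the memtable and triggers a flush
db.put("a", 3)
db.delete("b")    # tombstone write triggers a second flush
```

Writes never modify an existing table, so they stay cheap; the cost is deferred to reads that may consult several tables and to compaction that merges them.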

- 17. Cassandra’s data model is the key enabler of its high performance and scalability

- 18. Terminology - Cassandra’s Data Model

- 19. Terminology – Partition and Clustering Keys

- 20. Partition and Clustering Key Example
  - Labeling: K – partition key, C – clustering key
  - PRIMARY KEY ((customer_id), contact_time)
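One way to picture the primary key above: the partition key (customer_id) selects a partition, and the clustering key (contact_time) orders rows inside it. The sketch below models that behavior in plain Python. `ContactTable`, the `note` column, and the sample values are assumptions for illustration and do not come from the slides.

```python
import bisect
from collections import defaultdict


class ContactTable:
    """Toy model of PRIMARY KEY ((customer_id), contact_time)."""

    def __init__(self):
        # customer_id -> list of (contact_time, note), kept sorted by contact_time
        self._partitions = defaultdict(list)

    def insert(self, customer_id, contact_time, note):
        rows = self._partitions[customer_id]
        times = [t for t, _ in rows]
        idx = bisect.bisect_left(times, contact_time)
        if idx < len(rows) and rows[idx][0] == contact_time:
            rows[idx] = (contact_time, note)       # same primary key: overwrite
        else:
            rows.insert(idx, (contact_time, note))  # keep clustering order

    def by_customer(self, customer_id, start=None, end=None):
        # Reads address one partition and may slice a clustering-key range.
        rows = self._partitions.get(customer_id, [])
        return [
            (t, n) for t, n in rows
            if (start is None or t >= start) and (end is None or t <= end)
        ]


table = ContactTable()
table.insert("c1", "2020-01-03", "call")
table.insert("c1", "2020-01-01", "email")
table.insert("c2", "2020-01-02", "chat")
table.insert("c1", "2020-01-03", "call back")  # same key, replaces the earlier row
```

All rows for a customer live together and come back already sorted by contact time, which is why queries that name the partition key and a clustering range are efficient.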

- 21. Cassandra survived because it didn’t over-extend itself

- 22. Tuneable Consistency and Consistency Levels
  - ONE, TWO, THREE
    - Useful for speed
  - ANY (Write only, use with care)
  - ALL
    - Number of nodes to respond = RF
    - Overly restrictive?
  - QUORUM
    - (RF / 2) + 1, with the division rounded down
    - Frequently very useful
  - LOCAL_ONE, LOCAL_QUORUM
    - Similar to above, but nodes must be in local data center
  - EACH_QUORUM
    - Quorum of nodes must respond in each data center
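The replica counts implied by the single data center levels can be written out as a small helper. `required_responses` is a hypothetical function for this sketch, not a driver API, and it deliberately leaves out ANY and the data center aware levels.

```python
def required_responses(level: str, rf: int) -> int:
    """Replicas that must respond for a consistency level, given replication factor rf."""
    fixed = {"ONE": 1, "TWO": 2, "THREE": 3}
    if level in fixed:
        return fixed[level]
    if level == "QUORUM":
        return rf // 2 + 1  # integer division, then add one
    if level == "ALL":
        return rf
    raise ValueError(f"level not covered by this sketch: {level}")


quorum_rf3 = required_responses("QUORUM", 3)
quorum_rf5 = required_responses("QUORUM", 5)
```

With RF = 3 a quorum is 2 replicas, so one replica can be down without failing QUORUM requests, while ALL fails as soon as any replica is unavailable.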

- 23. Strong Consistency vs. Eventual Consistency
  - Eventual consistency
    - e.g. W ONE, R QUORUM
    - Use cases
      - Write heavy
      - Data not read immediately
  - Strong consistency
    - e.g. W QUORUM, R QUORUM
    - Use cases
      - Read after write
      - Data loading with validation
  - Strong consistency formula
    - R + W > RF = strong consistency
    - R: the read replica count required by consistency level
    - W: the write replica count required by consistency level
    - RF: replication factor
    - Example: W QUORUM, R QUORUM, RF = 3
      - 2 + 2 > 3
      - Implication: all client reads will see the most recent write
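Why R + W > RF gives strong consistency can be checked by brute force: if the inequality holds, every possible set of R read replicas shares at least one replica with every possible set of W write replicas, so a read always touches a replica that has the latest write. The function names below are hypothetical and the check is a sketch for small RF values only.

```python
from itertools import combinations


def is_strong(r: int, w: int, rf: int) -> bool:
    """The formula from the slide: R + W > RF."""
    return r + w > rf


def always_overlaps(r: int, w: int, rf: int) -> bool:
    """True if every choice of w write replicas intersects every choice of r read replicas."""
    replicas = range(rf)
    return all(
        set(write_set) & set(read_set)
        for write_set in combinations(replicas, w)
        for read_set in combinations(replicas, r)
    )


quorum_quorum = always_overlaps(r=2, w=2, rf=3)  # W QUORUM, R QUORUM
one_quorum = always_overlaps(r=2, w=1, rf=3)     # W ONE, R QUORUM
```

For RF = 3, QUORUM reads and writes always overlap, while a write at ONE can land on the single replica that a QUORUM read happens to skip.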

- 24. Inventory and Tuneable Consistency

  | Approach | Example | Scope |
  | --- | --- | --- |
  | QUORUM consistency for reads and writes | Ensuring latest inventory counts are always read | Data Tier |
  | Lightweight Transaction | Updating inventory counts | Data Tier |
  | Logged Batch | Writing to multiple denormalized tables | Data Tier |
  | Retrying failed calls | Data synchronization, reservation processing | Service / Application Tier |
  | Compensating processes | Verifying reservation processing | System |
  | Customer service remediation | Rebooking, back order, substitution | System |

  Slide axis labels: Eventual consistency, Strong consistency

- 25. Microservices and Polyglot Persistence
  - Diagram services: Service A, Service B, Service C, Service D, Service E
  - Diagram data store types: Tabular, Key-value, Relational, Document, Graph
  - Diagram data categories: Core application data; Reference data; Content; Customer relationship data; Legacy, low volume data

- 26. Cassandra will remain viable for many years because of its extensibility and pluggability

- 27. Snitch Partitioner Compaction Replication Secondary Index Storage Engine? Auth/Auth

- 28. Cassandra’s deployment flexibility can’t be matched by cloud vendor database offerings

- 29. Consistency Level and Deployment: Active-Active, Multi-Region
  - EACH_QUORUM may limit availability if connection to one region/data center is down
  - LOCAL_QUORUM, relying on Cassandra to complete writes to remote data centers
  - QUORUM is a reasonable middle-ground approach
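The availability trade-off between these three levels can be made concrete with a small check of whether a request could be satisfied given how many replicas are reachable in each data center. `can_satisfy`, the data center names, and the replication factors are assumptions for this sketch; it treats QUORUM as a majority of the summed replication factors across data centers.

```python
def quorum(n: int) -> int:
    return n // 2 + 1


def can_satisfy(level: str, local_dc: str, rf_by_dc: dict, live_by_dc: dict) -> bool:
    """Could a request at this level succeed with the given reachable replicas?"""
    if level == "LOCAL_QUORUM":
        # Only the coordinator's own data center matters.
        return live_by_dc[local_dc] >= quorum(rf_by_dc[local_dc])
    if level == "EACH_QUORUM":
        # Every data center must reach its own quorum.
        return all(live_by_dc[dc] >= quorum(rf) for dc, rf in rf_by_dc.items())
    if level == "QUORUM":
        # A majority of all replicas, wherever they are.
        return sum(live_by_dc.values()) >= quorum(sum(rf_by_dc.values()))
    raise ValueError(f"level not covered by this sketch: {level}")


rf = {"east": 3, "west": 3}
degraded = {"east": 3, "west": 1}   # west has lost two of its three replicas
results = {
    level: can_satisfy(level, "east", rf, degraded)
    for level in ("LOCAL_QUORUM", "QUORUM", "EACH_QUORUM")
}
```

In this scenario LOCAL_QUORUM and QUORUM still succeed from the east data center, while EACH_QUORUM fails because west cannot reach its own quorum. If west were completely unreachable, QUORUM would fail as well with these replication factors, since 3 of 6 replicas is not a majority.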

- 30. Consistency Level and Deployment: Separate data center for analytics
  - Writes in “online DC” – LOCAL_QUORUM
    - Writes to analytics DC in background
    - Analytics DC availability/performance decoupled from online DC
    - EACH_QUORUM, QUORUM overkill, unless “real-time” analytics required
  - Reads in “analytics DC” – LOCAL_QUORUM
    - Or even LOCAL_ONE

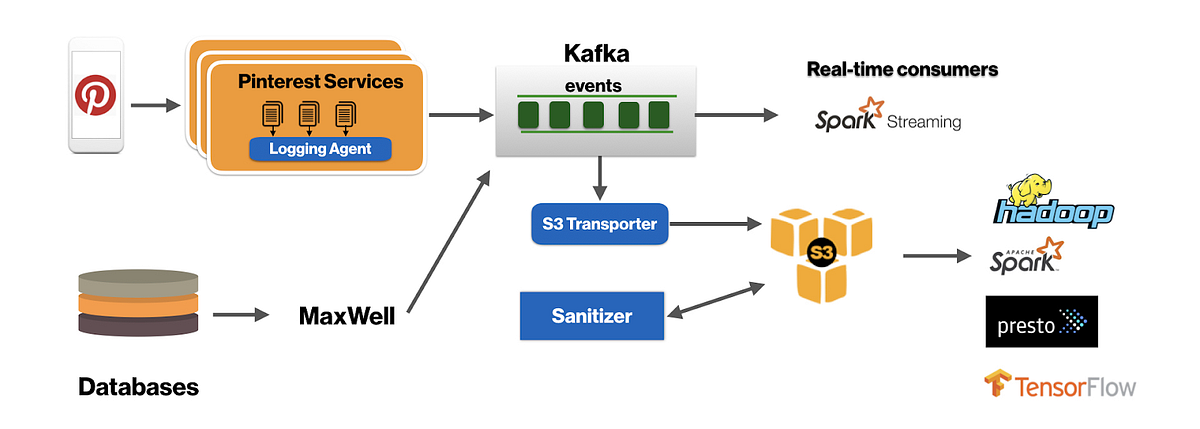

- 31. Cassandra is most powerful when combined with complementary technologies to form a data platform

- 32. Spark + Cassandra
  - Access Cassandra from Spark via DataStax connector
  - Co-locate Spark and Cassandra
  - Diagram (stack): Spark SQL, Spark Streaming, MLlib, GraphX, SparkR; Spark Core Engine; DataStax Spark-Cassandra Connector; Cassandra
  - DataStax is a registered trademark of DataStax, Inc. and its subsidiaries in the United States and/or other countries.

- 33. DSE Analytics
  - Diagram labels: Application, Real Time Operations, Cassandra, Analytics, Analytics Queries, Your Analytics, Real Time Replication, Single DSE Cluster
  - Streaming, ad-hoc, and batch
    - High-performance
    - Workload management
    - SQL reporting
  - Compared to self-managed Spark cluster:
    - No ETL
    - True HA without ZooKeeper

- 34. DSE Core - Certified Apache Cassandra
  - The best distribution of Apache Cassandra™
    - Production certified Cassandra
    - Performance improvements
  - Advanced Security
    - Multi-tenancy through row-level access control
  - Advanced Replication
    - Great for retail and IoT use cases

- 35. https://github.com/killrvideo KillrVideo – a reference application

- 36. https://academy.datastax.com DataStax Academy – a place to learn

- 37. Contact: jeff.carpenter@datastax.com, @jscarp, jeffreyscarpenter, medium.com/@jscarp
