
Building a Multi-Region Cluster at Target (Aaron Ploetz, Target) | Cassandra Summit 2016

- 1. Building a Multi-Region Cluster at Target
  - Presenters: Andrew From and Aaron Ploetz

- 2.
  1. Introduction
  2. Target’s DCD Cluster
  3. Problems
  4. Solutions
  5. Current State
  6. Lessons Learned

  © DataStax, All Rights Reserved.

- 3. TTS - CPE Cassandra
  - Data model consulting
  - Deployment
  - Operations

- 4. $ whoami_ (Aaron Ploetz)
  - B.S.-MCS, University of Wisconsin-Whitewater.
  - M.S.-SED, Regis University.
  - 10+ years of experience with distributed storage technology.
  - Supporting Cassandra in production since v0.8.
  - Contributor to the Apache Cassandra project (cqlsh).
  - Contributor to the Cassandra tag on Stack Overflow.
  - 3x DataStax MVP for Apache Cassandra (2014-17).

- 5. $ whoami_ (Andrew From)
  - B.S. Computer Engineering, University of Minnesota.
  - Using Cassandra in production since v2.0.
  - Contributor to the Ratpack Framework project.
  - Contributor to the Cassandra tag on Stack Overflow.
  - Maintainer of the statsd-kafka-backend plugin in Target's public GitHub org.
  - Passionate about metrics, monitoring, and alerting (not just on Cassandra).

- 6. Introduction: Cassandra at Target

- 7. Cassandra clusters at Target: Cartwheel, Personalization, GAM, DCD, Subscriptions, Enterprise Services, GDM, Item Locations, Checkout, Adaptive-SEO

- 8. Versions used at Target
  - The version depends on the experience of the application team.
  - Most clusters run DSE 4.0.3.
  - Some clusters have been built with Apache Cassandra 2.1.12 through 2.1.15.
  - Most new clusters are built on 2.2.7.

- 9. Target’s DCD Cluster
  - Data footprint: 350 GB
  - Multi-tenant cluster, supporting several teams:
    - Rating and Reviews
    - Pricing
    - Item
    - Promotions
    - Content
    - A/B Testing
    - Back To School, Shopping Lists
    - …and others

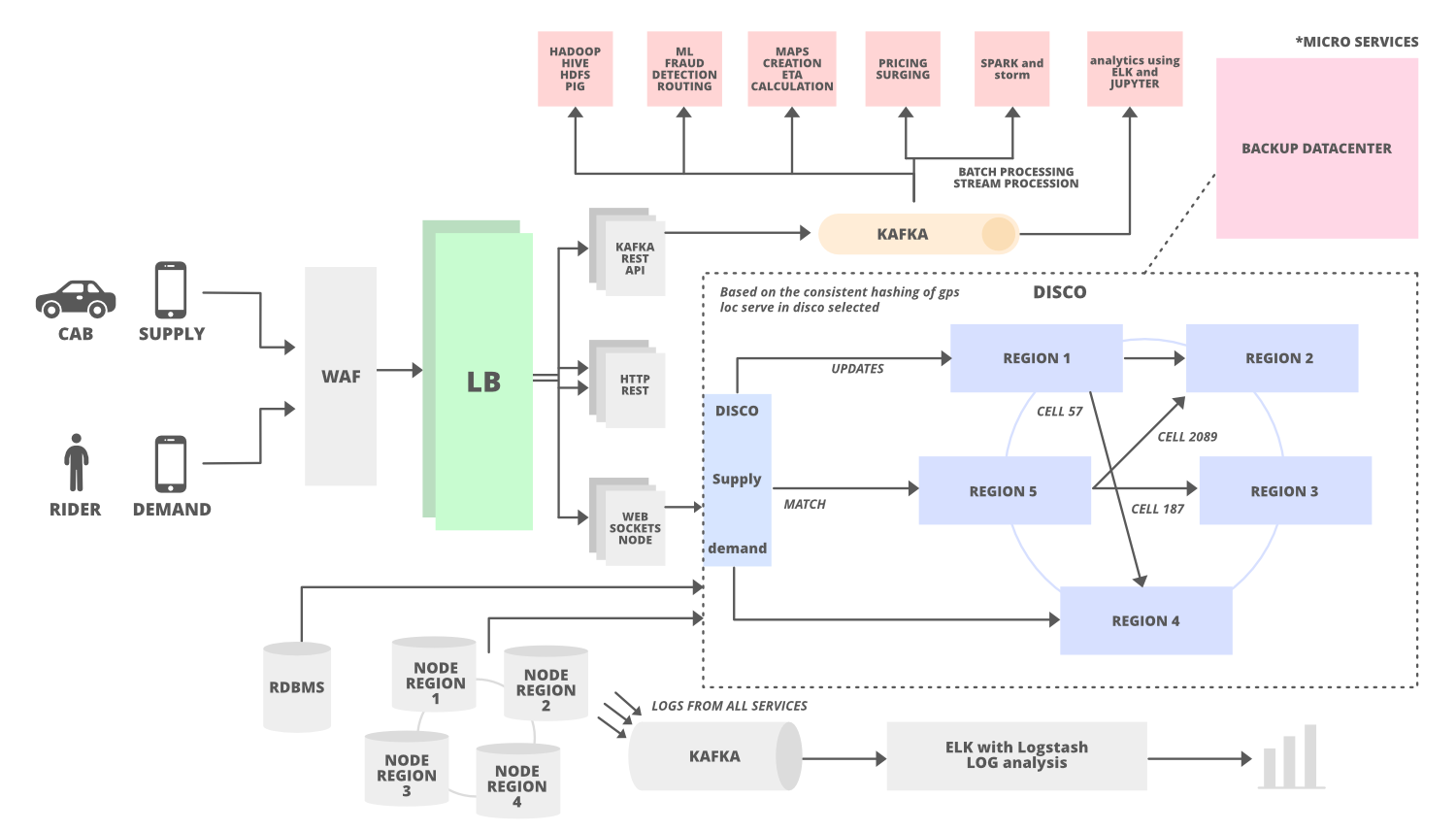

- 10. 2015 Peak (Black Friday -> Cyber Monday)
  - DataStax Enterprise 4.0.3 (Cassandra 2.0.7.31)
  - Java 1.7
  - CMS GC
  - 18 nodes (256GB RAM, 6-core, 24 HT CPUs, 1TB disk):
    - 6 nodes at two Target data centers (3 on-the-metal nodes each).
    - 12 nodes at two Tel-co data centers (6 on-the-metal nodes each).
  - 500 Mbit connection to the Tel-co data centers.
  - Sustained between 5000 and 5500 TPS during Peak.
  - Zero downtime!

- 11. 2016 Q1: Expand DCD to the cloud
  - Plan: expand the DCD Cassandra cluster to the cloud East and West regions:
    - Add six cloud instances in each region.
    - VPN connection to the Target network.
    - Data locality: support teams spinning up scalable application servers in the cloud.
  - Went “live” in early March 2016:
    - Cloud-West: 6 nodes
    - Cloud-East: 6 nodes
    - TTC: 3 nodes
    - TTCE: 3 nodes
    - Tel-co 1: 6 nodes
    - Tel-co 2: 6 nodes

- 12. Problems

- 13. Problems
  - VPN not stable between Cloud and Target.
  - GC pauses causing application latency.
  - Orphaned repair streams building up over time.
  - Data inconsistency between Tel-co and Cloud.
  - Issues with large batches.
  - Issues with poor data models.

- 14. Solutions

- 15. VPN not stable between Cloud and Target
  - Gossip between Tel-co and Cloud was unreliable.
  - Nodes were reported as “DN” (down) when they were actually “UN” (up).
  - Worked with the Cloud Provider admins and reviewed the architecture.
  - Set up increased monitoring to pinpoint DCD Cassandra downtimes, and cross-referenced them with the VPN connection logs, together with our (Target) Cloud Platform Engineering (CPE) Network team and the Cloud Provider admins.
  - Our CPE Network team worked with the Cloud Provider to:
    - Ensure proper network configuration.
    - Set up “bi-directional” dead peer detection (DPD).
    - Add a “DPD Responder” that handles dead peer requests from the Cloud endpoint.
    - Upgrade our VPN connections to 1 Gbit.
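Healthy nodes flapping to “DN” over a lossy VPN fits how Cassandra's gossip failure detection works: it is a phi accrual detector, which convicts a peer once the time since its last heartbeat becomes large relative to the mean heartbeat interval it has observed. The sketch below is a simplified model, not Cassandra's code. It assumes exponentially distributed heartbeat intervals (the approximation Cassandra's `FailureDetector` uses) and the default `phi_convict_threshold` of 8 from cassandra.yaml; the class and method names are hypothetical.

```python
import math
from collections import deque


class PhiAccrualDetector:
    """Simplified phi accrual failure detector (hypothetical sketch).

    Assumes exponentially distributed heartbeat inter-arrival times, so
    phi = (elapsed / mean_interval) * log10(e).
    """

    def __init__(self, window_size: int = 1000, convict_threshold: float = 8.0):
        self.intervals = deque(maxlen=window_size)  # recent inter-arrival times (s)
        self.last_heartbeat = None                  # time of last heartbeat (s)
        self.convict_threshold = convict_threshold

    def heartbeat(self, now: float) -> None:
        # Record the gap since the previous heartbeat, then remember this one.
        if self.last_heartbeat is not None:
            self.intervals.append(now - self.last_heartbeat)
        self.last_heartbeat = now

    def phi(self, now: float) -> float:
        if self.last_heartbeat is None or not self.intervals:
            return 0.0  # no history yet, so no suspicion
        mean = sum(self.intervals) / len(self.intervals)
        elapsed = now - self.last_heartbeat
        # -log10(P(no heartbeat for `elapsed` seconds)) under an exponential model
        return (elapsed / mean) * math.log10(math.e)

    def is_down(self, now: float) -> bool:
        return self.phi(now) > self.convict_threshold


if __name__ == "__main__":
    detector = PhiAccrualDetector()
    for t in range(11):  # heartbeats every second, t = 0..10
        detector.heartbeat(float(t))
    print(round(detector.phi(20.0), 2), detector.is_down(20.0))  # 4.34 False
    print(round(detector.phi(30.0), 2), detector.is_down(30.0))  # 8.69 True
```

With a 1 s heartbeat interval, a threshold of 8 is crossed after roughly 18 s of silence, so a VPN that stalls for that long can mark perfectly healthy nodes as down.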

- 16. GC pauses causing application latency
  - Stop-the-world (STW) GC pauses of 10-20 seconds (or more) rendered nodes unresponsive during nightly batch jobs (9pm to 6am), most often around 2am.
  - This was a small issue just prior to the 2015 Peak season; it became more of a problem once we expanded to the cloud.
  - Worked with our teams to refine their data models and write patterns.
  - Upgraded to Java 1.8.
  - Enabled G1GC, our biggest “win.”
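Spotting that long pauses cluster “most often around 2am” comes down to bucketing pause durations by hour of day. The sketch below assumes the pauses have already been extracted from the GC logs into hypothetical `(datetime, pause_ms)` tuples (log formats differ between CMS, G1, and Java versions, so parsing is left out). The 500 ms default threshold mirrors the `MaxGCPauseMillis=500` target the cluster later ran with.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Tuple


def long_pauses_by_hour(
    pauses: Iterable[Tuple[datetime, float]], threshold_ms: float = 500.0
) -> Counter:
    """Count GC pauses longer than threshold_ms, keyed by hour of day (0-23)."""
    counts = Counter()
    for when, pause_ms in pauses:
        if pause_ms > threshold_ms:
            counts[when.hour] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample data: (timestamp, STW pause in milliseconds)
    sample = [
        (datetime(2016, 3, 10, 1, 55), 12_000.0),
        (datetime(2016, 3, 10, 2, 5), 18_500.0),
        (datetime(2016, 3, 10, 2, 40), 10_200.0),
        (datetime(2016, 3, 10, 14, 0), 120.0),
    ]
    worst_hour, count = long_pauses_by_hour(sample).most_common(1)[0]
    print(f"hour {worst_hour:02d}: {count} long pauses")  # hour 02: 2 long pauses
```

Lining these buckets up against the batch job schedule is what ties the pauses to specific write patterns.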

- 17. Orphaned repair streams building up over time
  - Due to VPN instability, repair streams between Cloud and Tel-co could not complete reliably.
  - Orphaned streams (pending repairs) built up over time; the system load average on Tel-co and Target nodes rose, and nodes eventually became unresponsive.
  - Examined our use cases and scheduled “focused” repair jobs, only for certain applications:
    `nodetool -h <node_ip> repair -pr -hosts <source_ip1,source_ip2,etc…>`
  - Only run repairs between Cloud and Target, or between Tel-co and Target.
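The “focused” repair rule (Target with Cloud, or Target with Tel-co, but never Cloud with Tel-co directly) can be written as a small planning step that emits commands in the same form as the `nodetool` line above. This is a hypothetical sketch: the node inventory, IPs, and data center labels are invented, and it only builds argument lists rather than running them. The node being repaired is added to its own `-hosts` list because, as far as we know, Cassandra rejects an explicit host list that omits the coordinating node; check the `repair` options your Cassandra/DSE version supports before relying on this.

```python
from typing import Dict, List

# Hypothetical inventory: node IP -> data center group
NODES: Dict[str, str] = {
    "10.0.0.1": "Target",
    "10.0.0.2": "Target",
    "10.1.0.1": "Cloud",
    "10.2.0.1": "Tel-co",
}

# Data center pairings allowed to repair against each other (Cloud/Tel-co excluded)
ALLOWED_PAIRS = {frozenset({"Cloud", "Target"}), frozenset({"Tel-co", "Target"})}


def repair_commands(nodes: Dict[str, str]) -> List[List[str]]:
    """Build one focused repair command per non-Target node."""
    commands = []
    for node_ip, dc in sorted(nodes.items()):
        if dc == "Target":
            continue  # in this sketch, Target nodes only act as repair peers
        peers = sorted(
            ip for ip, other_dc in nodes.items()
            if frozenset({dc, other_dc}) in ALLOWED_PAIRS
        )
        if not peers:
            continue
        hosts = [node_ip] + peers  # include the node being repaired
        commands.append(
            ["nodetool", "-h", node_ip, "repair", "-pr", "-hosts", ",".join(hosts)]
        )
    return commands


if __name__ == "__main__":
    for cmd in repair_commands(NODES):
        print(" ".join(cmd))
```

Keeping the pairing rule in data (`ALLOWED_PAIRS`) makes it easy to review with the application teams and to change if the topology does.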

- 18. Data inconsistency between Tel-co and Cloud
  - Data inconsistency was causing problems for certain teams.
  - Upgraded our Tel-co connection to 1 Gbit, expandable to 10 Gbit on request.
  - But this was ultimately the wall we could not get around:
    - Problems with repairs, but also issues with bootstrapping and decommissioning nodes.
  - Met with ALL application teams:
    - Discussed a future plan to split off the Cloud nodes (with six new Target nodes) into a new “DCD Cloud” cluster.
    - Talked through application requirements and determined who would need to move or split to “DCD Cloud.”
    - Also challenged the application teams on who really needed to be in both Cloud and Tel-co. It turned out that only the Pricing team needed both; the rest could meet their requirements from one or the other.
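A back-of-the-envelope calculation shows why link capacity dominates bootstrapping, decommissioning, and repair across data centers. The sketch below assumes, purely for illustration, that the whole 350 GB data footprint must cross one WAN link at its nominal rate with no protocol overhead or competing traffic; real streaming is slower, and each node only streams its own share of the data.

```python
def transfer_hours(data_gb: float, link_mbit_per_s: float) -> float:
    """Ideal transfer time in hours for data_gb gigabytes (10**9 bytes)
    over a link of link_mbit_per_s megabits per second (10**6 bits/s)."""
    bits = data_gb * 8 * 10**9
    seconds = bits / (link_mbit_per_s * 10**6)
    return seconds / 3600


if __name__ == "__main__":
    for label, mbit in [("500 Mbit", 500), ("1 Gbit", 1_000), ("10 Gbit", 10_000)]:
        print(f"{label:>8}: {transfer_hours(350, mbit):.2f} h")
```

Under these ideal assumptions the 500 Mbit link needs about 93 minutes, 1 Gbit about 47 minutes, and 10 Gbit under 5 minutes; any instability that restarts a stream multiplies those figures.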

- 19. Issues with large batches
  - Teams unknowingly using batches:
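As a generic illustration (not the specific cases from the talk): batches that group many unrelated partitions force a single coordinator to handle every write in them, and logged multi-partition batches add batchlog overhead on top. The sketch below is a hypothetical pre-flight check on a batch described as `(partition_key, size_bytes)` pairs. The 5 KB limit is an assumption modeled on the default of `batch_size_warn_threshold_in_kb` in later cassandra.yaml files.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed limit, modeled on batch_size_warn_threshold_in_kb: 5
WARN_THRESHOLD_BYTES = 5 * 1024


@dataclass
class BatchReport:
    partitions: int
    total_bytes: int
    warnings: List[str]


def check_batch(mutations: List[Tuple[str, int]]) -> BatchReport:
    """Summarize a batch given (partition_key, serialized_size_bytes) pairs."""
    partitions = {key for key, _ in mutations}
    total = sum(size for _, size in mutations)
    warnings = []
    if len(partitions) > 1:
        warnings.append(f"batch spans {len(partitions)} partitions")
    if total > WARN_THRESHOLD_BYTES:
        warnings.append(f"batch is {total} bytes (> {WARN_THRESHOLD_BYTES})")
    return BatchReport(len(partitions), total, warnings)


if __name__ == "__main__":
    # A "bulk load" of 100 unrelated rows: the pattern to avoid
    report = check_batch([(f"item:{i}", 200) for i in range(100)])
    print(report.warnings)
```

A batch confined to one partition is the case batches are designed for; larger loads are usually better sent as individual (possibly asynchronous) writes.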

- 20. Poor data models
  - Using Cassandra like a batch-processing system:
    - Reading large tables entirely with `SELECT * FROM table;`
    - Re-inserting data into a table on a schedule, even when the data has not changed.
  - Lack of denormalized tables to support specific queries.
  - Queries using ALLOW FILTERING.
  - “XREF” tables.
  - Queue-like usage of tables.
  - Extremely large row sizes.
  - Abuse of collection data types.
  - Read-before-write (or writing, then immediately reading).
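The gap between filtering and modeling a table around the query can be shown without a cluster. The sketch below is an in-memory analogy, not Cassandra code: a query with `ALLOW FILTERING` behaves like scanning every row and discarding the non-matches, while a denormalized, query-specific table (here a hypothetical `items_by_store`, partitioned by store) answers the same question with a single partition lookup, at the cost of storing the data twice.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical base table: item_id -> row
items: Dict[str, dict] = {
    "i1": {"item_id": "i1", "store": "0101", "price": 9.99},
    "i2": {"item_id": "i2", "store": "0202", "price": 4.50},
    "i3": {"item_id": "i3", "store": "0101", "price": 1.25},
}


def scan_with_filter(store: str) -> List[dict]:
    """Analogous to SELECT ... WHERE store = ? ALLOW FILTERING: touches every row."""
    return [row for row in items.values() if row["store"] == store]


def build_items_by_store(base: Dict[str, dict]) -> Dict[str, List[dict]]:
    """Denormalized copy keyed by the query's predicate (store = partition key).
    In Cassandra the application would write to both tables on every change."""
    by_store = defaultdict(list)
    for row in base.values():
        by_store[row["store"]].append(row)
    return dict(by_store)


if __name__ == "__main__":
    items_by_store = build_items_by_store(items)
    # Same answer; the lookup reads one "partition" instead of the whole table.
    print([r["item_id"] for r in scan_with_filter("0101")])
    print([r["item_id"] for r in items_by_store.get("0101", [])])
```

The scan grows with the size of the whole table, while the lookup grows only with the size of the requested partition, which is why query-first tables scale and filtered scans do not.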

- 21. Current State

- 22. August 2016
  - DataStax Enterprise 4.0.3 (Cassandra 2.0.7.31); upgrade to 2.1 planned.
  - Java 1.8
  - G1GC, 32GB heap, MaxGCPauseMillis=500
  - DCD Classic: 18 nodes (256GB RAM, 24 HT CPUs, 1TB disk):
    - 6 nodes at two Target data centers (3 on-the-metal nodes each).
    - 12 nodes at two Tel-co data centers (6 on-the-metal nodes each).
  - DCD Cloud: 18 nodes (256GB RAM, 24 HT CPUs):
    - 6 nodes at two Target data centers (3 on-the-metal nodes each).
    - 12 nodes at two Cloud data centers (6 i2.2xlarge nodes each).
  - Upgraded connection (up to 10 Gbit) with Tel-co.

- 23. Lessons Learned
  - Spend time on your data model!
    - It is the most overlooked aspect of architecting with Cassandra.
  - Build relationships with your application teams.
  - Build tables to suit the query patterns.
  - Talk about consistency requirements with your app teams.
  - Ask the questions:
    - Do you really need to replicate everywhere?
    - Do you really need to read/write at LOCAL_QUORUM?
    - What is your anticipated read/write ratio?
  - Watch for tombstones! TTL-ing data helps, but TTLs are not free!
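The LOCAL_QUORUM question is easier to discuss with numbers. A quorum of RF replicas is floor(RF / 2) + 1: `QUORUM` counts replicas across all data centers, `LOCAL_QUORUM` counts only the coordinator's data center, and `EACH_QUORUM` needs a quorum in every data center. The sketch below computes these for a hypothetical replication map (RF 3 in each of six data centers, loosely modeled on the 2016 Q1 layout; the talk does not state the actual replication factors).

```python
from typing import Dict


def quorum(rf: int) -> int:
    """Replicas needed for a quorum of rf replicas: floor(rf / 2) + 1."""
    return rf // 2 + 1


def required_acks(replication: Dict[str, int], level: str, local_dc: str) -> Dict[str, int]:
    """Replica acknowledgements required per data center for a consistency level.
    Cluster-wide requirements (ONE, QUORUM) are reported under the key "*"."""
    if level == "ONE":
        return {"*": 1}
    if level == "LOCAL_ONE":
        return {local_dc: 1}
    if level == "QUORUM":
        return {"*": quorum(sum(replication.values()))}
    if level == "LOCAL_QUORUM":
        return {local_dc: quorum(replication[local_dc])}
    if level == "EACH_QUORUM":
        return {dc: quorum(rf) for dc, rf in replication.items()}
    if level == "ALL":
        return dict(replication)
    raise ValueError(f"unsupported consistency level: {level}")


# Hypothetical replication map: RF 3 in each of six data centers
REPLICATION = {
    dc: 3 for dc in ["Cloud-West", "Cloud-East", "TTC", "TTCE", "Tel-co 1", "Tel-co 2"]
}

if __name__ == "__main__":
    for level in ["LOCAL_QUORUM", "QUORUM", "EACH_QUORUM"]:
        print(level, required_acks(REPLICATION, level, local_dc="Cloud-West"))
```

With these assumed factors, `QUORUM` needs 10 of 18 replicas, so at least 7 acknowledgements must come over the WAN, while `LOCAL_QUORUM` waits for only 2 local replicas. Writes are still sent to every replica in every data center regardless of the level, which is why “do you really need to replicate everywhere?” is the first question to ask.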

- 24. Lessons Learned (part 2)
  - Involve your network team.
    - Use a metrics solution (Graphite/Grafana, OpsCenter, etc.).
    - Give them exact data to work with.
  - When building a new cluster with data centers in the cloud, thoroughly test out the operational aspects of your cluster:
    - Bootstrapping/decommissioning a node.
    - Running repairs.
    - Triggering a GC (cassandra-stress can help with that).
  - G1GC helps with larger heap sizes, but it’s not a silver bullet.

- 25. Questions?
